The world of finance is buried in paperwork, and layers of human workers are needed to go through it. Lawyers, financial planners, and accountants all work on the same problem, spending hours making sense of this mass of data. The process is time-consuming and very costly.

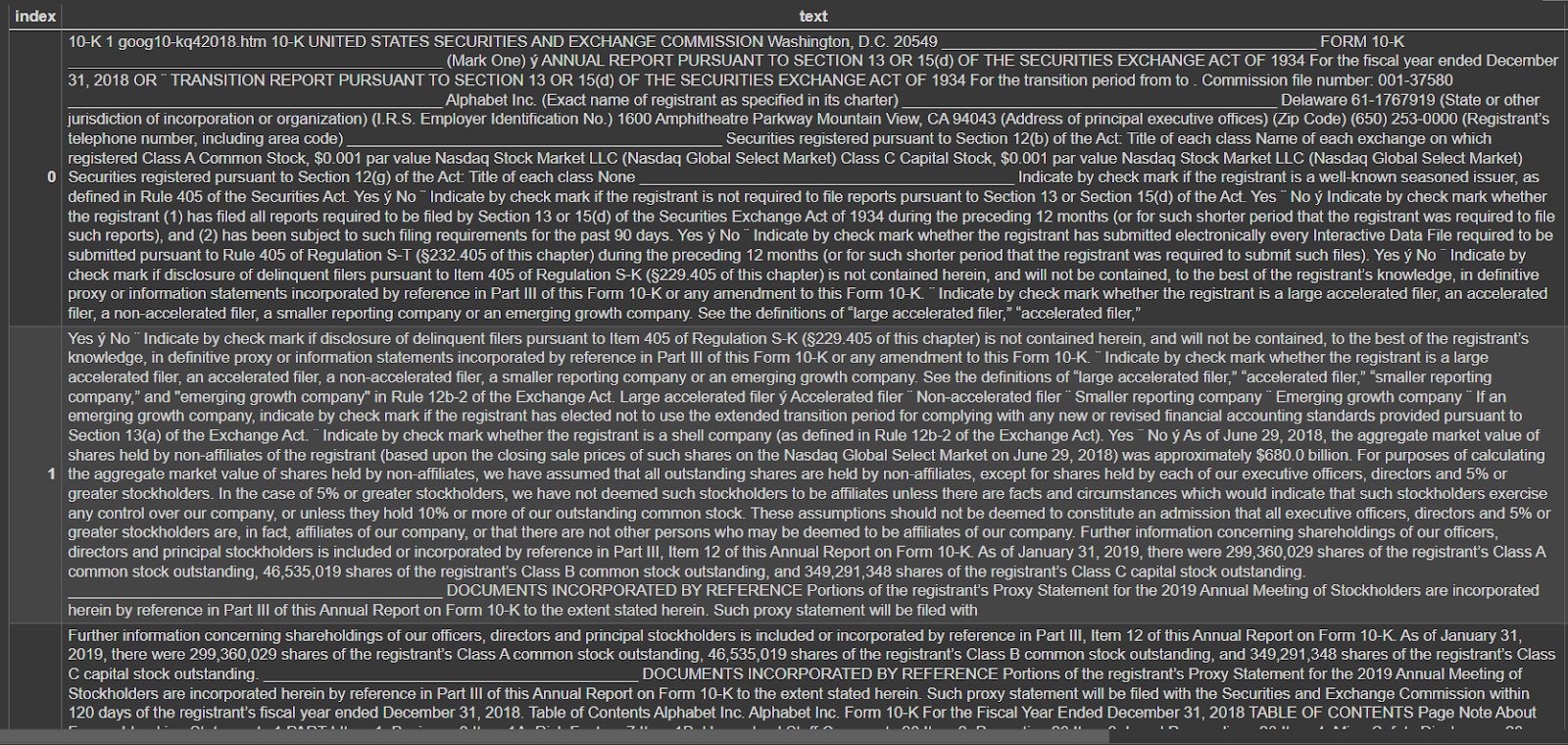

SEC filings are an excellent example of complicated documents in the finance industry. Every year thousands of companies file their forms, and even more people spend a great deal of time studying them to extract valuable information. Filings such as Form 10-K are highly valuable to financial planners, analysts, and fund managers, who use them to anticipate market movements and pick stocks to invest in.

In this article, we are going to build an AI system that can analyze and extract valuable information from these forms.

NLP for Document Processing and Data Extraction

NLP has many use cases in the finance world. As mentioned before, a large number of paper documents need to be processed, and AI and NLP pipelines can be deployed to handle them with ease.

Here are some use cases where AI and NLP can help:

Analyzing Financial Data

Financial data is hard to analyze, and financial analysts spend a lot of time and resources on it. NLP techniques can be used to extract relevant information from large volumes of unstructured financial documents, such as annual reports, SEC filings, and news articles.

These techniques can help financial analysts quickly identify trends, insights, and risks in financial data, and make better-informed decisions.

In this article, we will build an AI system on top of GPT-4 that is capable of analyzing large amounts of financial data.

Reducing Investment Risk

Fund managers and risk analysts are responsible for analyzing vast amounts of financial data to identify potential risks and opportunities. This is a major challenge in the space because it requires a great deal of qualitative and quantitative data analysis.

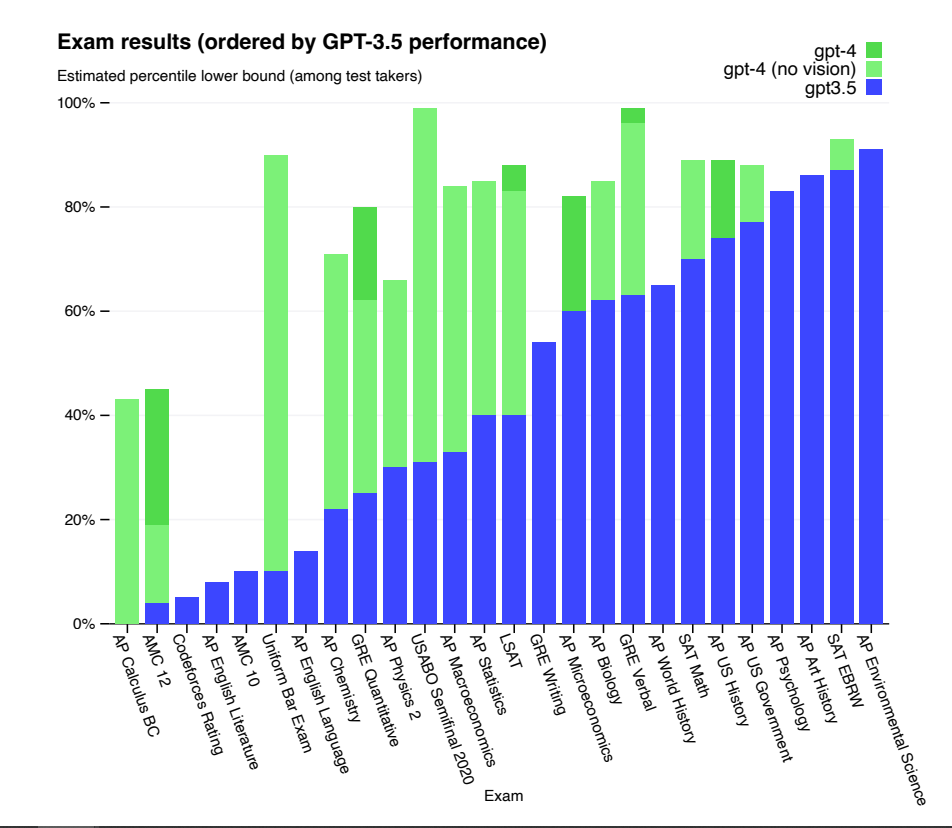

But recent advances have shown that we can use AI for this. The GPT-4 Technical Report showed that the model has “reasoning capabilities”, meaning it can follow logical chains and make reasonable decisions out of the box, often without further prompt engineering or modification.

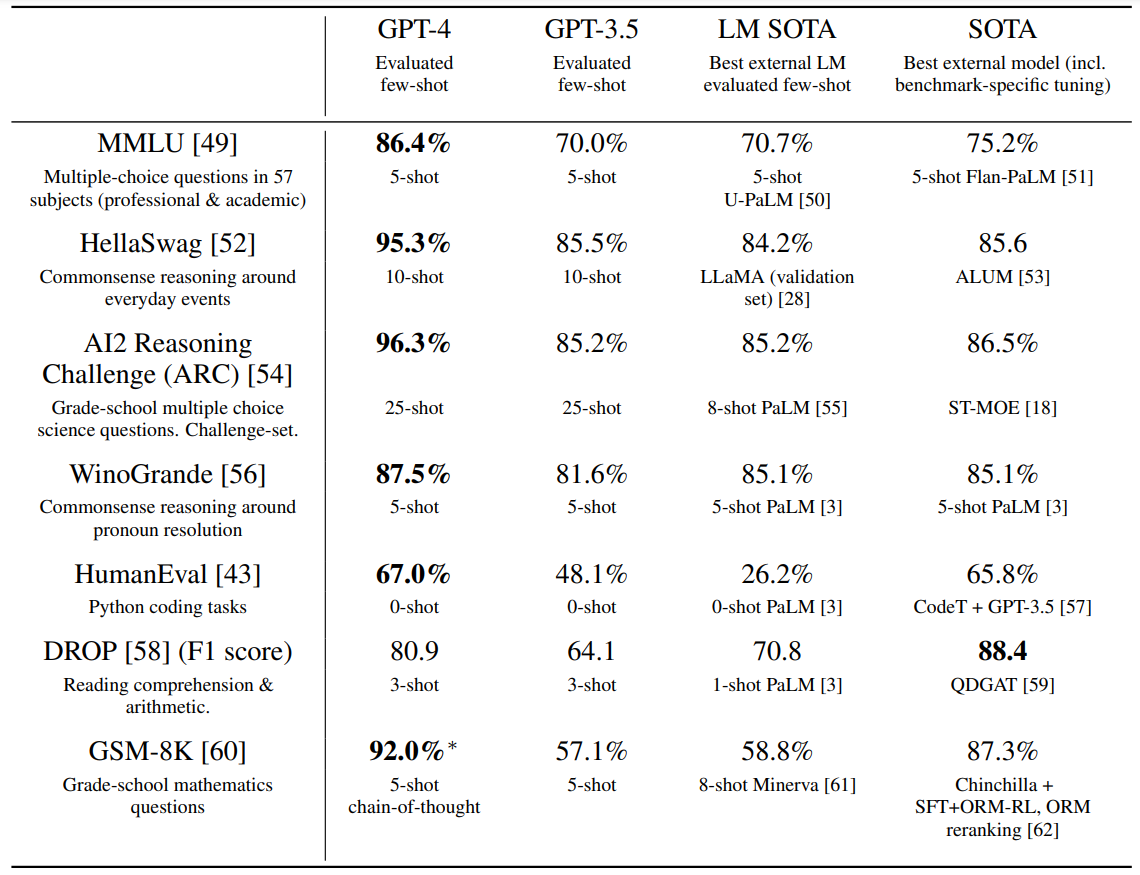

The chart above depicts GPT-4’s performance on exams and tests.

It should be noted that OpenAI used specific datasets and techniques to enhance GPT-4’s reasoning capabilities. The table below shows GPT-4’s performance on academic benchmarks, where it outperformed the previous state-of-the-art results on nearly all of them.

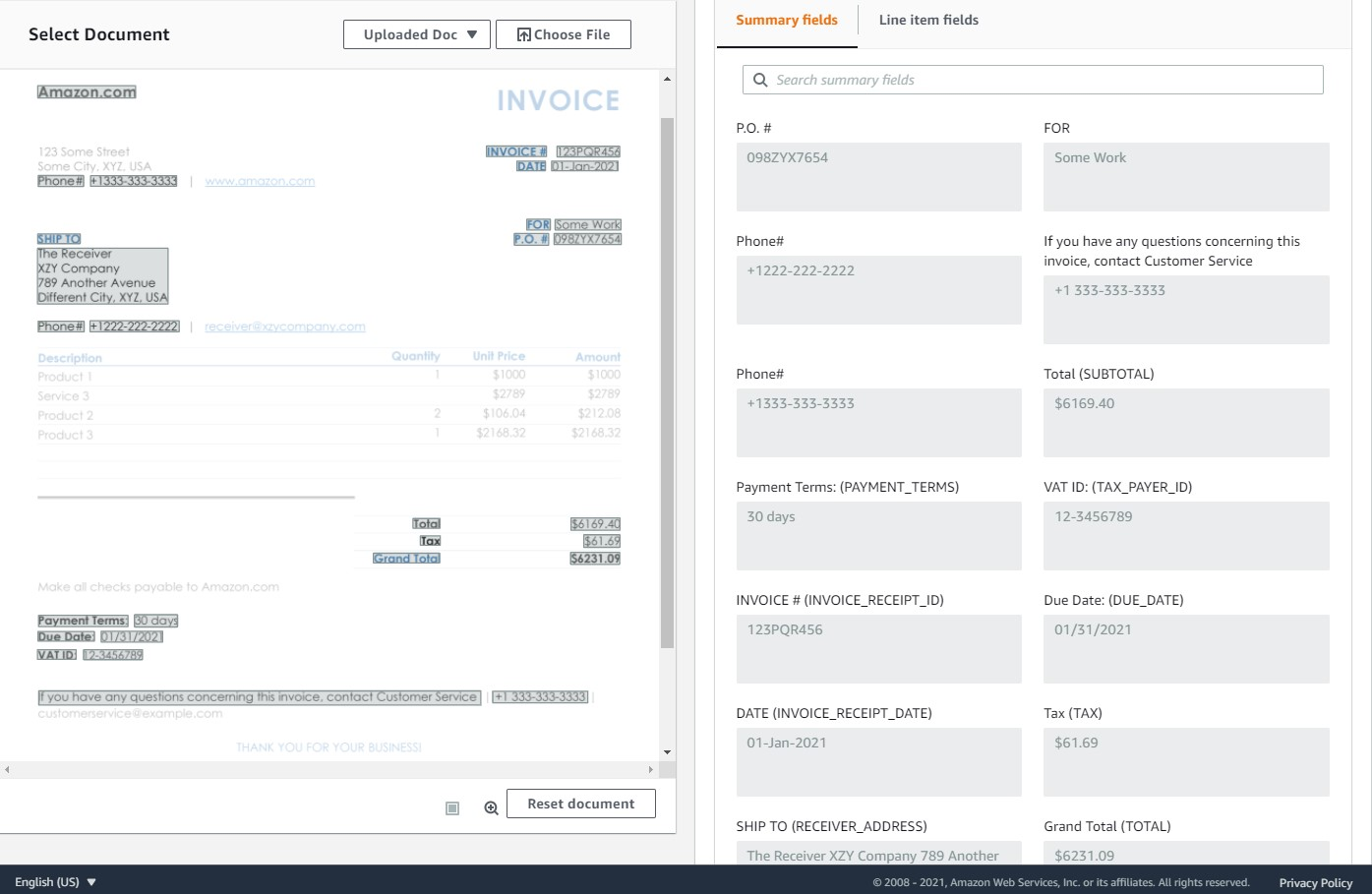

Extracting Data from Invoices

A big issue that most accountants face is managing a large number of invoices. NLP can help extract the relevant information from these documents and digitize them within minutes.

Amazon Textract, for example, can process invoices and extract key-value pairs from them. This data can then be used for further analysis.

Using GPT-4 for Automated Data Extraction from SEC Filings

As mentioned before, in this tutorial we are going to build an AI system with GPT-4 that is capable of extracting valuable data from financial reports and answering questions about them.

We can break the process down into four steps:

- Data pre-processing and ingestion

- Data extraction from the documents

- Providing data to GPT-4

- Asking GPT-4 to analyze the data and provide answers

Let's go through these steps and write code for them.

Data pre-processing

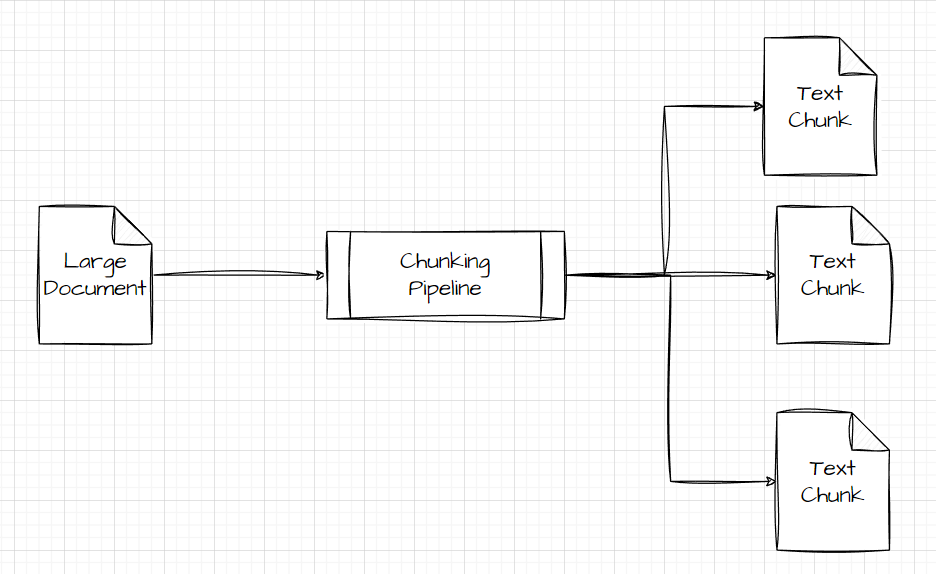

Data pre-processing is a crucial step here. SEC filings are massive, often running to tens of thousands of words. The models available to us can process at most a few thousand tokens per request (up to 8K). GPT-4 also has a variant that can process 32K tokens, but it had not been released to the public at the time of writing.

To solve this problem, we have to split the large document into smaller chunks, which can then be passed to GPT-4 or ChatGPT to answer questions.

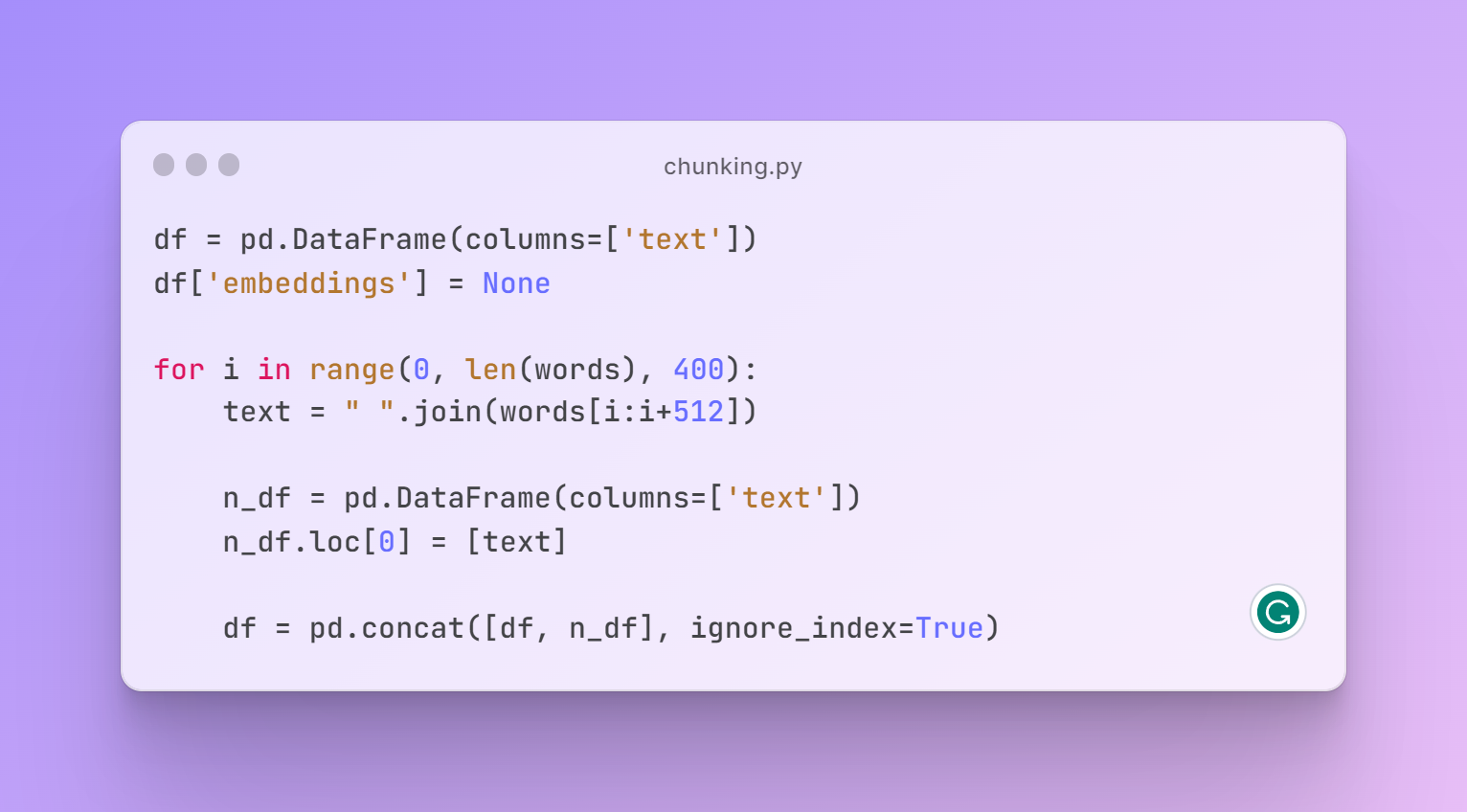

Here’s the code for conversion:

This code will take in a list of words and convert it into chunks of 512 words with an overlap of 112 words.
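A minimal sketch of this kind of chunking is shown below. It is an illustration rather than the original implementation: the function name `chunk_words` and its parameters are assumptions, and only the 512-word chunk size and 112-word overlap come from the text above.

```python
def chunk_words(words, chunk_size=512, overlap=112):
    """Split a list of words into overlapping chunks of at most chunk_size words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    # Each chunk starts this many words after the previous one,
    # so consecutive chunks share exactly `overlap` words.
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the document
    return chunks


# Example with a stand-in document of 1,000 numbered words.
sample_words = [f"w{i}" for i in range(1000)]
sample_chunks = chunk_words(sample_words)
print(len(sample_chunks), "chunks")
```

If pandas is installed, the resulting list can be loaded into a data frame with `pd.DataFrame({"text": sample_chunks})`.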

Finally, we can load the chunks into a pandas data frame and display it to inspect the result.

If you look closely, you will see that some of the text is shared between the first and second chunks. This is intentional: the overlap ensures that a passage cut off at the end of one chunk appears in full in the next.

Data Extraction

Now that we have successfully transformed the data, we can use the chunks to answer user queries. But we still need to tackle the problem of finding the relevant chunks. That is the core of the issue.

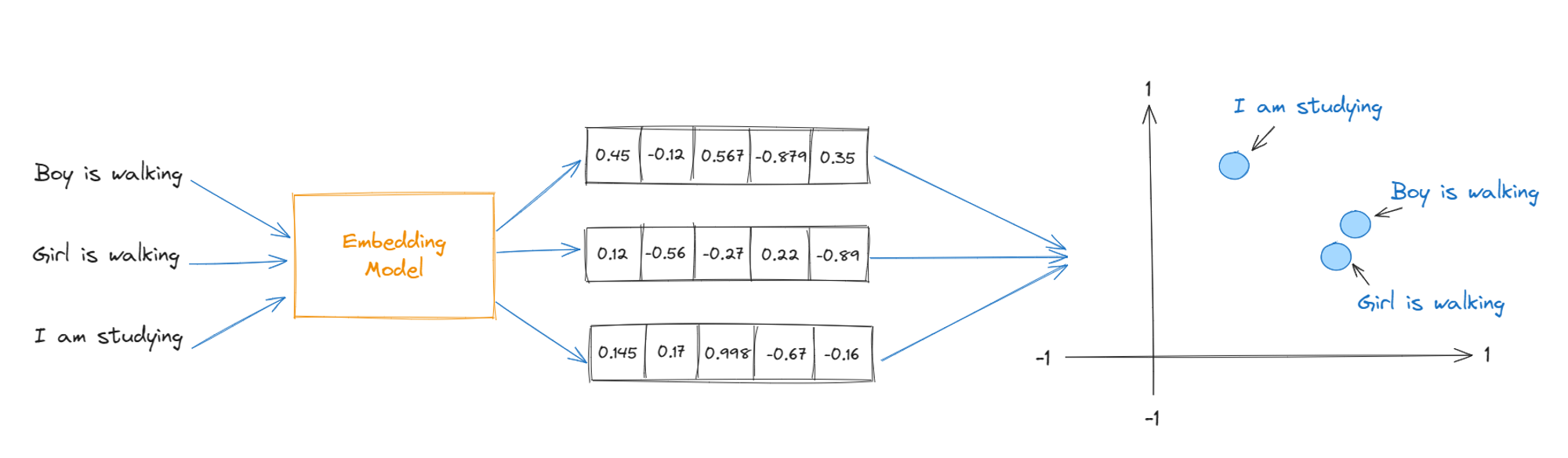

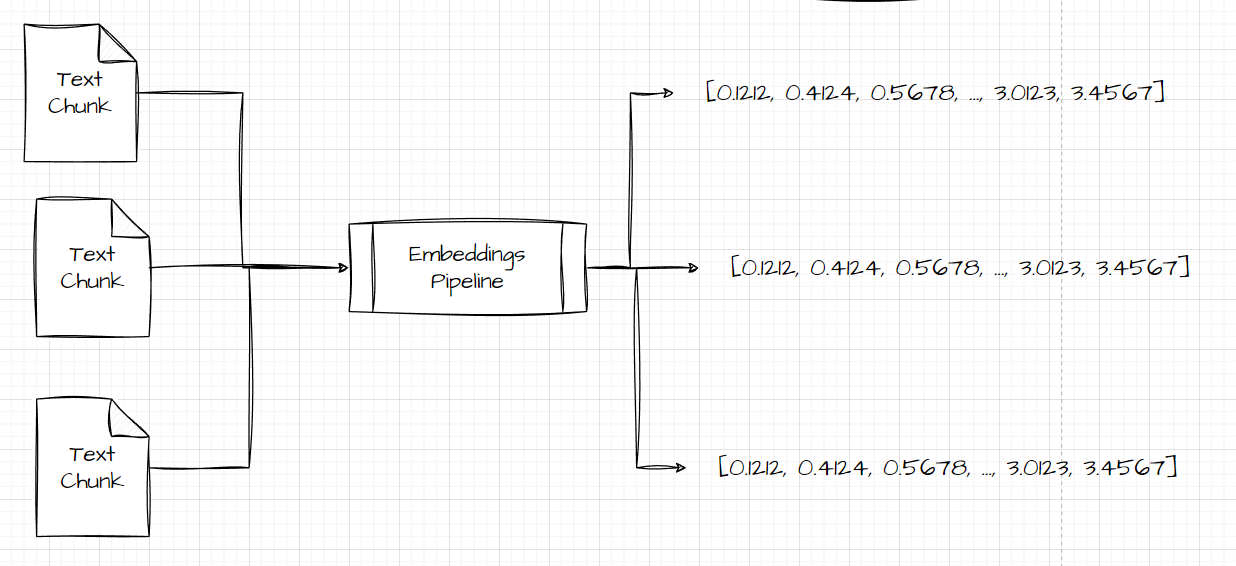

Once we have the relevant chunks we can then provide them to GPT4 to answer questions based on the chunks. To find relevant chunks of data we are going to use something called embedding vectors.

These embedding vectors contain semantic information about the text, and they have a useful property: texts with similar meaning and context lie closer together in vector space than texts with different meaning and context. This property allows us to use them to find the documents most relevant to a query.

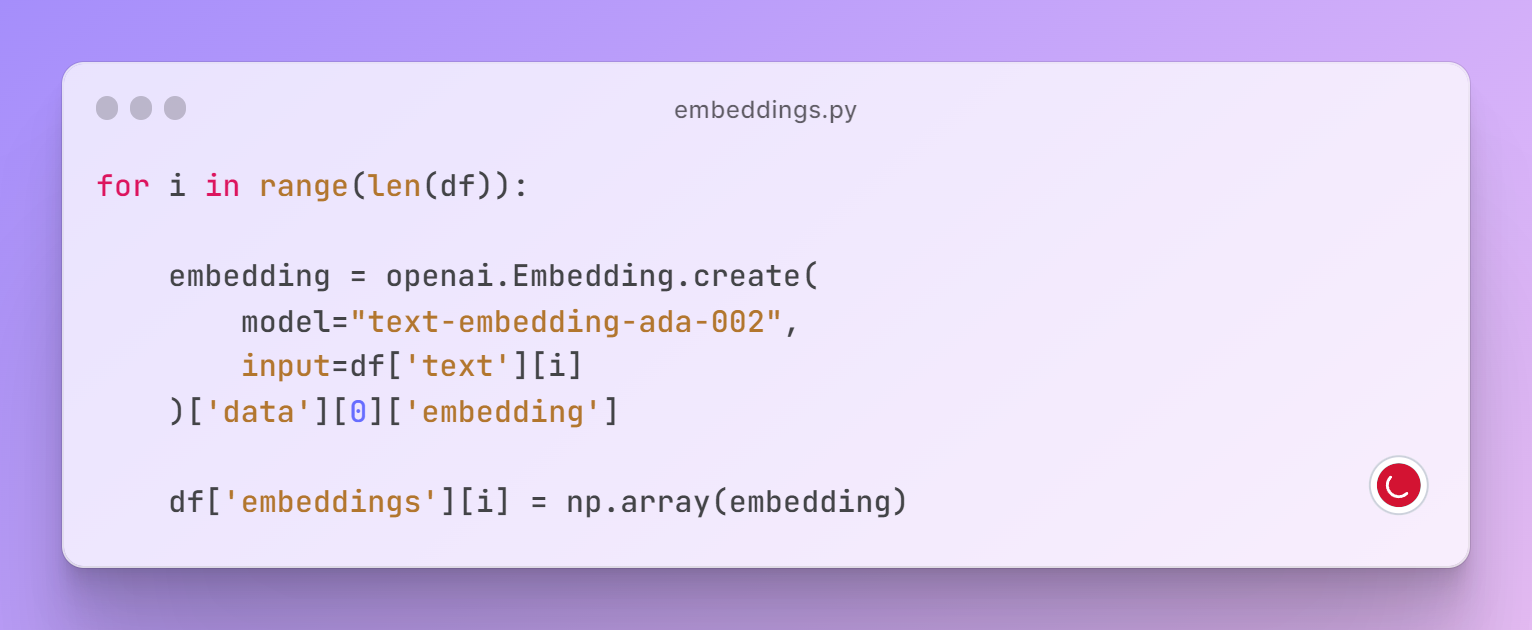

We are going to use the OpenAI embeddings API to compute them:

This will return a data frame of text chunks with their embeddings.
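The embedding step could look roughly like the sketch below. It assumes the `openai` Python package (v1 or later) and an `OPENAI_API_KEY` environment variable. The model name `text-embedding-ada-002`, the helper names, and the batch size are assumptions chosen for illustration, not details from the original code. The embedding function is passed in as a parameter so that the batching logic can be tried without calling the API.

```python
def embed_with_openai(texts, model="text-embedding-ada-002"):
    """Embed a list of strings with the OpenAI embeddings endpoint.

    The model name is an assumption; substitute the one you have access to.
    """
    from openai import OpenAI  # imported lazily so the rest runs without the package

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.embeddings.create(model=model, input=texts)
    return [item.embedding for item in response.data]


def embed_chunks(chunks, embed_fn=embed_with_openai, batch_size=100):
    """Return one {"text", "embedding"} record per chunk, embedding in batches."""
    records = []
    for start in range(0, len(chunks), batch_size):
        batch = chunks[start:start + batch_size]
        vectors = embed_fn(batch)  # one vector per text, in the same order
        for text, vector in zip(batch, vectors):
            records.append({"text": text, "embedding": vector})
    return records
```

Calling `pd.DataFrame(embed_chunks(chunks))` then gives a data frame with one `text` column and one `embedding` column.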

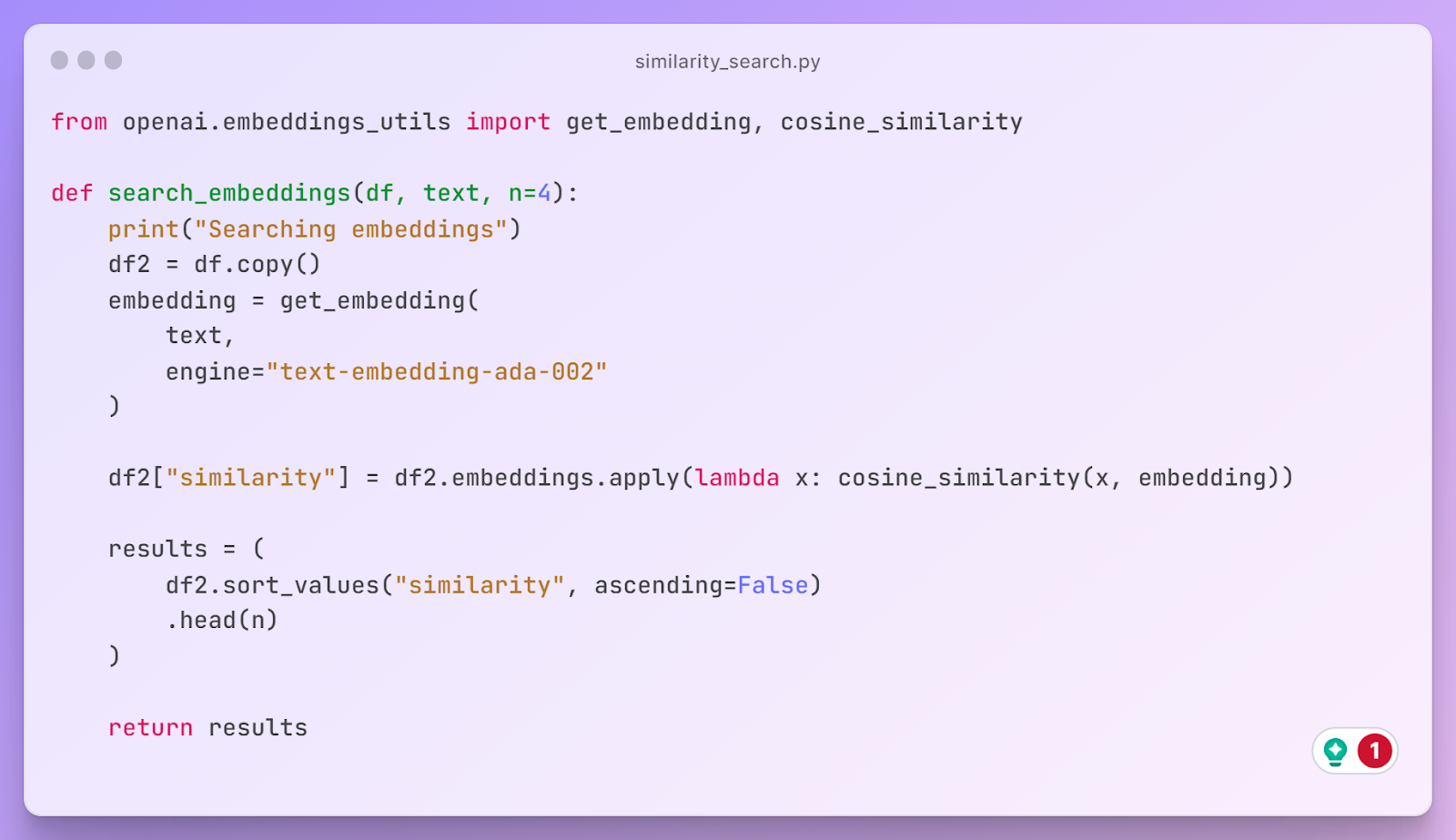

Once we have this, we can convert the query into an embedding and find the chunk embeddings closest to it. We will use cosine similarity as the measure of closeness.
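As a rough illustration, the ranking step can be written in plain Python. The sketch assumes each chunk is stored as a dictionary with `text` and `embedding` keys; that layout and the function names are assumptions made for this example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_chunks(query_embedding, records, k=3):
    """Return the k records whose embeddings are most similar to the query embedding."""
    ranked = sorted(
        records,
        key=lambda r: cosine_similarity(query_embedding, r["embedding"]),
        reverse=True,  # highest similarity first
    )
    return ranked[:k]
```

For a full filing with thousands of chunks, the same computation is usually vectorized with NumPy, but the logic is unchanged.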

Giving Data to GPT4

Now that we have obtained the relevant text chunks using embedding vectors, it's time to feed this data to GPT-4 for processing and generating responses to user queries. GPT-4 is capable of reasoning and understanding complex texts. This enhanced capability allows us to work with more complicated data and extract more comprehensive information.

To provide the data to GPT-4, we need to convert the closest embeddings back to the original text chunks.

Once we have obtained the text chunks, we can concatenate them into a single context and feed it to GPT-4. Depending on the desired level of accuracy and detail, we can reduce or increase the number of chunks given to the model.

It is important to note that GPT-4's larger context window lets it take in more text per request, enabling a more comprehensive analysis of the provided chunks. This opens up possibilities for deeper insights and more detailed responses to complex queries.

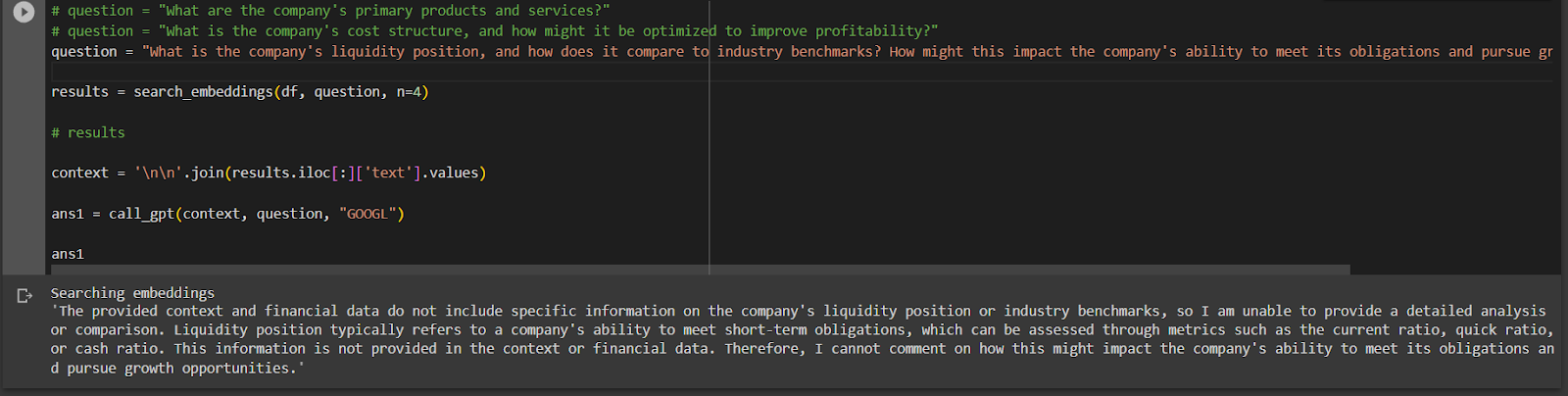

We use this code to fetch the relevant context and give it to the GPT model
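One way to assemble the context is sketched below, under the assumption that the chunks are already sorted from most to least relevant. The function name, the separator, and the word budget are illustrative choices rather than values from the original code.

```python
def build_context(ranked_chunks, max_chunks=3, max_words=3000, separator="\n\n---\n\n"):
    """Join the most relevant chunks into one context string.

    ranked_chunks must be ordered from most to least relevant. The first chunk
    is always kept; later chunks are added until the word budget is reached.
    """
    selected = []
    words_used = 0
    for chunk in ranked_chunks[:max_chunks]:
        n_words = len(chunk.split())
        if selected and words_used + n_words > max_words:
            break  # adding this chunk would exceed the budget
        selected.append(chunk)
        words_used += n_words
    return separator.join(selected)
```

Raising `max_chunks` gives the model more material to work with at the cost of a longer prompt. Lowering it keeps requests short but may leave out relevant passages.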

Answering Queries

Once we have fetched the context and constructed a prompt using it, we are ready to answer the user query using GPT-4. To do this we will pass the prompt and the query to the model.

This is what the prompt looks like:

```

You are a finance expert. You will be given context from SEC filing Form-10K and will be asked to answer questions based on that.

You will also be given finance data of the company. Use finance data to answer and advise.

Only answer the question if it can be answered based on the context, say "I do not know" for anything else at all. This is very important.

Do not provide any extra advice unless asked to.

Analyze the context to answer properly.

Note: Give concise answers. Don't do any math calculations unless necessary. Be very analytical and in-depth.

Current date: <DATE>

Finance data:

<FINANCE DATA>

Context:

<CONTEXT>

```

Here, <DATE> is replaced by the current date, <FINANCE DATA> by the latest company finance data fetched from the Financial Modeling API, and <CONTEXT> by the chunks retrieved through semantic search.

With this prompt, the model is able to answer queries based on the proper context. Here are some examples.
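Filling in the template and sending it to the model might look like the sketch below. It assumes the `openai` Python package (v1 or later). The helper names, the ISO date format, the `gpt-4` model name, and the zero temperature are assumptions for illustration. The finance data is taken as a pre-formatted string because the original does not show how it is fetched.

```python
from datetime import date

PROMPT_TEMPLATE = """You are a finance expert. You will be given context from SEC filing Form-10K and will be asked to answer questions based on that.
You will also be given finance data of the company. Use finance data to answer and advise.
Only answer the question if it can be answered based on the context, say "I do not know" for anything else at all. This is very important.
Do not provide any extra advice unless asked to.
Analyze the context to answer properly.
Note: Give concise answers. Don't do any math calculations unless necessary. Be very analytical and in-depth.

Current date: <DATE>

Finance data:
<FINANCE DATA>

Context:
<CONTEXT>"""


def fill_prompt(template, finance_data, context, today=None):
    """Substitute the three placeholders in the prompt template."""
    today = today or date.today()
    return (
        template.replace("<DATE>", today.isoformat())
        .replace("<FINANCE DATA>", finance_data)
        .replace("<CONTEXT>", context)
    )


def ask_gpt4(system_prompt, question, model="gpt-4"):
    """Send the filled prompt and the user question to the chat completions endpoint."""
    from openai import OpenAI  # imported lazily; needs OPENAI_API_KEY to be set

    client = OpenAI()
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
        ],
        temperature=0,  # assumption: keep answers as deterministic as possible
    )
    return response.choices[0].message.content
```

The instructions go in the system message and the user's question in the user message, so the same filled prompt can be reused for several questions about one filing.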

Q1: What is the company's liquidity position, and how does it compare to industry benchmarks? How might this impact the company's ability to meet its obligations and pursue growth opportunities?

A: The provided context and financial data do not include specific information on the company's liquidity position or industry benchmarks, so I am unable to provide a detailed analysis or comparison. Liquidity position typically refers to a company's ability to meet short-term obligations, which can be assessed through metrics such as the current ratio, quick ratio, or cash ratio. This information is not provided in the context or financial data. Therefore, I cannot comment on how this might impact the company's ability to meet its obligations and pursue growth opportunities.

Q2: What are the company's primary products and services?

A: The company's primary products and services include:

1. Google Search: This includes revenues generated on Google search properties and other Google owned and operated properties like Gmail, Google Maps, and Google Play.

2. YouTube: This includes revenues generated on YouTube properties.

3. Google Network: This includes revenues generated on Google Network properties participating in AdMob, AdSense, and Google Ad Manager.

4. Google Cloud: This provides enterprise customers with infrastructure and platform services as well as communication and collaboration tools.

5. Android and Chrome: These are platforms that they continue to invest in.

6. Hardware: The company also invests heavily in hardware, including smartphones, home devices, and wearables.

7. Google Play and Google Photos: Platforms for discovery and creation of digital content.

8. AI Technology: The company uses AI in their products and services to understand and analyze data more efficiently.

9. Google Services: This includes ads, Android, Chrome, hardware, Gmail, Google Drive, Google Maps, Google Photos, Google Play, Search, and YouTube.

Q3: What is the company's cost structure, and how might it be optimized to improve profitability?

A: The company's cost structure is primarily made up of:

1. Cost of Revenues: This includes Traffic Acquisition Costs (TAC) and other costs of revenues such as data center costs, operations costs, and hardware costs. The total cost of revenues for 2022 was $126,203 million, an increase from $110,939 million in 2021.

2. Research and Development Expenses: These expenses, which increased from $31,562 million in 2021 to $39,500 million in 2022, are primarily driven by compensation expenses and third-party service fees.

3. Sales and Marketing Expenses: These expenses increased from $22,912 million in 2021 to $26,567 million in 2022, primarily due to an increase in compensation expenses and advertising and promotional activities.

4. General and Administrative Expenses: These expenses increased from $13,510 million in 2021 to $15,724 million in 2022, mainly due to an increase in compensation expenses and third-party services fees.

To optimize the cost structure and improve profitability, the company could consider the following strategies:

1. Optimize TAC: Since TAC makes up a significant portion of the cost of revenues, the company could seek to negotiate better terms with its distribution partners and Google Network partners.

2. Control R&D Expenses: While R&D is crucial for the company's innovation and growth, it's important to ensure that these investments are generating a good return. The company could consider optimizing its R&D spending by focusing on projects with the highest potential for profitable returns.

3. Streamline Sales and Marketing: The company could look for ways to make its sales and marketing efforts more efficient, such as by leveraging data analytics to target its advertising and promotional activities more effectively.

4. Manage General and Administrative Expenses: The company could look for ways to streamline its administrative functions, such as by automating certain tasks or renegotiating contracts with third-party service providers.

5. Workforce Optimization: As mentioned in the subsequent event, the company is planning a reduction of its workforce by approximately 12,000 roles which is expected to incur severance and related charges of $1.9 billion to $2.3 billion. This could potentially lead to cost savings in the long run.

Note: Any cost optimization strategies should be carefully considered to ensure they do not negatively impact the company's operations or future growth potential.

Want to build Finance AI products?

If you want to integrate AI pipelines into your finance products, reach out to us. We have built multiple finance-focused projects over the years and have integrated AI into them. We can train privacy-focused LLMs, build data analytics pipelines, and much more.

Integrating AI can remove these bottlenecks for you. If you have concerns about security or privacy, get on a free call with our CEO Rohan to discuss them.
