For over two decades, search engines have been the primary tool for online information discovery. However, with the Internet's rapid growth and the massive increase in available data, these engines are struggling to efficiently deliver relevant and useful results to users.

While traditional search engines are unlikely to become obsolete, their role is expected to evolve. They might primarily focus on "crawling" and "ranking" web content, complementing LLMs in sifting through and contextualizing data based on user queries.

LLMs are really good at lots of tasks involving language. But they don't do as well at search tasks, because the concepts these tasks rely on (queries, relevance, search intent) don't come up a lot in ordinary text. Even when we give LLMs clear instructions, they still struggle with these tasks, so they're not as helpful in this area as we'd like.

INTERS (INstruction Tuning datasEt foR Search) addresses this issue. It's a comprehensive dataset that covers 20 tasks within three core IR areas:

- Understanding queries

- Understanding documents

- Grasping the relationship between queries and documents.

This dataset is special because it combines 43 different datasets and uses hand-made templates. After fine-tuning on INTERS, popular LLMs like LLaMA, Mistral, and Phi get better at search tasks, especially at finding and retrieving information.

So why do we need this again? What’s the big deal anyway?

Large Language Models that are fine-tuned with instructions can learn new tasks they've never done before. But using this method for information retrieval (IR) tasks has been tough because there weren't good instruction datasets built for it.

Here's what's been done to solve this problem:

- INTERS focuses on three key parts of search: understanding what the search is about, getting what documents are saying, and figuring out how searches and documents are connected.

- This dataset has 43 different datasets in it and covers 20 kinds of search tasks.

- Each task has a hand-written description, and each dataset comes with 12 distinct templates. These are used to build training examples with no demonstrations (zero-shot) or just a few (few-shot).

The researchers tested INTERS by using it to fine-tune openly available LLMs. They saw the LLMs get better at different search tasks. This wasn't just for tasks seen during training but also for new tasks they hadn't seen before (out-of-domain tasks). They also looked into how using different instructions and amounts of data changed how well the LLMs could learn and do new things.

Why this matters:

- The team grouped search tasks into three types: understanding the query, understanding documents, and understanding how queries and documents relate.

- This way of grouping helps train models better.

INTERS is a big deal because it's made just for search tasks. It has 20 search tasks and uses data from 43 popular datasets. It's rich and diverse because it has manually written templates and descriptions for each task.

Instruction tuning for Large Language Models

Instruction tuning is a powerful technique that enhances the performance of LLMs on various search tasks. Basically, it's a method of fine-tuning these models using straightforward language instructions, making it relatively easy for the models to learn and adapt. While it shares similarities with other training methodologies, instruction tuning sets itself apart by emphasizing learning from instructions.

The beauty of instruction tuning lies in its dual impact. It not only enhances the performance of LLMs on tasks they're directly trained for but also equips them with the ability to tackle new, unseen tasks. This dual benefit is a significant advancement in the field of language models and search technology.

For instance, imagine a language model trained to understand and generate responses for queries related to weather forecasts. With instruction tuning, this model could not only become better at providing accurate weather information but also gain the ability to answer queries related to a completely new domain, say, stock market trends, which it was never trained on. This capacity to handle out-of-domain tasks is a game-changer and greatly expands the potential applications of LLMs.

This research is all about how a technique called instruction tuning can be super useful when it comes to search tasks. It allows LLMs to handle not just the tasks they're trained on, but also ones they've never seen before.

Search tasks, which are the main focus of this research, are a bit different from the usual language tasks. They're all about queries (the questions or terms you're searching for) and documents (the information you're pulling from). The researchers broke these tasks down into three parts: understanding what the query is asking for, understanding what the documents are saying, and understanding how queries and documents relate to each other.

To help LLMs get better at these tasks, the researchers picked out the right datasets for each category and even created templates by hand, resulting in something they call the INTERS dataset.

In a nutshell, instruction tuning is a pretty big deal in the world of AI and search technology. It helps LLMs to understand and respond to search-related instructions more effectively, opening up possibilities for them to be used in more complex search scenarios. This is a big step forward for language models and could change the way we use AI to get better and more accurate search results.

Instruction tuning for Search

So, what's cool about this method is that it lets LLMs take care of tasks they're trained for, and even those they've never come across before. The research really homes in on search tasks, which are pretty different from your usual NLP tasks in terms of what they're aiming for and how they're structured.

When we're talking about search tasks here, we're really looking at two big parts: queries and documents. The research breaks tasks down into three areas:

- Getting what the query means,

- Getting what documents are saying, and

- Understanding how queries and documents are connected.

These are super important for making LLMs better at search. The research involves choosing datasets for each area and making templates for each one by hand, which leads to the birth of the INTERS dataset.

Examining the Tasks and Datasets

Query Understanding

Query understanding involves interpreting user-initiated requests for information, usually consisting of keywords or questions. The aim is to improve LLMs' ability to understand the semantics of queries and capture user search intent. The tasks include:

- Query Description: Describing documents relevant to user-provided queries, using datasets like GOV2, TREC-Robust, TREC-COVID, and FIRE.

- Query Expansion: Elaborating an original query into a more detailed version, using datasets like GOV2, TREC-Robust, and others.

- Query Reformulation: Refining user-input queries for better search engine understanding, using datasets like CODEC, QReCC, and CANARD.

- Query Intent Classification: Identifying the intent behind a query, using datasets like ORCAS-I, MANTIS, and TREC-Web.

- Query Clarification: Clarifying unclear or ambiguous queries, using datasets like MIMICS, MIMICS-Duo, and ClariQ-FKw.

- Query Matching: Determining if different queries convey the same meaning, using the MSRP dataset.

- Query Subtopic Generation: Identifying various aspects of the initial query, using TREC-Web.

- Query Suggestion: Predicting the next likely query in a search session, using the AOL dataset.

Document Understanding

Document understanding is about how an IR system interprets and comprehends the content and context of documents. The tasks include:

- Fact Verification: Assessing if a claim is supported by evidence, using datasets like FEVER, Climate-FEVER, and SciFact.

- Summarization: Creating concise summaries of documents, using datasets like CNN/DM, WikiSum, and Multi-News.

- Reading Comprehension: Generating answers from given contexts, using datasets like SQuAD, HotpotQA, and MS MARCO.

- Conversational Question-Answering: Responding to a series of interrelated questions based on context, using datasets like CoQA and QuAC.

Query-Document Relationship Understanding

This involves determining the match or satisfaction of a user’s query with a document. The primary task is document reranking, using datasets like MS MARCO, the BEIR benchmark, and HotpotQA.
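To keep the grouping straight, here is a minimal sketch of the taxonomy as a plain Python dictionary. It only contains the tasks and datasets named in this article (the full INTERS collection has more), and the key names and helper function are made up for illustration.

```python
# Task taxonomy as listed in this article: category -> task -> example datasets.
# Key names are illustrative; only datasets named in the article are included.
INTERS_TAXONOMY = {
    "query_understanding": {
        "query_description": ["GOV2", "TREC-Robust", "TREC-COVID", "FIRE"],
        "query_expansion": ["GOV2", "TREC-Robust"],  # "and others" in the article
        "query_reformulation": ["CODEC", "QReCC", "CANARD"],
        "query_intent_classification": ["ORCAS-I", "MANTIS", "TREC-Web"],
        "query_clarification": ["MIMICS", "MIMICS-Duo", "ClariQ-FKw"],
        "query_matching": ["MSRP"],
        "query_subtopic_generation": ["TREC-Web"],
        "query_suggestion": ["AOL"],
    },
    "document_understanding": {
        "fact_verification": ["FEVER", "Climate-FEVER", "SciFact"],
        "summarization": ["CNN/DM", "WikiSum", "Multi-News"],
        "reading_comprehension": ["SQuAD", "HotpotQA", "MS MARCO"],
        "conversational_qa": ["CoQA", "QuAC"],
    },
    "query_document_relationship": {
        "document_reranking": ["MS MARCO", "BEIR", "HotpotQA"],
    },
}


def categories_using(dataset):
    """Return the set of categories in which a dataset appears."""
    return {
        category
        for category, tasks in INTERS_TAXONOMY.items()
        for names in tasks.values()
        if dataset in names
    }
```

A lookup like `categories_using("MS MARCO")` shows that the same source dataset can feed more than one category, which is one reason the per-task descriptions matter.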

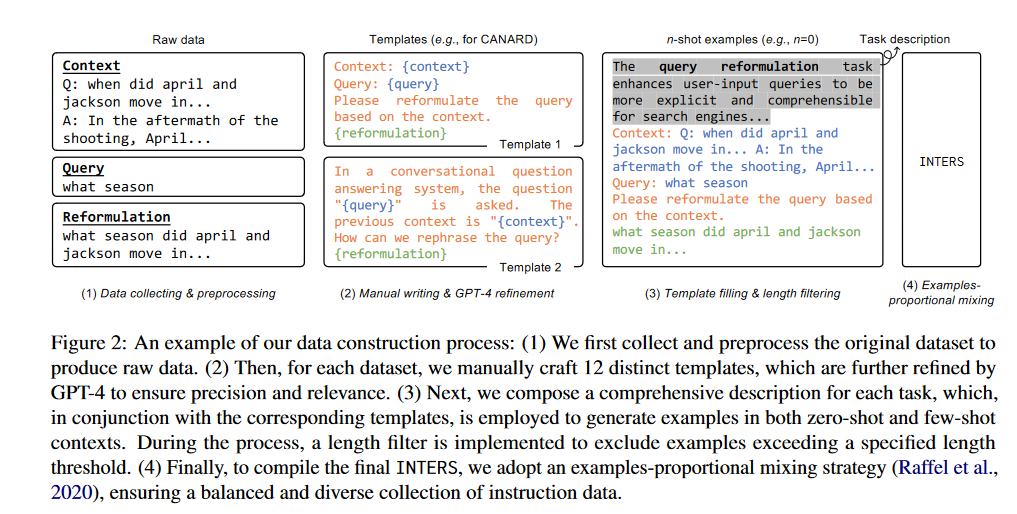

Construction of INTERS

Creating the INTERS dataset helps make big language models better at search tasks. This important process has four main steps. The study explains how this process makes sure LLMs can handle the tough job of finding information.

Step-by-Step Construction of INTERS

1. Preprocessing

The first step involves downloading all the datasets from publicly available resources. This stage is crucial for filtering out unnecessary attributes and invalid data samples. Once this is done, the datasets are converted into the JSONL format, setting the stage for further processing.
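As a rough sketch of what this step might look like, the function below drops unneeded attributes, skips invalid samples, and writes one JSON object per line. The field names and the validity rule (no missing or empty fields) are assumptions for illustration, not the authors' actual preprocessing code.

```python
import json


def to_jsonl(samples, keep_fields, path):
    """Write valid samples to a JSONL file, keeping only the chosen fields.

    Returns the number of records written.
    """
    written = 0
    with open(path, "w", encoding="utf-8") as f:
        for sample in samples:
            record = {key: sample.get(key) for key in keep_fields}
            # Assumed validity rule: skip samples with a missing or empty field.
            if any(value is None or value == "" for value in record.values()):
                continue
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            written += 1
    return written
```

Calling `to_jsonl(raw_samples, ["query", "answer"], "train.jsonl")` would keep just those two attributes for every sample that has both.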

2. Template Collection

Inspired by the design of FLAN, the researchers craft 12 distinct templates for each dataset. These templates, written in natural language, describe the specific task associated with each dataset. To boost the diversity of the templates, up to two "inverse" templates are integrated per dataset. For instance, in the query expansion task, templates prompting for simplifying a query are included. Additionally, detailed descriptions for each task are provided. These descriptions not only offer a deeper understanding of the task’s objectives but also establish a connection among datasets under the same task category.
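To make the idea of regular and "inverse" templates concrete, here is a small sketch for the query expansion task. The template wording and the `query` and `expansion` field names are invented for illustration; the real INTERS templates are hand-written by the authors and are not reproduced here.

```python
# Hypothetical templates for a query expansion dataset.
QUERY_EXPANSION_TEMPLATES = [
    {"input": "Expand the query into a more detailed version.\nQuery: {query}",
     "output": "{expansion}"},
    {"input": "Rewrite '{query}' so it spells out what the user is looking for.",
     "output": "{expansion}"},
    # "Inverse" template: the direction is flipped (simplify instead of expand).
    {"input": "Simplify this detailed query into a short one.\nQuery: {expansion}",
     "output": "{query}"},
]


def render(template, sample):
    """Fill a template with one data sample and return (model input, target)."""
    return template["input"].format(**sample), template["output"].format(**sample)
```

With the inverse template, the same data sample is reused with input and target swapped, so no extra annotation is needed to add variety.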

3. Example Generation

Each data sample is combined with its task description and a randomly chosen template to create an n-shot example (n is between 0 and 5 in the experiments). For few-shot examples, the demonstrations are picked randomly from the training set and separated by special tokens. To improve the variety of training data, the demonstrations either reuse the same template or get randomly chosen ones. A length filter makes sure examples stay within the learning range of LLMs and leaves out any that are too long.
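The generation step could be sketched roughly as follows. This is an approximation under stated assumptions: the separator string stands in for the special tokens, the length filter counts words rather than model tokens, the 50/50 choice between reusing one template and drawing a fresh one per demonstration is a guess, and the template format (`input` and `output` keys) is hypothetical.

```python
import random

SEPARATOR = "\n\n"  # stand-in for the special separator tokens (assumption)


def build_example(description, templates, sample, train_pool, n_shots,
                  max_words=2048, rng=random):
    """Return (prompt, target), or None when the prompt exceeds max_words."""
    main = rng.choice(templates)
    # Either reuse one template for every demonstration or draw one per demo.
    same_template = rng.random() < 0.5
    demos = []
    for demo in rng.sample(train_pool, n_shots):
        t = main if same_template else rng.choice(templates)
        demos.append(t["input"].format(**demo) + "\n" + t["output"].format(**demo))
    prompt = SEPARATOR.join([description, *demos, main["input"].format(**sample)])
    # Crude length filter: counts whitespace-separated words, not model tokens.
    if len(prompt.split()) > max_words:
        return None
    return prompt, main["output"].format(**sample)
```

Setting `n_shots=0` gives a zero-shot example made of just the task description and the filled-in template.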

4. Example Mixture

Finally, to compile INTERS, examples are randomly selected from the entire collection until a total of 200,000 examples is reached. To maintain balance among the different sizes of datasets, a limit of 10,000 training examples per dataset is set. A mixing strategy is applied to prevent larger datasets from having a dominant influence.
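A simple way to picture the mixture step is to cap each dataset and then sample from the pooled examples. The sketch below does exactly that; the article does not spell out the mixing strategy in detail, so treat the uniform sampling over the capped pool as an assumption rather than the authors' exact procedure.

```python
import random


def mix_examples(examples_by_dataset, per_dataset_cap=10_000, total=200_000, seed=0):
    """Cap each dataset, pool the examples, and draw up to `total` at random."""
    rng = random.Random(seed)
    pool = []
    for name, examples in examples_by_dataset.items():
        # Cap each dataset so that large ones cannot dominate the mixture.
        if len(examples) > per_dataset_cap:
            examples = rng.sample(examples, per_dataset_cap)
        pool.extend((name, example) for example in examples)
    rng.shuffle(pool)
    return pool[:total]
```

Because sampling happens after the cap, a dataset with a million examples has the same weight in the mix as one with exactly 10,000.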

Sooo what gives?

Key Observations from Fine-Tuning with INTERS

Superiority of Larger Models

It's often seen that bigger models work better than smaller ones. For instance, LLaMA-7B and Mistral-7B perform better than smaller models like Minima-3B and Falcon-1B. Interestingly, in tasks like rewriting queries and summarizing, bigger models without any special tuning (like LLaMA-7B-Chat) can do better than smaller models that have been specially tuned with INTERS (like INTERS-Falcon on GECOR).

Fine-Tuning Smaller Models for Specific Tasks

A fascinating discovery is that the smaller Minima model, when fine-tuned with INTERS, performs better than larger models in tasks like query suggestion, even though it has fewer parameters (3B vs. 7B). This implies that refining smaller models can be a smart and cost-saving approach for certain tasks, making it a good choice when resources are limited.

Pre-Fine-Tuning Advantages

Before fine-tuning, the LLaMA-Chat model, built for dialogues, performed better than the basic LLaMA model. This is because LLaMA-Chat is better at following instructions and doing tasks. But after tuning with INTERS, the performance difference between the two models gets smaller. This shows that instruction tuning can help a model that wasn't built for following instructions perform just as well.

The Impact of Templates on LLMs

Comparing Performance: With and Without Templates

To understand the role of these templates, researchers compared the performance of models trained with and without them. In the 'no template' setup, keywords were retained to indicate different parts of the input, while the full template setup included detailed instructional templates. An example provided in the study illustrates this difference, where the 'no template' version only keeps basic cues like “Context: ... Query: ...” as the input.
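The difference between the two setups can be illustrated with a short sketch. Only the "Context: ... Query: ..." cues come from the study's example; the instruction wording in the full template is hypothetical.

```python
# Hypothetical full template; the instruction wording is an assumption.
FULL_TEMPLATE = (
    "Read the conversation context and rewrite the last query so that it "
    "can be understood on its own.\nContext: {context}\nQuery: {query}\n"
    "Rewritten query:"
)
# 'No template' variant: only the basic cues marking each input part remain.
NO_TEMPLATE = "Context: {context} Query: {query}"


def format_input(context, query, use_template=True):
    """Format one training input for the full or the 'no template' setup."""
    template = FULL_TEMPLATE if use_template else NO_TEMPLATE
    return template.format(context=context, query=query)
```

Both variants carry the same data, so any gap in results can be attributed to the instructional wording rather than to the content of the examples.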

Using INTERS Instructions During Zero-Shot Test

In alignment with the FLAN approach, the study utilizes INTERS instructions during zero-shot testing. This is crucial, especially in the 'no template' setup, as without templates, the model may not clearly understand the task it needs to perform. This approach provides a more controlled environment to assess the true impact of the templates.

Results and Future Work

The results, as shown in Figure 5 of the study, are telling. The ablation configuration, which lacked the instructional templates, yielded inferior results compared to the full INTERS setup. This indicates the significance of instructional templates in task learning for LLMs. It's a crucial finding that underscores the need for well-designed templates in instruction tuning.

Exploring the Impact of Data Volume

Experiments with Reduced Data Volumes

The researchers conducted experiments using only 25% and 50% of the data sampled from INTERS for fine-tuning. This approach allowed for a controlled observation of how reduced data volumes affect model performance.
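A reduced-volume run like this can be set up with a seeded random subsample. The sketch below is one plausible way to do it; the function name and the seeding choice are assumptions, not details from the study.

```python
import random


def subsample(examples, fraction, seed=0):
    """Return a random subset containing the given fraction of the examples."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    k = round(len(examples) * fraction)
    # A fixed seed keeps the subset reproducible across runs.
    return random.Random(seed).sample(examples, k)
```

For example, `subsample(train_examples, 0.25)` and `subsample(train_examples, 0.5)` would give the two reduced training sets to compare against the full one.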

Task-Specific Data Volume Effects

In an interesting twist, the study also investigates the effects of increasing data volume for specific tasks. By doubling the data for tasks in the query-document relationship understanding group, they were able to analyze the differential impact of data volume on various tasks.

Key Findings from the Study

General Enhancement with Increased Data

As depicted in Figure 7 of the study, the results show a clear trend: increasing the volume of instructional data generally boosts model performance. This finding underscores the fundamental principle that more data can lead to better learning and performance for LLMs.

Variability Across Tasks

However, an intriguing observation is that the sensitivity to data volume varies across different tasks. For instance, the query reformulation task shows consistent performance across different data volumes. This might be because query reformulation often involves straightforward modifications, which LLMs can learn relatively easily.

In contrast, tasks like reranking benefit significantly from increased data volumes: more reranking data leads to noticeable improvements in reranking performance. Yet this extra data doesn't have the same positive impact on other tasks like summarization, as observed on the XSum dataset.

Implications and Future Directions

So here's the lowdown - training LLMs is a tricky business. Not every task or process in the training routine gets affected in the same way or to the same extent by the amount of training data.

It's a bit of an eye-opener in the machine learning world, specifically when it comes to training these big language models. Sure, piling on more data for training these models is generally a good move, but it's not a magic fix for everything.

The specifics of each task, whether it's a simple classification or some complex reasoning, really matter. They play a big part in how this data impacts learning and how well the model performs. So, it seems like we need to get a bit more custom with how we handle data volume, based on the task we're dealing with, to get the most out of the learning process and model performance.

What’s in Part-2

INTERS is set to launch on the 15th of February. In conjunction with this release, we will provide a comprehensive guide on how to optimize and enhance the process of information retrieval. This guide will focus specifically on the LLaMA 2 model. Our goal is to equip you with the necessary knowledge and tools to make the most out of this powerful model, driving improved performance and results. Stay tuned for our detailed breakdown, which will take you step-by-step through the fine-tuning process.
