In the fast-moving AI field, success requires an understanding of the theories and algorithms behind Reinforcement Learning from Human Feedback (RLHF). RLHF uses human feedback to connect an LLM's performance and responsiveness to user intent, enhancing traditional fine-tuning methods and going beyond grammar and syntax toward capturing human values. This hands-on guide gives readers a deeper understanding of RLHF by letting them experiment with language models trained on human feedback, illustrating the theoretical concepts and encouraging AI innovation.

This article compares two notable optimization techniques: Proximal Policy Optimization (PPO) and Direct Preference Optimization (DPO). PPO improves reinforcement learning (RL) training through an iterative policy improvement process constrained by a clipped surrogate objective. DPO simplifies the RL pipeline by expressing the reward implicitly through the policy, merging reward modeling and policy optimization into a single training stage that is both stable and simple. Each method tackles distinct challenges, and together they lay a solid foundation for understanding and applying these techniques in more depth. Make sure you have read the first part of this article before continuing.

Setting Up the Coding Environment

Step 1: Choose Your Deep Learning Framework

TensorFlow

Installation: Install TensorFlow, ideally the latest version, to ensure compatibility with most models and libraries. Use pip for installation: `pip install tensorflow`. For GPU support (if you have a compatible NVIDIA GPU), recent TensorFlow releases on Linux use `pip install "tensorflow[and-cuda]"`.

PyTorch

Installation: Install PyTorch by selecting the appropriate version for your system from the PyTorch website. A typical installation command is `pip install torch torchvision torchaudio`.

Ensure you have the correct CUDA version if you're planning to use a GPU.
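To confirm that the installed framework can actually see your GPU, a quick check like the one below can help. It is a minimal sketch that assumes PyTorch; the helper name `describe_gpu_setup` is hypothetical, and the reported CUDA version is the one PyTorch was built against, not your driver version.

```python
import importlib.util


def describe_gpu_setup() -> dict:
    """Report whether PyTorch is installed and whether it can see a CUDA GPU."""
    if importlib.util.find_spec("torch") is None:
        # PyTorch is not installed in this environment
        return {"torch_installed": False, "cuda_available": False,
                "cuda_version": None, "device_name": None}

    import torch

    cuda_ok = torch.cuda.is_available()
    return {
        "torch_installed": True,
        "cuda_available": cuda_ok,
        # CUDA version PyTorch was compiled with; None for CPU-only builds
        "cuda_version": torch.version.cuda,
        "device_name": torch.cuda.get_device_name(0) if cuda_ok else None,
    }


if __name__ == "__main__":
    print(describe_gpu_setup())
```

With TensorFlow, `tf.config.list_physical_devices("GPU")` serves a similar purpose.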

Step 2: Set Up Hugging Face's Transformers Library

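The Transformers library provides a wide range of pre-trained models, including GPT-2 and open GPT-3-style models such as GPT-Neo and GPT-J (the original GPT-3 weights are not public and are available only through OpenAI's API). These models are essential for NLP tasks.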

Installation: `pip install transformers`

Usage: Import the library and load your model of choice, such as GPT-2.
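A minimal sketch of loading GPT-2 follows. It uses the `AutoTokenizer` and `AutoModelForCausalLM` classes from Transformers; the helper names `load_gpt2` and `generate_text` are hypothetical, and the first call downloads the `gpt2` checkpoint from the Hugging Face Hub.

```python
def load_gpt2(model_name: str = "gpt2"):
    """Download (on first use) and return the GPT-2 tokenizer and language model."""
    # Imported inside the function so this file can be imported without transformers
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)
    model.eval()  # inference mode: disables dropout
    return tokenizer, model


def generate_text(tokenizer, model, prompt: str, max_new_tokens: int = 30) -> str:
    """Greedily decode a continuation of `prompt` with the loaded model."""
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=False,                      # greedy decoding
        pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no pad token by default
    )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

For example, `tokenizer, model = load_gpt2()` followed by `print(generate_text(tokenizer, model, "Human feedback helps"))` prints a short greedy continuation of the prompt.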

Step 3: Verify the Computational Requirements

Training and fine-tuning LLMs requires an enormous amount of computing power, especially for larger models. Make sure that your machine can handle the job:

GPU Requirements: A powerful NVIDIA GPU (like the Tesla V100 or RTX 3080) is highly recommended for efficient processing. It is important to make sure that the TensorFlow or PyTorch installation is compatible with your GPU.

Memory Requirements: LLMs are memory intensive, so make sure your computer has enough RAM and GPU memory to run the models. As a rough guideline, working with larger models calls for at least 32 GB of RAM and a GPU with 12 GB of VRAM.
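To see where figures like these come from, a back-of-the-envelope estimate helps. The sketch below assumes fp32 values (4 bytes each) and full fine-tuning with Adam, which stores a gradient plus two moment estimates per parameter; activations and framework overhead are deliberately ignored, so real usage is higher. The function name is hypothetical.

```python
def estimate_memory_gb(num_params: int, bytes_per_value: int = 4) -> dict:
    """Rough lower-bound memory estimate (decimal GB) for a model.

    Assumes each stored value takes `bytes_per_value` bytes (4 for fp32) and that
    full fine-tuning uses Adam. Activations and overhead are NOT included.
    """
    gb = 1e9
    weights = num_params * bytes_per_value
    gradients = num_params * bytes_per_value        # one gradient per parameter
    adam_states = 2 * num_params * bytes_per_value  # first and second moment estimates
    return {
        "inference_gb": weights / gb,
        "full_finetune_gb": (weights + gradients + adam_states) / gb,
    }


if __name__ == "__main__":
    # GPT-2 small has roughly 124 million parameters
    print(estimate_memory_gb(124_000_000))
```

For GPT-2 small this gives roughly 0.5 GB for the weights and about 2 GB for full fine-tuning. PPO-based RLHF typically keeps a policy, a frozen reference model, a reward model, and a value model in memory at the same time, which multiplies these requirements.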

Storage: Make sure you have enough disk space for datasets, model checkpoints, and logs. SSDs are strongly preferred because they load and save data much faster than hard drives.

Lastly, make sure you have a robust development environment configured for Python development, such as Visual Studio Code or PyCharm. These environments offer beneficial features like code completion and debugging tools. These tools are invaluable for complex projects like RLHF with LLMs.

Following these steps carefully lays a strong foundation for RLHF projects, letting you explore LLM capabilities while efficiently tracking and improving your experiments.

Implementing PPO

Our pursuit of enhanced AI capabilities drives our research into integrating PPO with RLHF, which aims to make generated text more human-like and better aligned with human values and intentions. This section examines how to integrate PPO into the RLHF framework, using the performance of OpenAI's 2019 RLHF codebase as a reference target.


Model Selection

Start by loading your LLM using Hugging Face’s Transformers library.
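In PPO-based RLHF, the loaded model usually serves as the policy, and a frozen copy of it acts as a reference model. A common setup, following OpenAI's 2019 RLHF work, penalizes the policy for drifting away from the reference by subtracting a KL term from the reward. The sketch below assumes per-token log-probabilities have already been computed, that responses are unpadded so the reward-model score belongs on the last token, and that the penalty is spread per token; the coefficient `beta=0.1` and the function names are illustrative, not from a specific library.

```python
import copy

import torch
import torch.nn as nn


def make_reference_model(policy: nn.Module) -> nn.Module:
    """Return a frozen copy of the policy to serve as the KL reference model."""
    reference = copy.deepcopy(policy)
    reference.eval()
    for param in reference.parameters():
        param.requires_grad_(False)  # the reference is never updated
    return reference


def kl_shaped_rewards(
    rm_scores: torch.Tensor,        # (batch,) reward-model score per response
    policy_logprobs: torch.Tensor,  # (batch, seq) log pi(token) under the policy
    ref_logprobs: torch.Tensor,     # (batch, seq) log pi_ref(token) under the reference
    beta: float = 0.1,
) -> torch.Tensor:
    """Per-token rewards: -beta * (sampled KL estimate) everywhere, plus the RM score at the end."""
    rewards = -beta * (policy_logprobs - ref_logprobs).detach()
    # Assumes no padding, so the last position is the final response token
    rewards[:, -1] += rm_scores.detach()
    return rewards
```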

The reference research paper is "Proximal Policy Optimization Algorithms" (Schulman et al., 2017) by researchers at OpenAI. We can also refer to the CartPole PPO implementation in the official Keras documentation.

PPO Integration

Implement the PPO algorithm, focusing on the iterative policy improvement process. The algorithm adjusts the model's parameters to maximize expected rewards. It is constrained by a clipped surrogate objective function to maintain training stability.
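For reference, the clipped surrogate objective from the PPO paper is written below. Here $r_t(\theta)$ is the probability ratio between the new and old policies, $\hat{A}_t$ is an advantage estimate, and $\epsilon$ is the clipping parameter (0.2 is a common choice). In the RLHF setting, the state $s_t$ is the prompt plus the tokens generated so far, and the action $a_t$ is the next token.

$$
r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)}, \qquad
L^{\text{CLIP}}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\,\hat{A}_t,\ \operatorname{clip}\left(r_t(\theta),\, 1-\epsilon,\, 1+\epsilon\right)\hat{A}_t\right)\right]
$$

PPO maximizes $L^{\text{CLIP}}$; taking the minimum removes any incentive to move the ratio outside $[1-\epsilon, 1+\epsilon]$ in the direction the advantage favors.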

Libraries required

Pseudocode for PPO integration

In each iteration, the current policy generates responses to a batch of prompts, the reward model scores them, and the policy and value networks are then updated for several epochs on that batch. The clipped surrogate objective keeps each update close to the policy that collected the data, which maintains training stability.
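The core of that update can be sketched in PyTorch. This is a minimal sketch rather than a complete RLHF trainer: it assumes log-probabilities, advantages, and returns were already computed for a batch of collected data, and `evaluate` is a hypothetical callable that returns the current log-probabilities and value predictions. The clipping parameter of 0.2 and value-loss coefficient of 0.5 are illustrative defaults.

```python
import torch
import torch.nn.functional as F


def ppo_loss(
    new_logprobs: torch.Tensor,  # log pi_theta(a_t | s_t) under the current parameters
    old_logprobs: torch.Tensor,  # same quantity under the policy that collected the data
    advantages: torch.Tensor,    # advantage estimates A_t
    values: torch.Tensor,        # critic predictions V(s_t)
    returns: torch.Tensor,       # regression targets for the critic
    clip_eps: float = 0.2,
    value_coef: float = 0.5,
) -> torch.Tensor:
    """Clipped PPO policy loss plus a mean-squared-error value loss (both minimized)."""
    ratio = torch.exp(new_logprobs - old_logprobs)
    unclipped = ratio * advantages
    clipped = torch.clamp(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # PPO maximizes the elementwise minimum, so we minimize its negative mean
    policy_loss = -torch.min(unclipped, clipped).mean()
    value_loss = F.mse_loss(values, returns)
    return policy_loss + value_coef * value_loss


def ppo_update(evaluate, optimizer, batch: dict, num_epochs: int = 4, clip_eps: float = 0.2) -> float:
    """Run several gradient epochs on one batch of collected experience.

    `evaluate(batch)` (hypothetical) returns (new_logprobs, values) for the batch.
    Returns the loss from the final epoch.
    """
    loss_value = float("nan")
    for _ in range(num_epochs):
        new_logprobs, values = evaluate(batch)
        loss = ppo_loss(new_logprobs, batch["old_logprobs"], batch["advantages"],
                        values, batch["returns"], clip_eps=clip_eps)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        loss_value = loss.item()
    return loss_value
```

Reusing the same batch for several epochs is what lets PPO squeeze more out of each rollout. Once the probability ratio leaves the clipping range in the direction the advantage favors, the clipped term has zero gradient, so further epochs stop pushing that sample.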

Training the reward model

Training the reward model involves iterating through the reward data loader, making predictions, calculating the loss, and updating the model parameters.
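A minimal sketch of that loop is shown below. It assumes a pairwise comparison dataset in which each batch holds inputs for a preferred and a dispreferred response, and it uses the pairwise loss $-\log \sigma(r_{\text{chosen}} - r_{\text{rejected}})$ used, for example, in OpenAI's InstructGPT work. The reward model can be any module that outputs one scalar per input; in practice it is usually a language-model backbone with a scalar head. Function names are hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def pairwise_reward_loss(chosen_rewards: torch.Tensor, rejected_rewards: torch.Tensor) -> torch.Tensor:
    """Bradley-Terry style loss that pushes r(chosen) above r(rejected)."""
    return -F.logsigmoid(chosen_rewards - rejected_rewards).mean()


def train_reward_model(reward_model: nn.Module, data_loader, optimizer, num_epochs: int = 1) -> float:
    """Iterate over (chosen_inputs, rejected_inputs) batches; return the last batch loss."""
    reward_model.train()
    last_loss = float("nan")
    for _ in range(num_epochs):
        for chosen_inputs, rejected_inputs in data_loader:
            chosen = reward_model(chosen_inputs).squeeze(-1)      # score for preferred response
            rejected = reward_model(rejected_inputs).squeeze(-1)  # score for dispreferred response
            loss = pairwise_reward_loss(chosen, rejected)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            last_loss = loss.item()
    return last_loss
```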

Evaluating PPO

The evaluation function estimates the values of each observation and the log probabilities of each action in the most recent batch using the actor and critic networks.
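Because the actor here uses a MultivariateNormal distribution, this evaluation step assumes a continuous-action setup like those in common PPO tutorials. Under that assumption, an `evaluate` method might look like the sketch below, with small MLPs and a fixed diagonal covariance; the class name, layer sizes, and `action_std` value are illustrative. For a language model, the actor's output distribution would instead be a `Categorical` over the vocabulary.

```python
import torch
import torch.nn as nn
from torch.distributions import MultivariateNormal


class ActorCritic(nn.Module):
    """Small actor and critic MLPs for a continuous action space (illustrative sizes)."""

    def __init__(self, obs_dim: int, act_dim: int, hidden: int = 64, action_std: float = 0.5):
        super().__init__()
        self.actor = nn.Sequential(nn.Linear(obs_dim, hidden), nn.Tanh(), nn.Linear(hidden, act_dim))
        self.critic = nn.Sequential(nn.Linear(obs_dim, hidden), nn.Tanh(), nn.Linear(hidden, 1))
        # Fixed diagonal covariance shared by every action dimension
        self.register_buffer("cov_mat", torch.diag(torch.full((act_dim,), action_std ** 2)))

    def evaluate(self, batch_obs: torch.Tensor, batch_acts: torch.Tensor):
        """Return V(s) for each observation and log pi(a|s) for each action in the batch."""
        values = self.critic(batch_obs).squeeze(-1)          # critic: one value per observation
        mean = self.actor(batch_obs)                         # actor: mean action per observation
        dist = MultivariateNormal(mean, covariance_matrix=self.cov_mat)
        log_probs = dist.log_prob(batch_acts)                # one log-probability per action
        return values, log_probs
```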

Implementing PPO within RLHF requires a detailed approach. The process starts with loading the language model, guided by the PPO research paper and existing implementations, which provide a solid starting point. The PPO integration code centers on the iterative process of continuous policy improvement and uses a clipped objective function to improve training stability. Training the reward model is essential and includes loading data, making predictions, calculating the loss, and optimizing the model parameters. Evaluation is equally important: the critic network provides value estimates, while the actor network computes log probabilities using a MultivariateNormal distribution. By combining theoretical understanding with practical coding, this approach aims to bridge the gap between AI capabilities and the generation of human-like text.

Implementing DPO

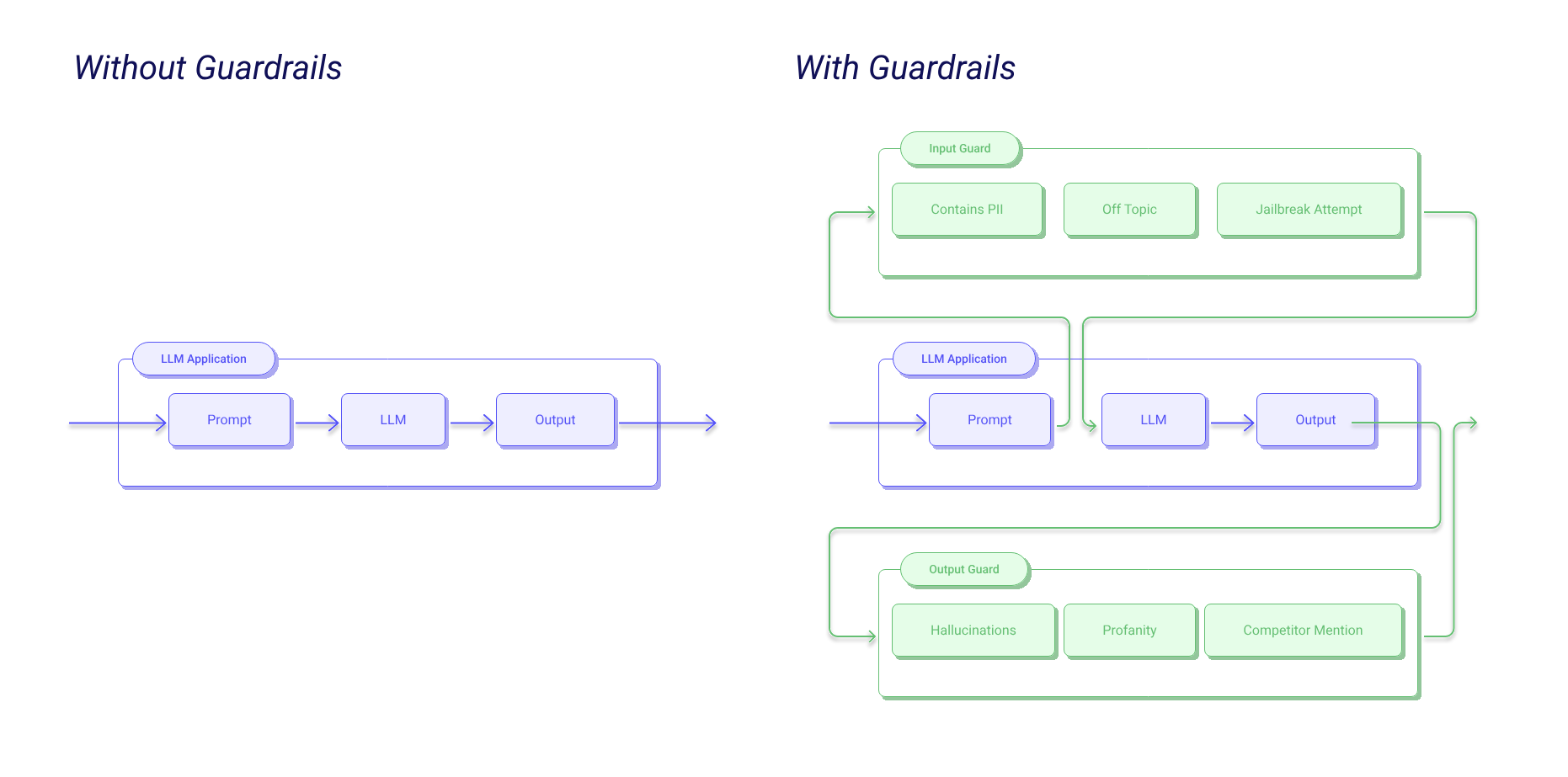

The DPO pipeline comprises two primary stages: Supervised Fine-tuning (SFT) and Preference Learning. DPO's simplicity comes from defining the preference loss directly as a function of the policy, which eliminates the need to train a reward model beforehand.

1. Model Selection

Begin by loading your LM using Hugging Face’s Transformers library. The choice of LM can significantly impact the performance of DPO, and it's essential to align it with your specific use case.

2. Supervised Fine-tuning (SFT)

The first stage, commonly referred to as Supervised Fine-tuning (SFT), fine-tunes the LM on the dataset(s) of interest. This step gives the model initial knowledge and context relevant to the desired outcomes.
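At its core, SFT minimizes next-token cross-entropy on the target text. The sketch below shows that loss explicitly, assuming logits of shape (batch, sequence, vocabulary) and Hugging Face's convention of marking ignored label positions with -100; the function name is hypothetical. Hugging Face causal LM models compute an equivalent shifted loss internally when you pass `labels` to their forward call.

```python
import torch
import torch.nn.functional as F


def causal_lm_loss(logits: torch.Tensor, labels: torch.Tensor, ignore_index: int = -100) -> torch.Tensor:
    """Next-token cross-entropy used in supervised fine-tuning.

    logits: (batch, seq_len, vocab); labels: (batch, seq_len) token ids.
    Label positions equal to `ignore_index` (e.g. prompt or padding) are skipped.
    """
    shift_logits = logits[:, :-1, :]  # the prediction made at position t ...
    shift_labels = labels[:, 1:]      # ... is scored against the token at position t + 1
    return F.cross_entropy(
        shift_logits.reshape(-1, shift_logits.size(-1)),
        shift_labels.reshape(-1),
        ignore_index=ignore_index,
    )
```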

3. Preference Learning

DPO then introduces preference learning using preference data, which ideally comes from the same distribution as the examples used in SFT. Rather than fitting an intermediate reward model, this stage learns directly from the recorded preferences.

Pseudocode for DPO implementation

The DPO implementation iterates over batches of preference data. A compute_preference_loss() function calculates the loss from the direct preferences provided, and the optimizer then adjusts the model's parameters to minimize this loss.
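A minimal PyTorch sketch of this loop follows. It assumes each batch holds token ids for a chosen and a rejected response to the same prompt, plus masks that mark response tokens, and that calling the model returns logits directly (for a Hugging Face model, use `model(input_ids).logits`). Here `compute_preference_loss()` implements the DPO loss against a frozen reference model, typically the SFT model; `beta=0.1`, the helper names, and the dictionary keys are illustrative assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def sequence_logprob(model: nn.Module, input_ids: torch.Tensor, response_mask: torch.Tensor) -> torch.Tensor:
    """Summed log-probability of the response tokens (mask = 1) under `model`."""
    logits = model(input_ids)[:, :-1, :]  # position t predicts token t + 1
    targets = input_ids[:, 1:]
    token_logps = torch.log_softmax(logits, dim=-1).gather(-1, targets.unsqueeze(-1)).squeeze(-1)
    mask = response_mask[:, 1:].to(token_logps.dtype)  # ignore prompt and padding tokens
    return (token_logps * mask).sum(dim=-1)


def compute_preference_loss(policy, reference, batch: dict, beta: float = 0.1) -> torch.Tensor:
    """DPO loss for a batch of (chosen, rejected) pairs that share the same prompts."""
    pi_chosen = sequence_logprob(policy, batch["chosen_ids"], batch["chosen_mask"])
    pi_rejected = sequence_logprob(policy, batch["rejected_ids"], batch["rejected_mask"])
    with torch.no_grad():  # the reference (SFT) model stays frozen
        ref_chosen = sequence_logprob(reference, batch["chosen_ids"], batch["chosen_mask"])
        ref_rejected = sequence_logprob(reference, batch["rejected_ids"], batch["rejected_mask"])
    margin = (pi_chosen - ref_chosen) - (pi_rejected - ref_rejected)
    return -F.logsigmoid(beta * margin).mean()


def train_dpo(policy, reference, preference_batches, optimizer, beta: float = 0.1) -> list:
    """Iterate over batches of preference data and minimize the DPO loss."""
    policy.train()
    losses = []
    for batch in preference_batches:
        loss = compute_preference_loss(policy, reference, batch, beta)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        losses.append(loss.item())
    return losses
```

Because the policy starts as an exact copy of the reference, the first loss equals $\ln 2 \approx 0.693$; it falls as the policy raises the likelihood of chosen responses relative to rejected ones.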

Understanding Preference Data

Why Preference Data Matters:

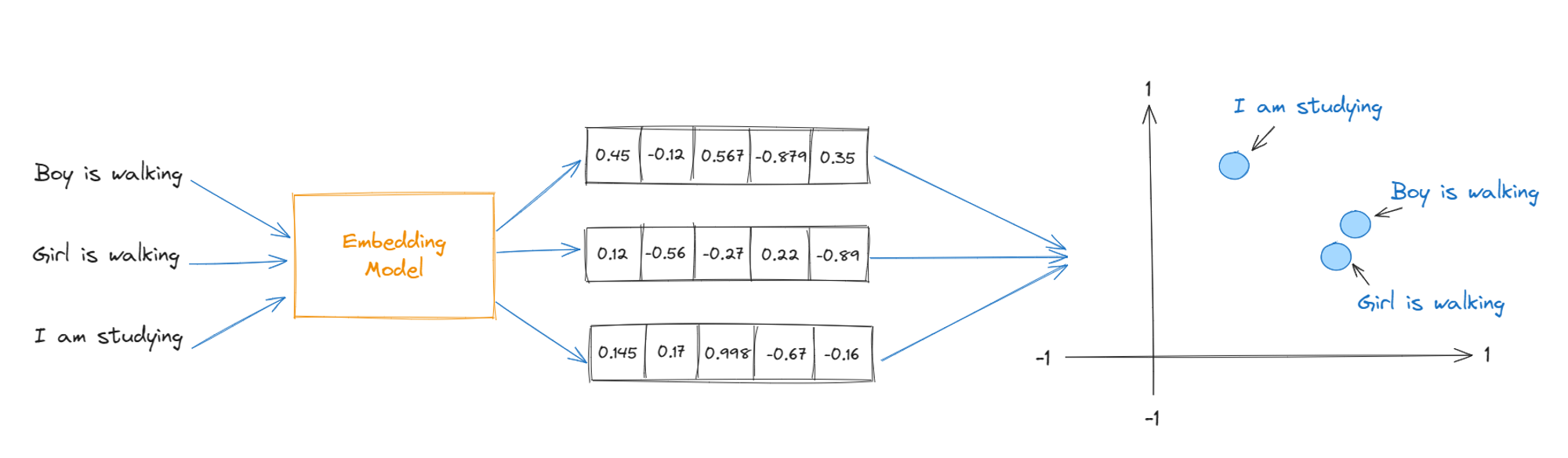

Preference data in Natural Language Processing (NLP) involves a curated collection of options ranked by annotators. This valuable dataset provides insights into human preferences. It enables the refinement of language models to generate outputs that better align with human expectations.

Annotator Rankings

Annotators are often domain experts or individuals familiar with the context of the task. They rank different model-generated responses based on their preferences. For instance, if the task is generating responses to customer queries, annotators might rank responses based on factors like clarity, politeness, and informativeness.
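Rankings like these are usually converted into pairwise comparisons before training, since both reward-model training and DPO consume (chosen, rejected) pairs. One common approach, used for example in OpenAI's InstructGPT work, expands a ranking of $k$ responses into all $k(k-1)/2$ ordered pairs. The sketch below assumes the ranking is listed best first; the function name and the `prompt`/`chosen`/`rejected` field names are a common convention rather than a fixed standard.

```python
from itertools import combinations


def ranking_to_pairs(prompt: str, ranked_responses: list) -> list:
    """Expand one annotator ranking (best first) into pairwise preference records.

    A ranking of k responses yields k * (k - 1) / 2 (chosen, rejected) pairs.
    """
    pairs = []
    for better, worse in combinations(ranked_responses, 2):
        # combinations() preserves input order, so `better` was ranked above `worse`
        pairs.append({"prompt": prompt, "chosen": better, "rejected": worse})
    return pairs
```

For instance, ranking three replies to a customer query yields three pairs: best over second, best over third, and second over third.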

Utilizing Preference Data for Model Refinement

Once collected, preference data becomes a powerful tool for refining language models. Models can be fine-tuned to generate outputs that align more closely with the preferences expressed in the dataset. This process goes beyond traditional supervised learning. It allows models to capture the subtle nuances that make certain responses more desirable to humans.

During the training phase, preference data guides the model toward responses that are contextually appropriate and favored by humans. This is done by adjusting the model's parameters so that it assigns higher probabilities to sequences that align with the preferences in the dataset. Using preference data in NLP tasks brings us closer to language models that not only excel at their tasks but also cater to users' preferences, producing responses that are both user-friendly and contextually fitting.

Code Implementation for DPO

The actual code depends on the LM architecture and the deep learning framework, but the core loss can be expressed compactly in PyTorch.
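A simplified PyTorch sketch of the DPO loss is shown below. It works on summed sequence log-probabilities, which keeps it independent of the model architecture: computing those log-probabilities is the architecture-specific part. The function also returns the implicit rewards $\beta \log(\pi_\theta / \pi_{\text{ref}})$, which are handy for logging; the name and signature are illustrative rather than taken from a particular library.

```python
import torch
import torch.nn.functional as F


def dpo_loss(
    policy_chosen_logps: torch.Tensor,    # (batch,) log pi_theta(y_w | x)
    policy_rejected_logps: torch.Tensor,  # (batch,) log pi_theta(y_l | x)
    ref_chosen_logps: torch.Tensor,       # (batch,) log pi_ref(y_w | x)
    ref_rejected_logps: torch.Tensor,     # (batch,) log pi_ref(y_l | x)
    beta: float = 0.1,
):
    """Return the mean DPO loss and the implicit rewards for chosen and rejected responses."""
    chosen_rewards = beta * (policy_chosen_logps - ref_chosen_logps)
    rejected_rewards = beta * (policy_rejected_logps - ref_rejected_logps)
    # Binary cross-entropy where the label "chosen beats rejected" is always 1
    loss = -F.logsigmoid(chosen_rewards - rejected_rewards).mean()
    return loss, chosen_rewards.detach(), rejected_rewards.detach()
```

A useful training metric is the reward accuracy, `(chosen_rewards > rejected_rewards).float().mean()`, the fraction of pairs in which the implicit reward ranks the preferred response higher.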

The elegance of DPO lies in its simplicity. Instead of training a reward model and then optimizing a policy against it, DPO defines the preference loss directly as a function of the policy, so no reward model has to be trained beforehand.

During the fine-tuning phase, DPO effectively uses the LLM itself as an implicit reward model. The policy is optimized with a binary cross-entropy objective on human preference data, which indicates which response in each pair is preferred and which is not. Comparing the model's likelihoods for the preferred and dispreferred responses drives the policy updates, improving its performance.
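For reference, the DPO paper writes this binary cross-entropy objective as shown below, where $y_w$ and $y_l$ are the preferred and dispreferred responses to prompt $x$, $\pi_{\text{ref}}$ is the frozen SFT model, $\sigma$ is the logistic function, and $\beta$ controls how far the policy may move from the reference.

$$
\mathcal{L}_{\text{DPO}}(\pi_\theta; \pi_{\text{ref}}) = -\mathbb{E}_{(x, y_w, y_l) \sim \mathcal{D}}\left[\log \sigma\left(\beta \log \frac{\pi_\theta(y_w \mid x)}{\pi_{\text{ref}}(y_w \mid x)} - \beta \log \frac{\pi_\theta(y_l \mid x)}{\pi_{\text{ref}}(y_l \mid x)}\right)\right]
$$

The term $\hat{r}_\theta(x, y) = \beta \log \frac{\pi_\theta(y \mid x)}{\pi_{\text{ref}}(y \mid x)}$ acts as the implicit reward, which is the sense in which the language model doubles as a reward model.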

Comparative Analysis of PPO and DPO

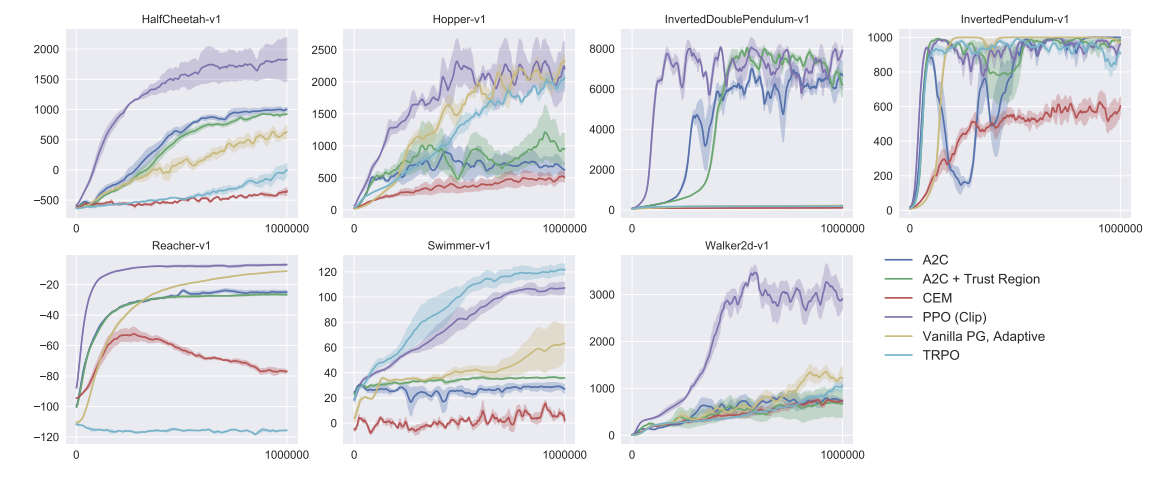

PPO has been shown to work very well in situations with large, continuous action spaces, such as robotics and complex game environments. This is because it improves the policy iteratively and uses a clipped surrogate objective function, which makes it well suited to tasks where even small policy changes can significantly affect performance. PPO's sample efficiency also benefits from running multiple epochs of stochastic gradient descent on each batch of collected data, which is especially useful when gathering data is difficult or expensive.

DPO, by contrast, simplifies reinforcement learning by optimizing the policy directly on human preferences, with no intermediate reward model. The policy is shaped by the preferences that human annotators expressed over collected pairs of responses or actions. This direct approach to learning from human feedback makes DPO easy to use and can align more naturally with human values. DPO is especially helpful when a clear reward function is hard to define or when human judgment is central, as in natural language processing tasks such as text generation or conversation modeling, where human preferences can steer the model toward more natural, engaging, or appropriate responses. Its direct approach can also speed up convergence on tasks where preferences clearly indicate the direction of optimization. However, its reliance on large amounts of human-labeled data can limit its scalability and practicality.

Performance Metrics for Comparison

- Stability and Robustness: PPO's clipped objective function contributes to its stability during training, making it comparatively robust, although it still benefits from careful hyperparameter tuning. DPO, while straightforward, may require careful calibration of the preference data to ensure the model does not overfit to specific human biases or preferences.
- Sample Efficiency: PPO is generally more sample efficient than vanilla policy gradient methods because it reuses collected data for multiple gradient updates. DPO's efficiency largely depends on the quality and quantity of the available preference data; insufficient or biased preference data can hinder its effectiveness.
- Applicability to Different Domains: PPO's versatility makes it suitable for a wide range of applications, from control tasks to complex decision-making environments. DPO, on the other hand, excels in domains where human judgment is paramount and where the reward function is difficult to specify programmatically.
- Ease of Implementation: Both methods have their complexities. PPO requires careful tuning of the clipping parameter and an understanding of policy gradient methods, while DPO demands a robust system for collecting and processing human preferences.

Handling Reward Model Training Across Techniques

The choice of method, such as PPO or DPO, can depend on task complexity. PPO might be preferred for tasks with well-defined reward structures and the need for fine-grained policy adjustments. In contrast, DPO could be more direct and potentially more effective for tasks where human judgment is essential and the reward function is ambiguous. Human-in-the-loop considerations are also important. DPO inherently requires human feedback, which can be both a strength and a limitation. The quality and scalability of collecting feedback are critical considerations. PPO, while less dependent on human feedback, may benefit from human-in-the-loop approaches during initial reward function design or for periodic adjustments based on performance.

Addressing Bias and Model Evaluation

Strategies for Diverse Evaluator Selection

Effectively addressing bias requires selecting evaluators with diverse perspectives. Evaluators should come from various demographic backgrounds, cultural contexts, and areas of expertise. This ensures a thorough assessment of the language model and reduces the risk of favoring certain groups or perpetuating biases. By including linguists, AI researchers, diversity experts, domain specialists, and end-users, a more nuanced evaluation can be achieved.

Techniques for Validation and Feedback Calibration

Validation and feedback calibration are crucial for ensuring that evaluation metrics are dependable. Cross-validation, which tests the model on different subsets of the data, helps estimate its overall performance. Calibration techniques adjust evaluator feedback to account for personal biases, promoting a fair and consistent evaluation process. These steps are essential for gaining reliable insights into the model's behavior across a broad range of inputs.
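As one simple calibration technique (an illustration, not the only option), each evaluator's scores can be converted to z-scores so that consistently harsh or lenient raters do not skew the aggregate. The sketch assumes ratings arrive as (evaluator, item, score) tuples; the function name is hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def calibrate_scores(ratings: list) -> list:
    """Normalize each evaluator's scores to zero mean and unit variance.

    `ratings` is a list of (evaluator_id, item_id, score) tuples. Subtracting an
    evaluator's mean removes a consistently harsh or lenient bias; dividing by
    their standard deviation evens out how widely they use the rating scale.
    """
    by_evaluator = defaultdict(list)
    for evaluator, _, score in ratings:
        by_evaluator[evaluator].append(score)

    stats = {}
    for evaluator, scores in by_evaluator.items():
        sd = pstdev(scores)
        stats[evaluator] = (mean(scores), sd if sd > 0 else 1.0)  # avoid division by zero

    return [
        (evaluator, item, (score - stats[evaluator][0]) / stats[evaluator][1])
        for evaluator, item, score in ratings
    ]
```

Calibrated scores for the same item can then be averaged across evaluators to obtain a less biased aggregate.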

Conclusion and Future Directions

In conclusion, the analysis of PPO and DPO highlights their distinct strengths and weaknesses. PPO excels at refining policies iteratively, while DPO streamlines training through direct preference learning. A clear understanding of these differences is crucial when choosing the best approach for a given use case.

The future of RLHF techniques looks promising, opening new research avenues for better language models. Hybrid methods that combine the strengths of PPO and DPO could lead to more resilient and adaptable models. Applying RLHF to specialized fields such as healthcare or legal text comprehension also offers compelling possibilities. Continued research and innovation in RLHF are essential for advancing language model capabilities and ensuring their safe application in real-world scenarios.