What are Transformers Models?

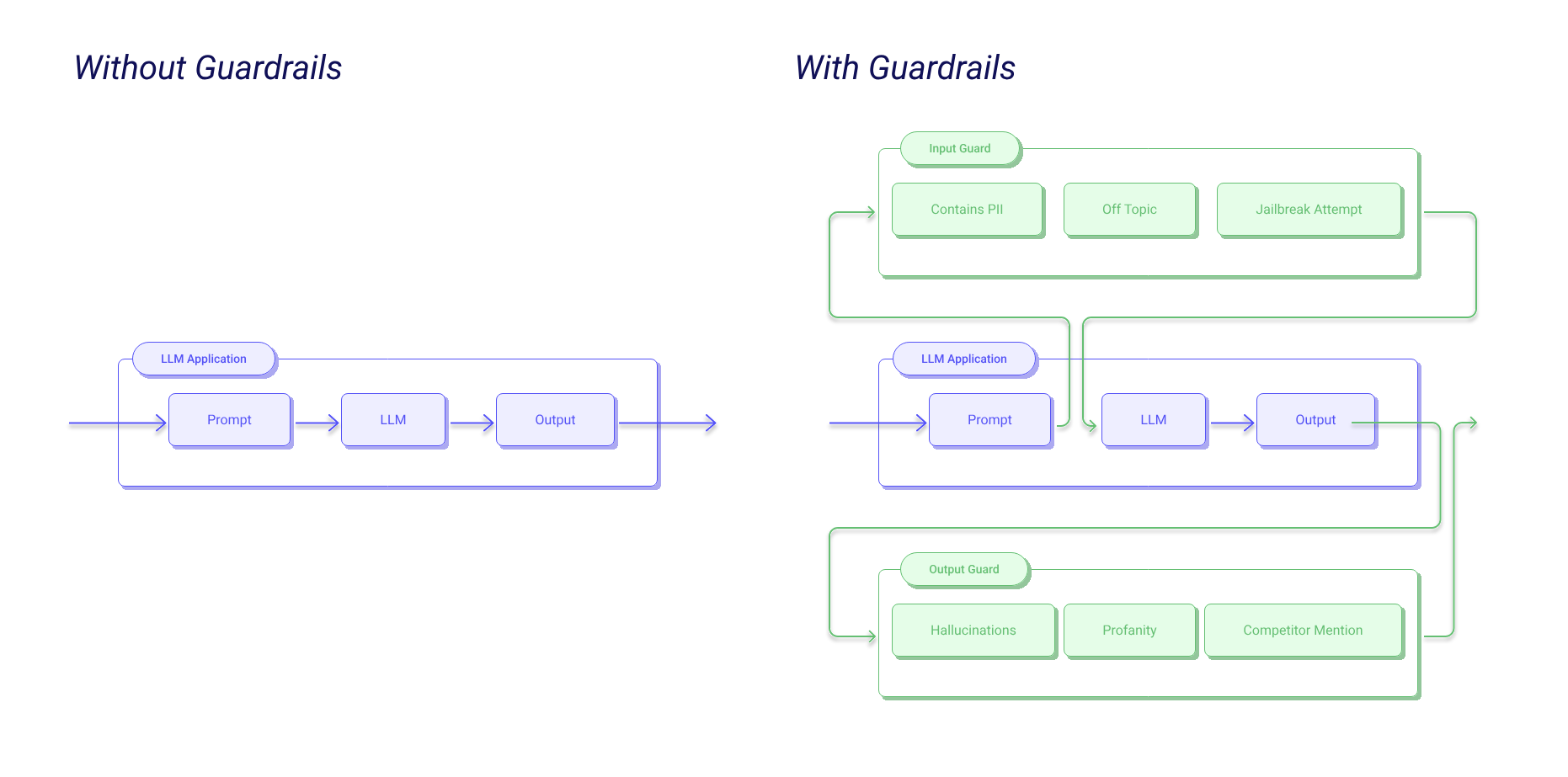

The Transformer, a sequence transduction model, was first showcased by Vaswani et al. in their groundbreaking research paper "Attention is All You Need." This model deviates from conventional sequence-to-sequence models which typically hinge on recurrent or convolutional neural networks. The Transformer relies exclusively on attention mechanisms, completely forgoing the use of recurrence and convolution. Given its unique architecture, it's especially competent for tasks such as machine translation, in which it has exhibited exemplary performance concerning both quality and training efficiency.

So, traditional sequence-to-sequence models (think ones using recurrent neural networks or RNNs) have been a popular choice for things like language modeling and machine translation. But one downside? They're a bit slow due to their sequential computation, which makes training a bit of a time hog. Enter the Transformer! This clever alternative uses self-attention mechanisms, ditching the need for recurrent connections. This means it's not held back by sequential nature, making it better at parallelization and speeding up training time - pretty neat, huh? When you compare the Transformer with other models like the Extended Neural GPU, ByteNet, and ConvS2S, you'll see the Transformer really shines, boasting reduced computational complexity and an improved knack for capturing long-range dependencies.

The Transformer Architecture

Encoder and Decoder Stacks

The Transformer architecture consists of encoder and decoder stacks. The encoder is composed of stacked identical layers, each containing multi-head self-attention and a fully connected feed-forward network. The decoder has similar layers but includes additional attention mechanisms for attending over the encoder's output.

Why are Attention Mechanisms so efficient?

The attention mechanism utilized in the Transformer model, known as the "Scaled Dot-Product Attention," operates by computing the dot products of queries and keys. It's a cool process where the dot products of queries and keys are calculated. But it's not doing this all by itself! It's actually part of a bigger process. Once the dot products are figured out, they are scaled down to keep the values manageable. After the scaling, a softmax function is applied, letting the model assign different levels of importance to various parts of the input.

In addition to the Scaled Dot-Product Attention, the Transformer model also employs a mechanism known as Multi-Head Attention. This feature allows the model to attend to information from different representation subspaces simultaneously. By doing this, the model can process and synthesize information from various sources, thereby greatly enhancing its capabilities. The Multi-Head Attention mechanism is a critical aspect of the Transformer model as it allows the model to better understand and interpret the complex relationships and patterns within the data it is processing.

.png)

Beyond Attention: Feed-Forward Networks and Positional Encoding

Each layer in the encoder and decoder of the transformer model contains a position-wise feed-forward network. This network applies linear transformations with ReLU activations to each position separately. Learned embeddings are used to convert input and output tokens to vectors of a specific dimension. Shared weight matrices are utilized to connect the embedding layers and the pre-softmax linear transformation.

Since the model lacks recurrence or convolution, positional encodings are added to the input embeddings to provide information about the order of tokens in the sequence. The original research paper introduces sinusoidal positional encodings based on sine and cosine functions. In the transformer model, each encoder and decoder layer also contains a position-wise feed-forward network that performs transformations on the data. To retain the sequence order information, positional encodings are added to the input embeddings due to the lack of recurrence or convolution in the Transformers.

The Importance of Paying Attention

Let's discuss about attention and self-attention mechanisms, the real superstars of transformer models! They've seriously upped the game in the world of natural language processing. What they do is pretty cool - they let the model zoom in on different bits of an input or output sequence, which really jazzes up tasks like language translation and text summarization.

Now in encoder-decoder set-ups, attention mechanisms help the decoder zero in on specific parts of the encoded input sequence, and that's a real game changer when it comes to generating accurate predictions. This is a big leap forward from older models like LSTMs, which had a bit of a bottleneck because they were stuck with a fixed-size summary vector. But with attention, the model can tap into information from any part of the input sequence, which really boosts its ability to suss out relationships between words or phrases.

And then we have self-attention, which is a special kind of attention that lets a model focus on different parts of the input sequence while it's encoding. It's not like traditional attention, which hones in on a separate sequence. Instead, self-attention is all about the sequence being encoded. This gets rid of the need to cram all the important stuff into a fixed set of vectors, which was a bit of a snag with recurrent models. With self-attention, each input element gets encoded with the whole sequence context in mind, so nothing gets left out. This knack for keeping hold of information makes self-attention a powerful tool in transformer models, allowing them to ace various tasks with impressive accuracy and efficiency.

Examining Self-Attention

Self-attention is a game-changer, especially for tasks involving shorter sequences, as it's highly efficient in terms of computational complexity, parallelization, and maximum path length between positions. Picture training like preparing for a marathon, where you're selecting the right gear (hardware specifications), setting a pace (learning rate schedules), and taking care of your health (applying regularization techniques like dropout and label smoothing). Now, these Transformers are not just showing up at the race, they're smashing records! They've outdone previous models in machine translation tasks and achieved top-notch results on English-to-German and English-to-French datasets. They've even outperformed ensembles from other architectures, and that too at a fraction of the training cost!

Their secret? The "Scaled Dot-Product Attention," which is like a super-powered magnifying glass, helping the model to focus dynamically on different parts of the input sequence as it processes information. And let's not forget about Multi-Head Attention, another cool feature that allows the Transformer to attend to different parts of the sequence at once, capturing various features and dependencies in the process. Think of it as a team of detectives, each focusing on different clues, and then combining their insights for a more nuanced understanding of the case. And that's the magic of self-attention in Transformers!

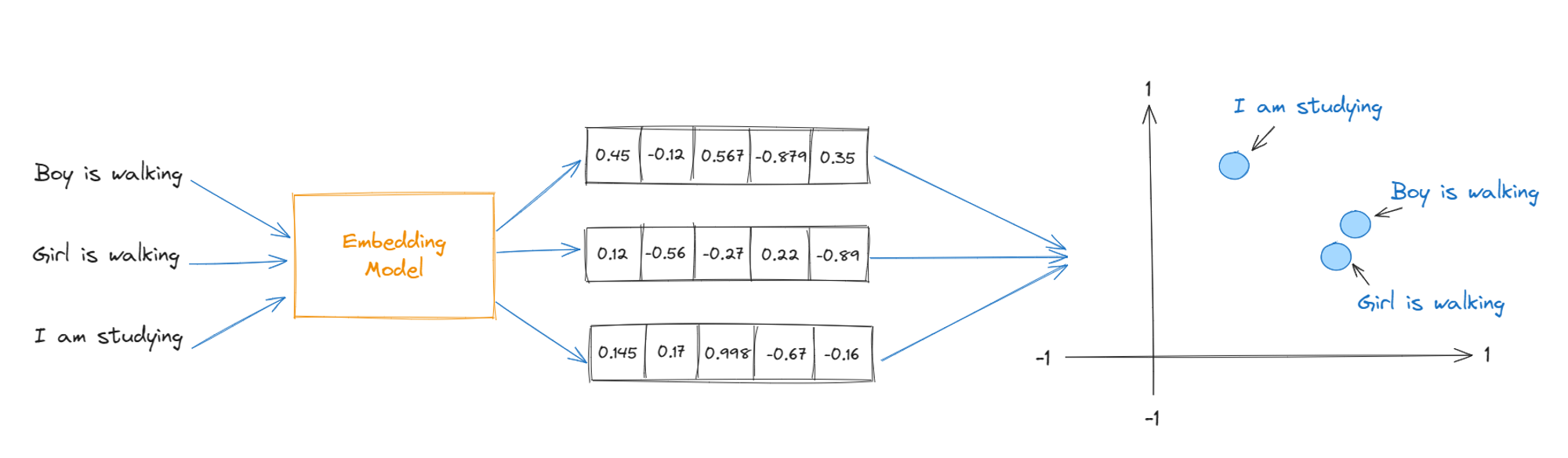

Scaled Dot Product attention

The scaled dot-product attention is a mechanism that Transformers use to weigh the significance of different parts of the input data. It computes attention scores based on the query (Q), key (K), and value (V) matrices derived from the input. The attention function is computed as a dot product of Q and K, scaled by the square root of the key's dimensionality, followed by a softmax function to obtain the weights on the values.Illustration: Imagine a set of arrows (Q) looking for matching directions in another set (K). When they align well, they produce a stronger signal (dot product). This signal is 'scaled' to avoid extremely large values that could affect the softmax distribution. Each Q arrow, now with a set of weights, collects information (V) based on these weights.

.png)

Defining the weight matrices

In Transformers, weight matrices are trainable parameters that are learned during the training process. For each input token, we have different weight matrices for queries (W^Q), keys (W^K), and values (W^V) in the attention mechanism, and an additional weight matrix for the output (W^O).

.png)

Unnormalized attention weights

Before normalization, attention weights are raw scores obtained by multiplying the query matrix with the key matrix transpose. These scores are unnormalized because they have not yet been scaled or passed through a softmax function.

.png)

Computing the attention scores

The attention scores are computed by scaling the dot product of Q and K with the inverse square root of the dimension of the keys, which helps in stabilizing the gradients during training. After scaling, a softmax function is applied to obtain a probability distribution.

.png)

Multi-head attention

Multi-head attention allows the model to jointly attend to information from different representation subspaces at different positions. Instead of one set of Q, K, and V, the Transformer uses multiple sets, allowing it to capture various aspects of the input data in parallel.

Illustration: Consider this as a panel of experts (heads) each focusing on different features of the same problem, then combining their insights to make a final decision.

.png)

Transformers and their Applications

Transformers are a class of models that utilize attention mechanisms to capture global dependencies in the data. They have been highly successful in various applications, most notably in natural language processing tasks such as translation, text summarization, and sentiment analysis.

Illustration for Applications:

- Translation: Picture a Transformer as a polyglot interpreter in a United Nations meeting, translating speeches into multiple languages simultaneously.

- Text Summarization: Envision a librarian who can swiftly read a book and produce a concise summary.

- Sentiment Analysis: Imagine a social media analyst who can quickly gauge the mood of thousands of posts.

- Runoff Prediction: Transformer, LSTM, and GRU deep learning methods for predicting runoff in a River basin, and enhanced LSTM and GRU with self-attention.

- Text Classification: Transformer architecture helps dynamically focus on different parts of the input sequence when producing the predictions for text classification.

Accessing Transformer Models

Transformer models are widely available through various machine learning libraries such as TensorFlow, PyTorch, and Hugging Face's Transformers library.

In TensorFlow, pre-trained models can be directly accessed through the tf.keras API. The TFAutoModel class can be used to load any transformer model from the TensorFlow Hub.

With PyTorch, the Hugging Face Transformers library provides a wide array of transformer models with pre-trained weights. These can be accessed and loaded by using the from_pretrained() method on the desired model class.

Besides pre-trained models, these libraries offer tools for fine-tuning the models for specific tasks, including built-in support for many prevalent NLP tasks, like text classification, named entity recognition, and question answering.

Although these models are potent, they demand significant computational resources. Therefore, it's advisable to use hardware acceleration, such as GPUs or TPUs, when working with these models.

When utilizing these models, it's also vital to consider the ethical implications. Transformer models, like all machine learning models, are only as good as the data they were trained on. If the training data contains biases, the model will likely reproduce those biases in its predictions.

Want to build an AI project with Tansformer Acrhitecture?

Navigating AI integration, from training models to deploying custom solutions, can be complex. Our team offers the expertise you need to streamline this process and achieve impactful results.

Seeking support for your AI projects? We're here to assist. From foundational model training to fine-tuning for your specific data, our consultants are ready to elevate your AI initiatives.

Don't miss out on the AI revolution due to uncertainties. Schedule a free consultation with our experts today and unlock the potential of AI for your business.

Act now. Transform tomorrow.