ChatGPT and OpenAI have taken the world by storm. This new technology has also put a strain on compliance and information security departments. Privacy policies, compliance standards, and data security are all significantly affected by ChatGPT's widespread adoption, yet organizations frequently overlook this despite the severe consequences.

Several companies have recently run into trouble through improper use of these tools. In Samsung's case, employees reportedly leaked vital trade secrets by pasting them into OpenAI's ChatGPT, and Apple reportedly restricted internal use over similar concerns. Italy’s data protection watchdog also temporarily banned ChatGPT over privacy concerns.

These incidents are definitely concerning. But does that mean you should miss out on reaping the benefits this tool offers?

OpenAI ChatGPT, OpenAI GPT3/4 API and Azure OpenAI Service: Same thing?

You may have heard about ChatGPT, GPT-3, GPT-4, and OpenAI. And if you are following the AI revolution, you have probably heard about Azure OpenAI Service too. But do they offer the same services? What are the differences?

OpenAI ChatGPT

This is the implementation of the GPT model optimized for conversation. It is designed to produce natural responses in a conversational setting. Conversational agents, chatbots, and virtual assistants are just some of the uses for the ChatGPT language model.

OpenAI GPT3/4

The OpenAI API is how programmers access and use the GPT-3 and GPT-4 models. Part of OpenAI's Generative Pre-trained Transformer (GPT) series, these models can generate, translate, and summarize text, along with many other natural language processing tasks. The API lets programmers harness GPT models within their own products.

Azure OpenAI Service

The Azure OpenAI Service is a feature of the Microsoft Azure cloud platform. Models built with OpenAI's GPT technology can be deployed and scaled on Azure with the help of this managed service. It gives developers a way to build and deploy scalable, cloud-hosted applications that use GPT models.

OpenAI GPT3/4 API and the Azure OpenAI Services are a good fit for B2B, Commercial & Enterprise applications. These services make the GPT models available to developers and businesses in various contexts. They provide the adaptability and scalability necessary to incorporate NLP capabilities into enterprise solutions.

On the other hand, OpenAI ChatGPT was developed primarily for use in chat settings. It has some B2B uses, but its primary purpose is to provide users with conversational experiences like chatbots and virtual assistants.

OpenAI vs Azure OpenAI Services

OpenAI's language models are primarily trained in the United States, but OpenAI does not disclose where the data is processed. At the time of writing, there are three Azure regions where you can deploy Azure OpenAI Service: East US, South Central US, and West Europe. Azure OpenAI's API access is on par with that of OpenAI's services.

While Microsoft manages the encryption keys used by Azure OpenAI Service by default, you can use your own keys to encrypt data stored in the service if you prefer. Azure OpenAI Service also supports private endpoints, which let you route and centrally filter all network communication to the service.

What do GPT, OpenAI API and Azure OpenAI service do with your Data?

Hear it from OpenAI here.

OpenAI and GPT-3/GPT-4

To enhance ChatGPT's NLP capabilities, OpenAI collects and analyzes your conversations. To preserve users' trust, it follows data management practices such as:

Data collection purpose

All data entered into ChatGPT is stored on OpenAI servers and used to improve the program's natural language processing capabilities. OpenAI is upfront about the data it collects and why it collects it. The primary purpose is to improve language models through user input and to enhance the user experience.

Data storage and retention

Data submitted by OpenAI users is stored securely and in accordance with OpenAI's data retention policies. Information is retained only for as long as required to accomplish the purpose for which it was collected. The data is anonymized or deleted after the retention period ends to protect users' privacy.

Collaborative data collection and use

Only with your permission or when required by law will your information be shared with outside parties. OpenAI will ensure that all data processors follow the same policies and safeguards when dealing with user information.

But again…

OpenAI's data processing is not the same for B2B and B2C audiences.

Data Collection and Data Processing: Azure OpenAI API services

Azure OpenAI deals with the following data:

Prompts and completions

Users can submit prompts and receive service-generated completions by using the completions (/completions, /chat/completions) and embeddings operations.
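To make the shape of a prompt submission concrete, here is a minimal sketch that assembles (but does not send) a chat completions request for an Azure OpenAI deployment. The resource name, deployment name, API version, and key placeholder are all assumptions for illustration; check the values for your own resource before using anything like this.

```python
import json


def build_chat_request(resource, deployment, api_version, user_text):
    """Assemble the URL, headers, and JSON body for a chat completions call.

    Nothing is sent over the network. All argument values are supplied by the
    caller; the names used in the example below are hypothetical.
    """
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    headers = {
        "Content-Type": "application/json",
        "api-key": "<YOUR-API-KEY>",  # placeholder, never hard-code a real key
    }
    body = {
        "messages": [{"role": "user", "content": user_text}],
        "max_tokens": 100,
    }
    return url, headers, json.dumps(body)


url, headers, body = build_chat_request(
    "my-resource", "my-deployment", "2023-05-15", "Summarize our privacy policy."
)
print(url)
print(body)
```

The prompt is everything in `messages`; the completion is what the service returns for it. Both count as data handled by the service.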

Data used for both training and testing

To fine-tune an OpenAI model, you can supply your training data as prompt-completion pairs.
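As a rough illustration, prompt-completion pairs are commonly stored as JSON Lines, one JSON object per line. The sketch below assumes that layout with `prompt` and `completion` keys; the example pairs are invented.

```python
import json


def to_jsonl(pairs):
    """Serialize (prompt, completion) tuples as one JSON object per line."""
    return "\n".join(
        json.dumps({"prompt": prompt, "completion": completion})
        for prompt, completion in pairs
    )


# Invented example pairs, for illustration only.
pairs = [
    ("Classify the ticket: 'I cannot log in' ->", " account_access"),
    ("Classify the ticket: 'I was charged twice' ->", " billing"),
]
print(to_jsonl(pairs))
```

Because this file leaves your environment when uploaded, it is worth reviewing it for confidential or personal data first.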

Data gained from a training session

After a model has been fine-tuned, the service outputs metadata on the job, such as tokens processed and validation scores at each step.

For example, the Azure OpenAI API processes your fine-tuning data this way:

The training data (prompt-completion pairs) submitted to the Fine-tunes API via the Azure OpenAI Studio is pre-processed for quality using automated tools, such as a data format check. The model training component of the Azure OpenAI platform then receives the training data. During training, the data is split into smaller batches, and the OpenAI model's weights are adjusted batch by batch.
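The two stages described above, a format check followed by batching, can be sketched locally. This is not Azure's actual pre-processing, only an assumed approximation: it checks that each JSON Lines record has non-empty string `prompt` and `completion` fields, then splits the valid records into fixed-size batches.

```python
import json

REQUIRED_KEYS = ("prompt", "completion")


def check_format(jsonl_text):
    """Return (valid_records, errors); errors are (line_number, reason) tuples."""
    valid, errors = [], []
    for line_no, line in enumerate(jsonl_text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            errors.append((line_no, "not valid JSON"))
            continue
        if not isinstance(record, dict) or any(
            not isinstance(record.get(key), str) or not record[key]
            for key in REQUIRED_KEYS
        ):
            errors.append((line_no, "missing or empty prompt/completion"))
            continue
        valid.append(record)
    return valid, errors


def batches(records, batch_size):
    """Yield consecutive slices of at most batch_size records."""
    for start in range(0, len(records), batch_size):
        yield records[start:start + batch_size]


sample = "\n".join([
    '{"prompt": "a", "completion": "b"}',
    "not json",
    '{"prompt": "c"}',
    '{"prompt": "d", "completion": "e"}',
])
valid, errors = check_format(sample)
print(len(valid), errors)
print([len(batch) for batch in batches(valid, 2)])
```

Running a check like this before upload catches malformed records early and gives you a natural point to audit what data is about to be shared.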

Concerns people have

Unauthorized Access of Confidential Information

A significant concern with AI systems like ChatGPT is that sensitive data may fall into the wrong hands.

Data breaches

Private conversation content could be compromised if an unauthorized party accessed the servers housing ChatGPT. This information could be anything from a person's name and address to their bank account numbers.

Vulnerabilities in the AI system

Artificial intelligence systems are susceptible to flaws, just like any other type of software. There is a risk that these flaws could compromise sensitive data.

Insider threats

ChatGPT could be vulnerable to intrusion from within the hosting company or service. Privileged insiders can abuse their access or leak confidential information on purpose.

Cross-referencing of data

The data used to train ChatGPT comes from various open-source and internet-based resources. The risk of identification or further investigation increases if a user discloses personally identifiable information in a conversation that can be cross-referenced with other sources.

Privacy concerns related to ChatGPT’s training

One privacy problem associated with ChatGPT's training is the possible presence of private or sensitive information in the training data. ChatGPT is trained on a massive corpus of text data gathered from all over the web. Despite efforts to anonymize and remove personally identifiable information from training data, some sensitive or personal information may still be included.

What made people doubt ChatGPT and Open AI?

Easy to use: Is it opening doors to hackers?

The convenience and availability of ChatGPT are both advantages and potential security and privacy risks. Here are some ways in which this can worry people.

Increased attack surface

Hackers and other bad actors are more likely to target ChatGPT as it gains popularity and distribution. The greater the number of users, the greater the number of people who could be attacked. Attackers could exploit security flaws, gain unauthorized access, or trick the AI into producing harmful material.

Social engineering and phishing

As a conversational tool that can generate human-like responses, ChatGPT could be used in social engineering attacks. Social engineering aims to trick people into doing something they wouldn't normally do, such as giving out private information or clicking on malicious links.

Spread of misinformation or harmful content

The internet serves as a training data source for ChatGPT, which then uses that information to generate responses. ChatGPT could accidentally produce or spread misleading, fraudulent, or malicious content, despite efforts to filter out such materials. The fallout from this could include:

- The dissemination of false information.

- The encouragement of unethical activity.

- Even the infliction of emotional distress on specific persons.

ChatGPT's False Negative and False Positive output

ChatGPT, like any AI system, can potentially give inaccurate results. It hallucinates.

False Negative

A false negative occurs when an AI fails to recognize a target or to offer the correct answer when it should have. For example, the system reports that an item is absent or fails to match criteria when, in fact, it does. Consequently, opportunities may be missed or incorrect data presented.

For instance, when asked about a specific event or piece of information, ChatGPT may incorrectly declare that it doesn't know something despite having seen it in its training data.

False Positive

A false positive is when the AI system incorrectly indicates the presence or fulfilment of something. This occurs when the system incorrectly reports that a specific condition has been met or returns an incorrect response.

For instance, when asked for medical advice, ChatGPT may provide an answer that reads like common sense but is actually quite dangerous. This becomes an issue if people take the AI's word for it instead of checking the answer or consulting experts.

False negatives and false positives undermine the accuracy and usefulness of AI systems.
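One way to put numbers on these two failure modes is to compare model answers against a small hand-labeled evaluation set. The sketch below assumes each answer has been reduced to a yes/no decision; the sample data is invented purely to show the arithmetic.

```python
def error_rates(expected, predicted):
    """Return (false_positive_rate, false_negative_rate) for boolean labels."""
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have the same length")
    tp = fp = tn = fn = 0
    for truth, guess in zip(expected, predicted):
        if truth and guess:
            tp += 1
        elif truth and not guess:
            fn += 1  # model said "no" when the answer was "yes"
        elif not truth and guess:
            fp += 1  # model said "yes" when the answer was "no"
        else:
            tn += 1
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    fnr = fn / (fn + tp) if (fn + tp) else 0.0
    return fpr, fnr


# Invented labels: True means "condition present".
expected = [True, True, False, False, True]
predicted = [True, False, True, False, True]
fpr, fnr = error_rates(expected, predicted)
print(f"false positive rate: {fpr:.2f}, false negative rate: {fnr:.2f}")
```

Tracking both rates over time shows whether a prompt change or a fine-tuned model actually reduces errors, rather than trading one kind for the other.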

Bias

Conversational replies generated by ChatGPT could be affected by the presence of private or sensitive information in the training data. Because private information could be unintentionally disclosed, saved, and associated with conversations, this raises concerns about users' right to privacy and confidentiality.

ChatGPT may also pick up biases or discriminatory tendencies from the training data without the developers' knowledge. This threatens the security and fairness of the system by producing answers that reinforce preconceived notions or display biased behaviour.

Dissecting OpenAI's Compliance Standards

OpenAI is committed to protecting user data, maintaining high-security standards, and complying with applicable privacy regulations, as evidenced by its compliance measures and certificates. OpenAI's goal in implementing these guidelines is to reassure users that their data is safe when using AI systems like ChatGPT.

CCPA (California Consumer Privacy Act)

The California Consumer Privacy Act (CCPA) is a piece of legislation in the United States that protects the privacy of individuals in California. OpenAI has implemented measures to fulfil CCPA obligations and safeguard the personal information of California residents.

GDPR (General Data Protection Regulation)

The General Data Protection Regulation (GDPR) is a sweeping piece of European Union (EU) privacy legislation. OpenAI has taken steps to ensure transparency, data minimization, and user control over their personal information, all required by the General Data Protection Regulation (GDPR).

SOC2 (Service Organization Control 2)

SOC2 is an auditing standard created by the AICPA. It assesses how service providers protect the security and privacy of customer information. The effectiveness of OpenAI's security and privacy controls has been evaluated through SOC2 audits.

SOC3 (Service Organization Control 3)

A summary of an organization's security and privacy controls, SOC3 is a condensed version of the more detailed SOC2 report. Its intended audience is the general public. OpenAI may provide a Service Organization Controls 3 (SOC3) report to prove it follows best practices and industry standards.

Azure OpenAI’s Compliance Offerings: Same as OpenAI or Better?

Azure OpenAI Service has additional compliance offerings.

CSA STAR Attestation

CSA STAR Attestation verifies a cloud service provider's security controls. It helps customers judge the provider's security measures. Azure OpenAI can be covered by a CSA STAR Attestation.

ISO 27001:2013

ISO 27001 is the international standard for information security management systems (ISMS). It specifies what must be done to establish, implement, maintain, and continually improve an organization's information security management. When it comes to security, Azure OpenAI can follow ISO 27001.

ISO 27017:2015

Cloud service providers and their customers can benefit from the information security controls outlined in ISO 27017. It supplements ISO 27001 with security measures tailored to the cloud. Azure OpenAI can be aligned with ISO 27017 for cloud-based security.

ISO 27018:2019

ISO 27018 is a standard for cloud service providers that emphasizes user privacy. Specifically, it details best practices for securing personally identifiable information (PII) in public cloud services. ISO 27018 can be implemented in Azure OpenAI to show that the platform respects user privacy.

ISO 27701:2019

This extension to ISO 27001 addresses privacy concerns. It outlines the requirements and guidelines for establishing a Privacy Information Management System (PIMS). ISO 27701 can be used to improve Azure OpenAI's privacy management.

HIPAA BAA

HIPAA, the Health Insurance Portability and Accountability Act, mandates safeguards for protected health information (PHI). Business Associate Agreements (BAAs) between Microsoft and covered entities facilitate HIPAA compliance when Azure OpenAI handles PHI.

PCI 3DS

Online credit card transactions are protected by the PCI 3-D Secure (3DS) standard established by the Payment Card Industry (PCI). It strengthens the security of card transactions and helps fight fraud. PCI 3DS coverage supports the use of Azure OpenAI in environments that handle payments.

Germany C5

The German Federal Office for Information Security (BSI) created C5, a cloud security standard used in Germany. It assesses cloud service security against a set of established criteria. Azure OpenAI can be audited against C5 to show that it meets German security requirements.

GPT and GDPR

The General Data Protection Regulation (GDPR) is an EU-mandated set of rules that gives EU citizens more say over how their data is used online, including the right to erase or correct it. The purpose of the law is to promote openness in how businesses and consumers interact regarding data collection and storage.

GDPR is all about securing personal information online. A company's data collection methods, timing, and security measures must all be made public. Any time a user wants, they can ask for their information to be updated or deleted. These rules are extremely stringent and are widely hailed as the most significant alteration to European Union privacy regulations in history.

But here is the news

On March 1, 2023, OpenAI updated its data usage and retention policies in two major ways.

- Customers' API submissions will only be used for model training or improvement if they explicitly opt in.

- Sharing information is voluntary for users. For abuse and misuse monitoring purposes, API data will be stored for a maximum of 30 days before being deleted (except where required by law).

These changes to OpenAI's data policy show that the company takes the privacy and security of its users' information seriously. OpenAI's updated features and procedures are designed to give users and companies more say over their data.

Update On Mar 1, 2023

True, ChatGPT is not confidential. However, OpenAI does not use the information you provide through the GPT-4 API to develop or improve new models.

According to its policies, OpenAI keeps API data for 30 days to detect and prevent misuse. Access to this information is restricted to a small group of authorized OpenAI employees and specialized third-party contractors bound by strict confidentiality and security obligations to monitor and verify any suspected cases of abuse. OpenAI may still employ content classifiers to raise the alarm if data is used maliciously.

How does Azure OpenAI API keep your data safe?

Azure's policies go beyond privacy and data concerns. Responsible AI principles guide Microsoft's Transparency Notes on Azure OpenAI and descriptions of other products. These notes aim to shed light on the inner workings of Microsoft's AI technology, the options available to system owners that affect system performance and behavior, and the significance of considering all aspects of the system.

Don’t want to share data for OpenAI’s Training? Try this!

If you don’t want OpenAI to use your data to train its models, simply fill out this form.

Read the OpenAI Data Controls FAQ here.

How to delete your chats on ChatGPT?

Single Chat Delete

If you want to delete a single chat on ChatGPT, follow this step:

- Select the chat you wish to remove from your history, and then click the trash can icon.

Bulk Conversation Delete

If you want to delete conversations in bulk, follow these steps:

- Near your email address, you will find three dots. Click on them.

- Click on “Clear Conversation”.

How to stop ChatGPT from saving your chats?

- Open the chat menu by clicking on the three dots near your email address.

- Click on “Settings”.

- Click on “Data Control”.

- Turn off the toggle.

How to minimize the false negative and false positive results of OpenAI GPT and Azure OpenAI services?

The Azure OpenAI and GPT-3/4 models have already been pre-trained on publicly available internet text. If you give a model a few examples in the prompt, it can usually figure out what you want it to do and produce a plausible completion. This is commonly called "few-shot learning."

By training on many more examples than can fit in the prompt, fine-tuning improves upon few-shot learning and enables better performance across various tasks. Once a model has been fine-tuned, examples in the prompt are no longer required. This helps keep costs down and makes faster requests possible.
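A few-shot prompt is simply an instruction followed by a handful of worked examples and then the new input. The sketch below shows one possible layout; the `Text:`/`Label:` format and the example tickets are assumptions, not a required structure.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Combine an instruction, labeled examples, and a new query into one prompt."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Text: {text}", f"Label: {label}", ""]
    # The prompt ends with an open "Label:" cue for the model to complete.
    lines += [f"Text: {query}", "Label:"]
    return "\n".join(lines)


# Invented examples, for illustration only.
prompt = build_few_shot_prompt(
    "Classify each support ticket as 'billing' or 'technical'.",
    [
        ("I was charged twice this month.", "billing"),
        ("The app crashes when I open settings.", "technical"),
    ],
    "My invoice shows the wrong amount.",
)
print(prompt)
```

Keep in mind that every example placed in the prompt is sent to the service, so the examples themselves should not contain confidential data.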

In a nutshell, fine-tuning consists of the following steps:

- Prepare your training data and upload it.

- Train a new, fine-tuned model.

- Put your fine-tuned model to use.

- Improve your prompt engineering.

Best prompt engineering practices for better results

- Be specific. Leave as little room for interpretation as possible. Limit the available options.

- Be descriptive. Use analogies.

- Double down. Repeating yourself to the model may be necessary at times. Instructions, cues, and so on can be provided both before and after the main content.

- Keep things in order. The model's output may change depending on the order in which you present information. The results can vary depending on whether the instructions are given before or after the content ("summarize the following..." vs "summarize the above..."). The order of few-shot examples can matter too. This tendency is known as recency bias.
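The "repeat yourself" and "order matters" practices above can be combined in a small prompt template: state the instruction first, fence off the content with markers, and optionally restate the instruction at the end. The marker string and the `Reminder:` wording are arbitrary choices made for this sketch.

```python
def build_prompt(instruction, content, repeat_instruction=True):
    """Place the instruction before the content and, optionally, again after it."""
    parts = [instruction, "", "---", content, "---"]
    if repeat_instruction:
        # Restating the instruction last takes advantage of recency bias.
        parts += ["", f"Reminder: {instruction}"]
    return "\n".join(parts)


prompt = build_prompt(
    "Summarize the text between the markers in one sentence.",
    "Azure OpenAI Service supports private endpoints and customer-managed keys.",
)
print(prompt)
```

Comparing outputs with `repeat_instruction` on and off is a quick way to see how much ordering affects results for your own prompts.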

To stay updated on OpenAI’s security updates

Subscribe to OpenAI’s Trust Center Updates.

- Visit https://trust.openai.com/

- Click on subscribe

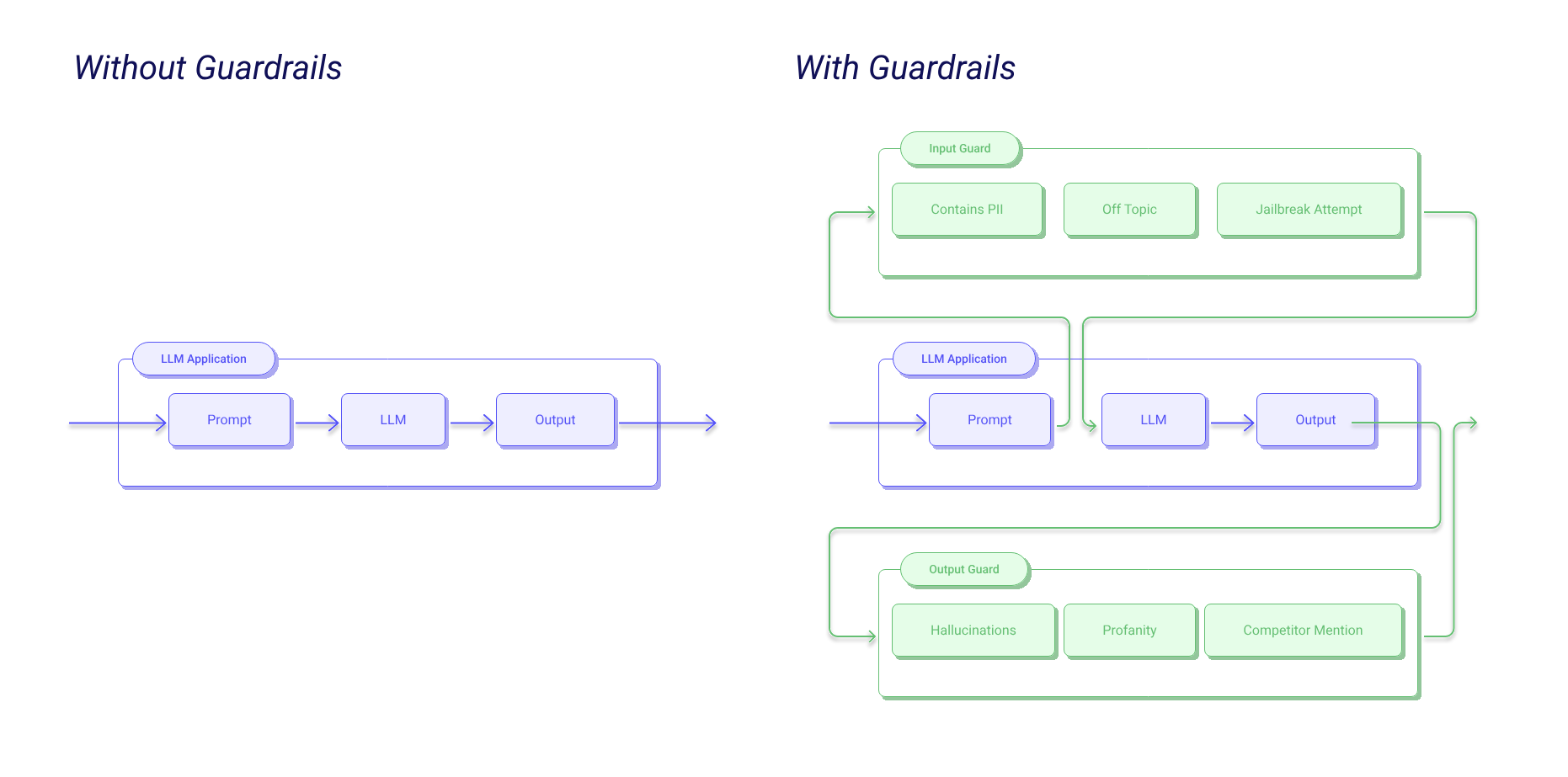

What can your technical team do to protect your production LLM installations?

ChatGPT is not a confidential service, even though OpenAI promises not to save your data if you do not allow it. You may still have doubts about your data protection.

We understand your concerns about GPT and any other LLM installation. But there are more ways to protect your business. Named Entity Recognition (NER) is one of them, and it can be used to remove PII.

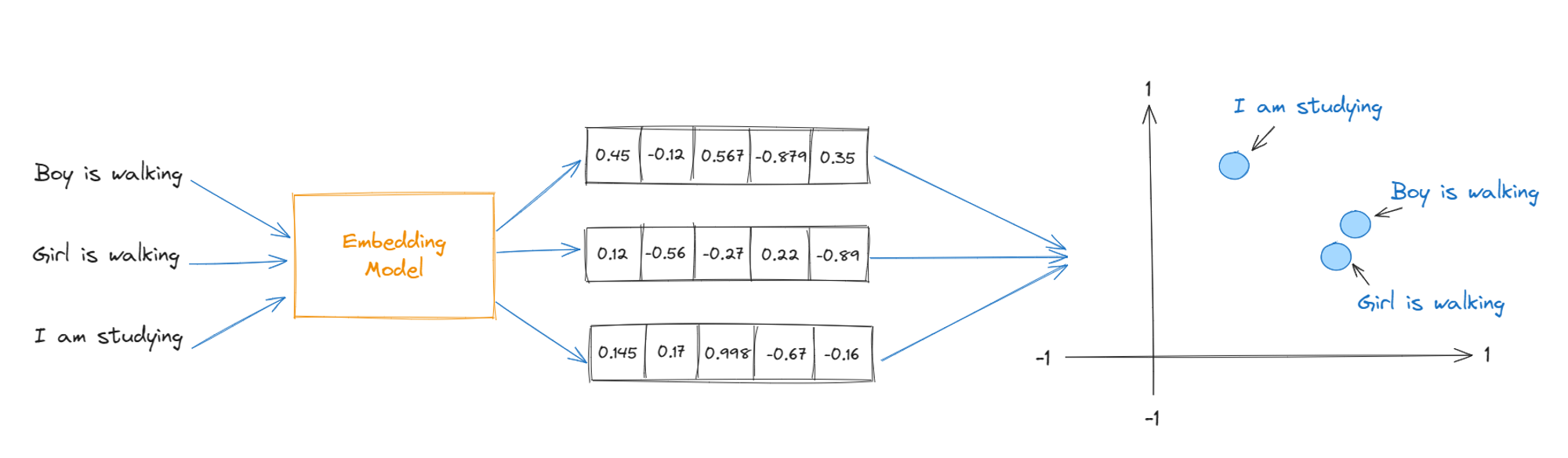

Named Entity Recognition (NER) is a foundational task in natural language processing (NLP) that involves the detection and labeling of proper names (such as those of people, places, and dates) within a text. NER aids in information extraction and contextual comprehension by correctly identifying and classifying these entities.

Multiple methods exist for carrying out NER. One option is using deep learning architectures adapted for NER tasks, such as BERT (Bidirectional Encoder Representations from Transformers). These models learn from extensively annotated data to accurately identify and categorize named entities. They are adept at handling nuanced language structures and variations in how entities are mentioned.

We follow these general steps to integrate NER with GPT:

Before doing anything with the input text, we identify and extract named entities using a named entity recognition (NER) model or technique. This enables us to recognize the names of people, groups, places, and dates of interest.

We alter the input by substituting generic placeholders or tags for the identified named entities, thereby hiding their identities. This is a helpful step if we don't want GPT to come up with certain names or locations.

We use the named entities as GPT prompts. We can direct the generated responses or narrow the conversational context by constructing prompts that include particular entities.

After the text has been generated with GPT, it can be subjected to NER once more to identify and remove any redundant or irrelevant named entities. This aids in making sure the generated responses are consistent and high-quality.

By combining NER with GPT, we can manage and manipulate the named entities in the input text and generate more accurate, context-appropriate responses. This method is beneficial when we need to tailor or control GPT results according to particular classes of entities.
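The redaction step can be sketched without any external dependencies. The example below uses two simple regular expressions as a stand-in for a real NER model, so it only catches email addresses and phone-like numbers; names such as "Jane" would need an actual NER model. The patterns and the placeholder format are assumptions made for illustration.

```python
import re

# Regex stand-ins for a NER model; a production setup would use a trained model.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text):
    """Replace detected entities with placeholders; return (text, mapping)."""
    mapping = {}   # placeholder -> original value
    counters = {}  # label -> number of placeholders issued so far

    def make_replacer(label):
        def replace(match):
            value = match.group(0)
            # Reuse the same placeholder when a value appears more than once.
            for placeholder, original in mapping.items():
                if original == value:
                    return placeholder
            counters[label] = counters.get(label, 0) + 1
            placeholder = f"[{label}_{counters[label]}]"
            mapping[placeholder] = value
            return placeholder
        return replace

    for label, pattern in PATTERNS.items():
        text = pattern.sub(make_replacer(label), text)
    return text, mapping


redacted, mapping = redact("Contact Jane at jane.doe@example.com or +1 555-123-4567.")
print(redacted)
print(mapping)
```

Only the redacted text would be sent to the model, while the mapping stays inside your own environment. The same `redact` function can be run again on the model's output to catch any entities that appear in the generated text.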

This article was written after extensive research and a collaborative effort of our technical, legal and compliance teams. So if you have any questions regarding ChatGPT’s data and privacy or anything else, book a free call here.
