Microsoft Shares fall! On 25th July 2023, Microsoft's shares went down after the company said that growth in some parts of its business had slowed down. This was despite Microsoft's investments in AI, such as its partnerships with OpenAI and Meta.

But was it a good decision for Microsoft to invest in AI and LLMs? How has that investment shaped the AI wave and the LLM industry? Let’s dig deep into this!

Are Microsoft's good days gone?

Even though the economy is slowing down, Microsoft made $56.2 billion in revenue, more than Wall Street expected. But its most recent earnings report showed that revenue growth for its cloud service, Azure, had slowed: Azure's revenue grew by only 26% in the fourth fiscal quarter, compared to 27% in the previous quarter.

The most recent earnings report is especially noteworthy because we are at a pivotal point in the AI arms race. Tech firms' valuations have risen on the back of heavy spending on AI-enabled technology, such as the chatbot in Microsoft's updated Bing search engine. Many people see this quarter as a key test of whether these companies can deliver on their AI promises.

Microsoft has increased its capital spending to build AI services for business customers and to add assistant features to apps like Word and Outlook. These extra costs have pushed down the company's cloud profit margin.

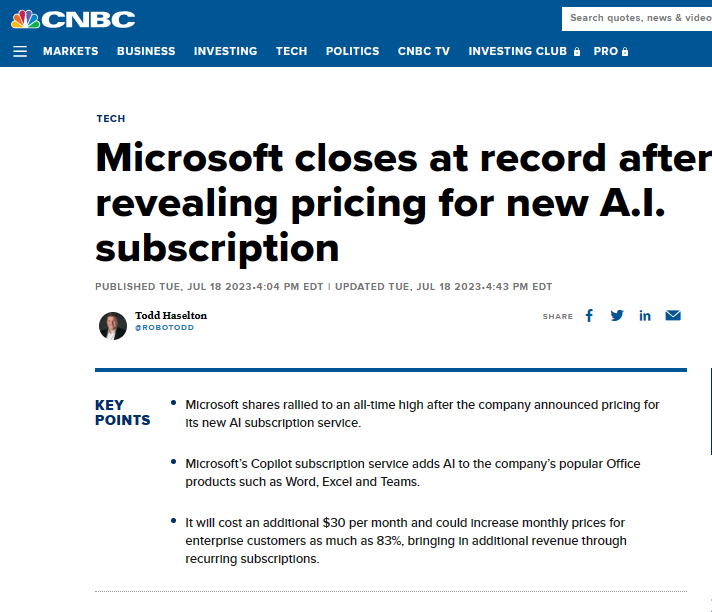

Microsoft recently said that its Copilot assistant for Microsoft 365 apps would cost an extra $30 per user per month on top of existing subscription prices, but it did not say when this charge would start. Amy Hood, Microsoft's chief financial officer, said that revenue from AI services would grow gradually as Azure AI tools gain adoption and Copilots like the one for Microsoft 365 become more widely used. The impact is expected to be larger in the second half of the current fiscal year, which ends in June 2024.

Companies that sell workplace software, like Atlassian, are rushing to add generative AI features to their products, so any delay in bringing these features to Microsoft's important Office suite could be a missed opportunity for growth. OpenAI's ChatGPT, which runs on Azure, has attracted enormous attention since its launch in late 2022 and is a prominent example of generative AI.

LLM Industry: The Past and Present So Far

Much of today's progress in natural language processing can be traced back to the creation of large language models. Starting with GPT-1 in 2018, OpenAI released a series of models that demonstrated the potential of large-scale pre-training followed by fine-tuning for language generation. Its successor, GPT-2, then drew wide attention for its remarkable text-generation abilities.

However, it wasn't until GPT-3 arrived in 2020 that LLMs truly took off. With 175 billion parameters, GPT-3 produced remarkably natural and coherent text and demonstrated what LLMs could do in many contexts, including writing, translation, chat, and virtual assistance, opening new avenues for researchers and developers in natural language processing.

GPT-4, the fourth model in the Generative Pre-trained Transformer series, later caused a stir in the AI world. Building on GPT-3's work, it promises better language generation and broader capabilities, and it attempts to fix flaws in earlier models, such as biased outputs and limited control over the generated text.

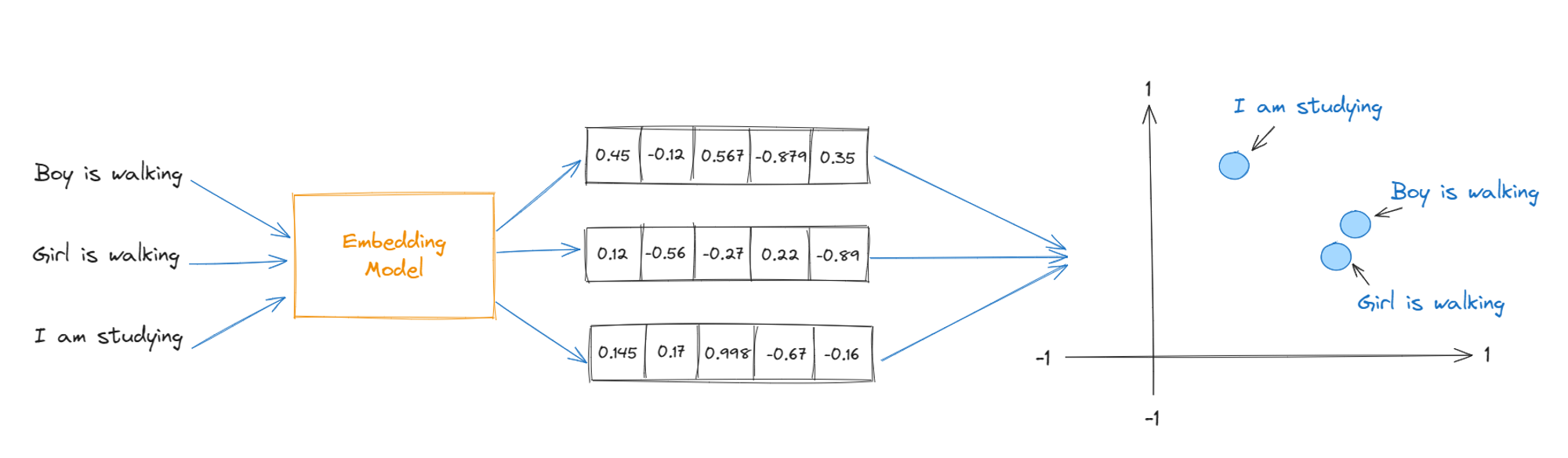

LLMs can be broadly grouped into three main types: pre-trained LLMs, fine-tuned LLMs, and multimodal LLMs.

Pre-trained models, such as GPT-3/GPT-3.5, T5, and XLNet, learn a wide range of linguistic structures and patterns through exposure to very large datasets.

Fine-tuned models like BERT, RoBERTa, and ALBERT are pre-trained on large datasets and then fine-tuned on smaller, task-specific datasets, which makes them excel at tasks such as sentiment analysis, question answering, and text classification. They are widely used in applications that need domain-specific language models, such as industry-specific tools.

Multimodal models like CLIP and DALL-E combine text with other modalities such as images: CLIP learns to match images with text descriptions, and DALL-E generates images from text prompts.

According to Bloomberg Intelligence (BI) projections, generative AI's share of total spending on IT hardware, software, services, advertising, and gaming will grow from less than 1% today to 10% by 2032. The largest drivers of revenue growth will be generative AI infrastructure as a service used to train LLMs ($247 billion), digital ads powered by the technology ($192 billion), and specialized generative AI assistant software ($89 billion). On the hardware side, AI servers ($132 billion), AI storage ($93 billion), computer vision AI products ($61 billion), and conversational AI devices ($108 billion) will be the primary revenue drivers.
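To put these figures in perspective, the short Python sketch below simply tallies the 2032 segment projections quoted above, in billions of US dollars. The two groups follow the two sentences of the paragraph (the three segments said to lead revenue growth, then the four primary drivers); the dictionary keys are our own shorthand labels, and the totals are plain sums of the article's numbers, not additional projections.

```python
# 2032 segment projections quoted in the text, in billions of US dollars.
# Keys are shorthand labels for the segments named there.
GROWTH_LEADERS = {
    "genai_infrastructure_as_a_service": 247,
    "genai_digital_ads": 192,
    "genai_assistant_software": 89,
}
PRIMARY_DRIVERS = {
    "ai_servers": 132,
    "ai_storage": 93,
    "computer_vision_ai_products": 61,
    "conversational_ai_devices": 108,
}


def total(segments: dict[str, int]) -> int:
    """Sum the projected revenue of a group of segments."""
    return sum(segments.values())


if __name__ == "__main__":
    leaders = total(GROWTH_LEADERS)
    drivers = total(PRIMARY_DRIVERS)
    print(f"Growth leaders: ${leaders}B")
    print(f"Primary drivers: ${drivers}B")
    print(f"All named segments: ${leaders + drivers}B")
```

Running it prints $528B, $394B, and $922B. These cover only the segments named in the paragraph, not the whole projected market.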

How has the investment in OpenAI impacted the global scenario?

Microsoft put $1 billion into OpenAI in 2019. At the time, the deal was not seen as a big one and drew little attention amid a busy startup market and hype around other high-value industries such as electric vehicles, logistics, and aerospace.

Three years later, the startup world has changed significantly. Startup funding has dropped sharply, largely because fast-growing but unprofitable tech companies have lost value on the stock market. One area has stood out, though: artificial intelligence, especially generative AI, the family of technologies that can automatically generate text, images, and audio.

OpenAI has become the most exciting private company in this field. The launch of ChatGPT, a chatbot known for its human-like answers to a wide range of topics, went viral and helped OpenAI's image even more.

Microsoft's first investment in OpenAI, which drew little attention at the time, is now a major topic of conversation among venture capitalists and public-market investors. According to reports, Microsoft's investments in OpenAI have reached a staggering $13 billion, and the startup is now valued at about $29 billion.

But Microsoft's role goes beyond funding. The tech giant is OpenAI's sole provider of computing power for its research, products, and developer APIs. As many startups and large companies, including Microsoft itself, have rushed to integrate OpenAI's models into their products, this has placed a huge load on Microsoft's cloud servers.

It's important to note that after Microsoft recoups its investment, it will still receive a share of OpenAI LP's profits up to an agreed-upon cap, with the rest going to OpenAI's nonprofit organization. An OpenAI representative provided this information; a Microsoft representative declined to comment. This mutually beneficial relationship has become an important factor in how investors and other stakeholders judge the future value of Microsoft stock.

Microsoft vs. the Other Big Names in the LLM Battle: Google and Meta

Google’s PaLM

PaLM is a language model that showcases the use of Google's Pathways system for large-scale training on 6144 TPU v4 chips, the largest TPU-based system configuration used for training at the time. Training used data parallelism at the Pod level across two Cloud TPU v4 Pods, plus standard data and model parallelism within each Pod. This is a significant increase in scale compared to previous large language models (LLMs), which were trained on smaller configurations.
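The two-level layout can be pictured as a mapping from a flat chip index to (Pod, data-parallel, model-parallel) coordinates. The Python sketch below is only an illustration of that bookkeeping, not Google's Pathways code; the 12-way model-parallel degree in the usage example is an assumption, chosen because it divides the 3,072 chips in each Pod evenly.

```python
from typing import NamedTuple


class ChipCoord(NamedTuple):
    pod: int    # which TPU v4 Pod (pod-level data parallelism)
    data: int   # data-parallel index within the Pod
    model: int  # model-parallel shard index within the Pod


def mesh_shape(total_chips: int, pods: int, model_parallel: int) -> tuple[int, int, int]:
    """Return (pods, data-parallel degree per Pod, model-parallel degree)."""
    if total_chips % pods:
        raise ValueError("chips must divide evenly across Pods")
    per_pod = total_chips // pods
    if per_pod % model_parallel:
        raise ValueError("model-parallel degree must divide the chips in each Pod")
    return pods, per_pod // model_parallel, model_parallel


def chip_coord(chip: int, shape: tuple[int, int, int]) -> ChipCoord:
    """Map a flat chip index to its (pod, data, model) position in the mesh."""
    pods, data, model = shape
    if not 0 <= chip < pods * data * model:
        raise IndexError(f"chip {chip} is outside the mesh")
    pod, within_pod = divmod(chip, data * model)
    data_idx, model_idx = divmod(within_pod, model)
    return ChipCoord(pod, data_idx, model_idx)


if __name__ == "__main__":
    # Hypothetical split: the 12-way model parallelism is an assumption.
    shape = mesh_shape(total_chips=6144, pods=2, model_parallel=12)
    print(shape)  # (2, 256, 12)
    print(chip_coord(3072, shape))  # first chip of the second Pod
```

Chips that share a `pod` and `data` index split one model replica's work between them (model parallelism), while chips that differ in `pod` or `data` process different slices of each training batch (data parallelism).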

PaLM achieves an impressive training efficiency of 57.8% hardware FLOPs utilization, the highest reported for LLMs at this scale at the time. The authors attribute this efficiency to a combination of the parallelism strategy and a reformulation of the Transformer block that computes the attention and feedforward layers in parallel, allowing them to benefit from TPU compiler optimizations.
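As described in the PaLM paper, the difference between a standard ("serialized") Transformer block and PaLM's parallel formulation can be written as follows, where x is the block input and y its output:

```latex
% Standard (serialized) Transformer block: the MLP waits for the attention output
y = x + \mathrm{MLP}\big(\mathrm{LayerNorm}(x + \mathrm{Attention}(\mathrm{LayerNorm}(x)))\big)

% Parallel formulation: attention and MLP both read the same normalized input
y = x + \mathrm{MLP}(\mathrm{LayerNorm}(x)) + \mathrm{Attention}(\mathrm{LayerNorm}(x))
```

Because both branches consume the same LayerNorm(x), the paper notes that their input matrix multiplications can be fused, which is where the compiler optimizations come in.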

PaLM was trained on a diverse dataset of English and multilingual text drawn from web documents, books, Wikipedia, conversations, and GitHub code. A special "lossless" vocabulary was created that preserves whitespace, splits out-of-vocabulary Unicode characters into UTF-8 bytes, and splits numbers into individual digit tokens.
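The properties of a "lossless" vocabulary can be illustrated with a toy tokenizer. This Python sketch is not PaLM's tokenizer: the tiny `VOCAB` is hypothetical, and the byte-fallback rule assumes, as the PaLM paper describes, that unknown characters are broken into UTF-8 bytes. The `detokenize` helper shows why the scheme is lossless: the original text, whitespace included, can always be rebuilt exactly.

```python
import re
import string

# Hypothetical toy vocabulary: a few whole words plus ASCII letters and punctuation.
# A real LLM vocabulary is learned from data and is far larger.
VOCAB = {"price", "is", "the"} | set(string.ascii_letters) | set(string.punctuation)

# Split text into whitespace runs, single digits, letter runs, or any other character.
PIECE_RE = re.compile(r"\s+|\d|[^\W\d_]+|.", re.DOTALL)
BYTE_RE = re.compile(r"<0x([0-9A-F]{2})>")


def tokenize(text: str) -> list[str]:
    tokens: list[str] = []
    for piece in PIECE_RE.findall(text):
        if piece.isspace() or piece.isdigit() or piece in VOCAB:
            # Whitespace is kept verbatim, every digit is its own token,
            # and known words stay whole.
            tokens.append(piece)
            continue
        for ch in piece:
            if ch in VOCAB:
                tokens.append(ch)
            else:
                # Byte fallback: unknown characters become UTF-8 byte tokens.
                tokens.extend(f"<0x{b:02X}>" for b in ch.encode("utf-8"))
    return tokens


def detokenize(tokens: list[str]) -> str:
    out = bytearray()
    for tok in tokens:
        match = BYTE_RE.fullmatch(tok)
        out.extend(bytes([int(match.group(1), 16)]) if match else tok.encode("utf-8"))
    return out.decode("utf-8")
```

For example, `tokenize("The price is 42 €")` keeps "price" and "is" whole, emits "4" and "2" as separate tokens, and encodes the euro sign as the three byte tokens `<0xE2>`, `<0x82>`, `<0xAC>`.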

Meta’s reaction

Meta's Llama 2 license requires companies with more than 700 million monthly active users to obtain a separate license from Meta. This restriction makes it harder for other tech giants to build on the model, and Meta has not fully explained why it was added. The acceptable use policy that ships with the model forbids using it to facilitate illegal or abusive behavior or to target or harass vulnerable groups. When asked what would happen if someone misused Llama 2, Meta did not answer.

The most recent release of WizardLM is an AI system that can be used much like ChatGPT, namely to carry out complex instructions. Llama 2 variants currently make up eight of the ten most popular models on Hugging Face, and many of them are tuned to produce natural, conversational responses.

How significant is this AI investment for Microsoft?

Microsoft wants to be on the cutting edge of AI development because the company recognizes the enormous social potential of this technology. Microsoft's investment in OpenAI gives the company access to the latest developments in artificial intelligence research and technology, which in turn may be leveraged to improve Microsoft's own offerings.

The growing importance of AI in the IT sector is another reason for Microsoft's involvement with OpenAI. As more businesses build AI into their products and services, it is increasingly crucial for Microsoft to keep its competitive edge in this field, and tapping into OpenAI's top AI expertise and technology helps it strengthen its products.

Microsoft's investment in OpenAI makes strategic sense and could bring substantial financial returns. The resulting AI research can be applied both to improving existing products, like the Microsoft Office suite, and to creating new ones. This dedication to cutting-edge AI research helps Microsoft maintain its position as a market leader in the technology sector.

Microsoft's commitment to OpenAI is partly due to the belief that AI is key to resolving many of the world's most intractable issues. OpenAI's mission is to build AI that benefits the world, for example by helping combat climate change, improve healthcare, and reduce global poverty. By funding OpenAI, Microsoft can make a meaningful contribution to these efforts.

It's important to remember that Microsoft's support for OpenAI goes beyond money. The two companies also maintain a close partnership that enables joint R&D. For example, Microsoft has used OpenAI's models to enhance Azure Cognitive Services, its set of APIs for natural language processing, computer vision, and speech recognition. This partnership helps Microsoft advance its own AI research and development.

Five examples of Microsoft using OpenAI:

Azure Cognitive Services

Microsoft has begun incorporating OpenAI's models into Azure Cognitive Services, a set of application programming interfaces (APIs) for tasks such as natural language processing (NLP), computer vision (CV), and speech recognition. This lets developers quickly and easily add AI features to their software.
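As a rough illustration of what this integration looks like for a developer, the sketch below builds (but does not send) a chat-completion request for the Azure OpenAI Service REST API using only Python's standard library. The resource name, deployment name, and key are hypothetical placeholders, and the default `api-version` value is an assumption; the versions a given resource accepts may differ.

```python
import json
import urllib.request


def build_chat_request(resource: str, deployment: str, api_key: str,
                       messages: list[dict[str, str]],
                       api_version: str = "2023-05-15") -> urllib.request.Request:
    """Build a POST request for an Azure OpenAI chat-completions deployment."""
    url = (f"https://{resource}.openai.azure.com/openai/deployments/"
           f"{deployment}/chat/completions?api-version={api_version}")
    body = json.dumps({"messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request(
        resource="my-resource",          # hypothetical resource name
        deployment="my-gpt-deployment",  # hypothetical deployment name
        api_key="<your-key>",            # placeholder, never hard-code real keys
        messages=[{"role": "user", "content": "Summarize this support ticket."}],
    )
    print(req.full_url)
    # send(req) would perform the network call with a real resource and key.
```

Separating request construction from sending keeps the example testable without network access or credentials.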

Natural language understanding

To facilitate adding natural language understanding capabilities to apps, Microsoft has incorporated OpenAI's GPT-3 language model into its Language Understanding service.

Robotics

Microsoft and OpenAI have been collaborating to create AI-enabled robots for use in logistics.

Gaming

Microsoft has been exploring the use of OpenAI's AI models to enhance the gaming experience on Xbox, for example by making non-player characters (NPCs) more exciting and engaging.

Healthcare

Microsoft and OpenAI have been collaborating on AI-driven healthcare solutions that can help diagnose and treat patients, for instance by analyzing medical images for abnormalities such as tumors and flagging them for clinicians.

But what about the concerns around AI?

Whether it's Google’s AI or Microsoft's, there are some real concerns about the technology.

Concerns about AI

Reducing bias in AI training data is important because bias affects how accurately and fairly a system works. For example, the ImageNet database contains more white faces than non-white faces, and facial recognition systems trained on such skewed data are more likely to make mistakes on underrepresented groups. It is therefore important to watch for possible bias when training AI so that it accurately reflects society.

The growing use of AI raises concerns about control and decision-making. Some AI systems, like those in self-driving cars or high-frequency trading, must make split-second decisions without human help. Striking the right balance between AI autonomy and human oversight is a tough ethical problem.

Privacy has long been an issue with AI. Training AI requires data, but problems arise when data is collected from children or used without people's consent. Regulations must protect user data and address how companies make money from it.

As big companies like Amazon, Google, and Facebook deploy AI widely, people worry about shifts in power and monopolies. Countries like China also have big plans for AI, which raises questions about global fairness and how wealth is shared in the AI race.

Another ethical problem is ownership of AI-generated content. Who owns the rights to music, text, or deepfake videos created by AI? Solving this problem will require clearer rules and roles.

AI's impact on the environment should not be forgotten either. Training AI models uses a lot of energy, which raises questions about how ethically the technology can be used to solve urgent world problems.

Lastly, the progress of AI could leave people feeling powerless and make us question what it means to be human. As AI takes over more jobs, we need to learn how to live with smart tools in a way that respects both people and technology.

Responsible AI research

Microsoft's approach to AI is based on a commitment to developing AI responsibly. The company's mindset rests on principles such as fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. Microsoft believes it is important to use AI to make the world a better place, and it tries to put these principles into practice throughout the organization.

Microsoft has assembled a community of experts from different parts of the company to make sure these AI rules and principles are followed. Its members work in policy, engineering, research, sales, and other core functions, and they make sure responsible AI considerations are built into every part of the business.

As part of its responsible AI process, Microsoft has its AI systems reviewed in depth by a multidisciplinary team of experts. These reviews help identify potential problems and ways to fix them, which lets Microsoft improve datasets, apply filters that block harmful content, and use techniques that produce more useful and diverse results.

Microsoft also deploys AI technologies gradually and deliberately. It may start with limited previews for a few customers with well-defined use cases, to confirm that responsible AI controls work in practice before making the technology available more broadly.

Microsoft also believes that working with people outside the company is important. It works with governments, universities, civil society, and businesses to advance the state of the art and address the problems and risks that come with AI technology.

What can we expect next?

We might expect two new developments:

Models that can generate their own training data

LLMs can potentially bootstrap their own intelligence by generating new written content based on the knowledge they have absorbed from external sources during training. They can then use this generated content as additional training data to improve their performance on various language tasks. This approach could be crucial as the world faces a potential shortage of text training data.
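The idea can be sketched as a simple loop: train, generate new text, keep only the samples that pass a quality filter, and retrain on the enlarged dataset. This Python sketch is a hypothetical illustration, not a published method; the bigram "model", the greedy generator, and the length-based filter are toy stand-ins for a real LLM, sampler, and quality check. The filtering step is our assumption, since the text does not say how generated content would be vetted.

```python
from collections import Counter, defaultdict
from typing import Callable

Model = dict[str, Counter]  # toy bigram model: word -> counts of next words


def train(corpus: list[str]) -> Model:
    """Fit a toy bigram model by counting word-to-next-word transitions."""
    model: Model = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            model[current][nxt] += 1
    return model


def generate(model: Model, max_words: int = 6) -> list[str]:
    """Greedily extend each known start word with its most frequent successor."""
    samples = []
    for start in sorted(model):
        words = [start]
        while len(words) < max_words and model.get(words[-1]):
            words.append(model[words[-1]].most_common(1)[0][0])
        samples.append(" ".join(words))
    return samples


def bootstrap(seed: list[str], rounds: int,
              keep: Callable[[str], bool]) -> tuple[Model, list[str]]:
    """Repeatedly add filtered, previously unseen generations to the training data."""
    data = list(seed)
    model = train(data)
    for _ in range(rounds):
        new = [s for s in generate(model) if keep(s) and s not in data]
        if not new:
            break  # nothing new passed the filter; stop early
        data.extend(new)
        model = train(data)
    return model, data


if __name__ == "__main__":
    seed = ["the cat sat on the mat", "a dog sat on the rug"]
    model, data = bootstrap(seed, rounds=3, keep=lambda s: len(s.split()) >= 4)
    for sentence in data[len(seed):]:
        print("generated:", sentence)
```

With a toy model like this, the loop mostly recycles what it already knows, which hints at why real systems would need strong filters before trusting self-generated data.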

Models that can fact-check themselves

Current LLMs, like ChatGPT, suffer from factual unreliability and often produce inaccurate or false information. Efforts are underway to mitigate this issue by allowing LLMs to retrieve data from external sources and provide references and citations for their responses. Models like WebGPT and Sparrow have shown promising results in providing more accurate and trusted information.
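The retrieval-and-citation idea can be sketched in a few lines: find the passages that best match a question and return them with their source IDs, so every statement can be traced back to a source. This is a toy keyword-overlap retriever in Python, not how WebGPT or Sparrow work internally; the documents and IDs are hypothetical, and a real system would pass the retrieved passages to the LLM as context rather than quoting them directly.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    doc_id: str  # hypothetical source identifier used as the citation
    text: str


def _words(text: str) -> set[str]:
    """Lowercased alphanumeric words, used for simple keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[Doc], k: int = 2) -> list[Doc]:
    """Return up to k documents sharing the most words with the query."""
    query_words = _words(query)
    scored = [(len(query_words & _words(d.text)), d) for d in docs]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1].doc_id))
    return [d for _, d in scored[:k]]


def answer_with_citations(query: str, docs: list[Doc], k: int = 2) -> str:
    """Quote the best-matching passages, each followed by its source ID."""
    hits = retrieve(query, docs, k)
    if not hits:
        return "No supporting source found."
    # A real system would give `hits` to the LLM as context and ask it to cite them.
    return " ".join(f"{d.text} [{d.doc_id}]" for d in hits)


if __name__ == "__main__":
    corpus = [  # hypothetical mini-corpus built from figures quoted in this article
        Doc("msft-q4", "Microsoft reported revenue of $56.2 billion for the quarter."),
        Doc("azure-q4", "Azure revenue grew 26% in the fourth quarter."),
        Doc("palm", "PaLM was trained on 6144 TPU v4 chips."),
    ]
    print(answer_with_citations("How much did Azure revenue grow?", corpus, k=1))
    # → Azure revenue grew 26% in the fourth quarter. [azure-q4]
```

Returning "No supporting source found." instead of guessing mirrors the goal of these systems: answers that cannot be backed by a source should be flagged rather than invented.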

P.S.- If you need help with AI integration, Ionio can help you! Schedule a free call with our CEO Rohan today!
