Imagine giving your customers a seamless experience where they can see exactly how your products look on them: not just on idealized model figures, but fitted to their own shape and size. This isn't just about enhancing the shopping experience; it's about revolutionizing it.

With Virtual Try-On (VTON) technology, your business can help customers feel sure about their choices and enjoy shopping in a whole new way. Are you ready to see how this simple yet powerful tool can help your business connect more closely with your shoppers? Let's dive right in!

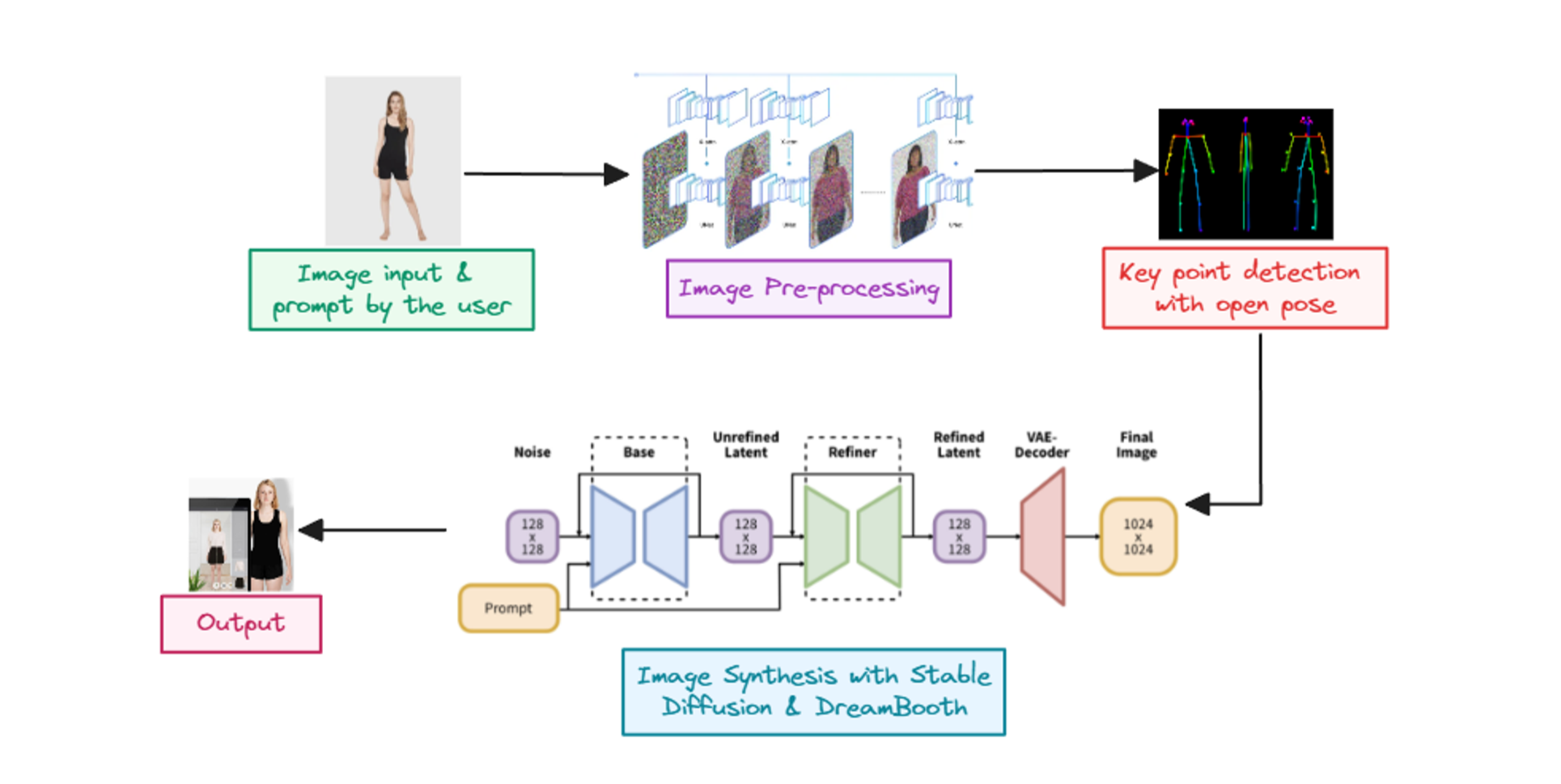

VTON technologies are more than just digital dressing rooms; they integrate several AI models, including Stable Diffusion, DreamBooth, convolutional neural networks (CNNs), and ControlNet. These tools work together in a pipeline to create realistic and personalized shopping experiences that can transform the way we think about shopping online. If you're curious about the whys and hows of the technical aspects, you can read more about these technologies in our detailed blogs here.

How Does Virtual Clothing Try-On Work?

Virtual try-on technology employs several advanced tools to create a realistic and interactive shopping experience. Stable Diffusion and DreamBooth generate detailed, customized images of clothing items by gradually transforming noise into structured images. The EfficientNetB3 CNN model extracts precise features from these images, helping the clothes look realistic on different body types. Additionally, OpenPose estimates keypoints on a person's image, which helps map the clothing onto the user's body according to their posture and movements. The model files are available on the Hugging Face Model Hub.

Virtual Try-On Pipeline

The VTON pipeline begins by capturing neutral garment images and the person’s image. From these, a Control Point Regressor (Rc) identifies key control points based on the body's pose, which are essential for draping the garment accurately. Once the person's semantic layout has been predicted, the system calculates a warp transformation that aligns the garment onto the user’s body, adjusting the garment image to fit the body’s contours. Rendering policies can then adjust the control points to simulate different styles or alterations, such as opening the bottom of a jacket wider. The adjusted control points guide the warping function so that the garment fits the person's body accurately.

Finally, an image generator synthesizes the final image, displaying the garment as worn by the person, adjusted to the specified style. This entire process involves sophisticated AI components that enhance realism and provide flexibility in garment presentation, crucial for both designers and online retailers.
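To make the warping step a little more concrete, here is a minimal sketch that fits an affine transform from three garment control points to three matching body points and then moves any garment pixel with it. It is an illustration under simplifying assumptions, not the pipeline's actual warping function: real systems typically use many control points and a non-rigid warp, and the names `fit_affine`, `warp_point`, and the sample coordinates are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Affine = Tuple[float, float, float, float, float, float]  # (a, b, tx, c, d, ty)


def fit_affine(src: List[Point], dst: List[Point]) -> Affine:
    """Fit x' = a*x + b*y + tx, y' = c*x + d*y + ty from exactly three point pairs."""
    if len(src) != 3 or len(dst) != 3:
        raise ValueError("this sketch expects exactly three control point pairs")
    (x1, y1), (x2, y2), (x3, y3) = src
    det = x1 * (y2 - y3) - y1 * (x2 - x3) + (x2 * y3 - x3 * y2)
    if abs(det) < 1e-12:
        raise ValueError("garment control points must not be collinear")

    def solve(v1: float, v2: float, v3: float) -> Tuple[float, float, float]:
        # Cramer's rule on the 3x3 system [x_i, y_i, 1] . (p, q, r) = v_i
        p = (v1 * (y2 - y3) - y1 * (v2 - v3) + (v2 * y3 - v3 * y2)) / det
        q = (x1 * (v2 - v3) - v1 * (x2 - x3) + (x2 * v3 - x3 * v2)) / det
        r = (x1 * (y2 * v3 - y3 * v2) - y1 * (x2 * v3 - x3 * v2)
             + v1 * (x2 * y3 - x3 * y2)) / det
        return p, q, r

    a, b, tx = solve(dst[0][0], dst[1][0], dst[2][0])
    c, d, ty = solve(dst[0][1], dst[1][1], dst[2][1])
    return (a, b, tx, c, d, ty)


def warp_point(affine: Affine, point: Point) -> Point:
    """Map one garment-image coordinate into the person image."""
    a, b, tx, c, d, ty = affine
    x, y = point
    return (a * x + b * y + tx, c * x + d * y + ty)


# Hypothetical control points: two shoulder seams and the hem centre.
garment_points = [(0.0, 0.0), (100.0, 0.0), (50.0, 120.0)]
body_points = [(40.0, 60.0), (140.0, 60.0), (90.0, 240.0)]

warp = fit_affine(garment_points, body_points)
print(warp_point(warp, (50.0, 60.0)))  # where the garment's mid-chest pixel lands
```

A rendering policy in this picture would simply move one of the `body_points` (for example, pushing the hem point outward) before the transform is fitted, which changes how the whole garment is stretched.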

Feature Extraction using the EfficientNetB3 CNN model

Following pre-processing, the pipeline extracts detailed features from the clothing images using models like EfficientNetB3. This step involves analyzing textures, colors, and patterns critical for realistic rendering. Feature extraction serves a foundational role similar to detecting control points in advanced VTON systems, as it determines how textiles and designs adapt to different body shapes and movements in the virtual environment.
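For intuition about what "extracting features" means, the sketch below applies a single convolution filter to a tiny grayscale fabric patch and pools the response into one number. This is only the basic building block of a CNN, written in plain Python for illustration; EfficientNetB3 stacks many learned filters, whereas the fixed Sobel kernel, the `edge_energy` helper, and the sample patches here are assumptions made for this example.

```python
from typing import List

Image = List[List[float]]

# Fixed 3x3 filter that responds to left-to-right brightness changes.
SOBEL_X: Image = [
    [-1.0, 0.0, 1.0],
    [-2.0, 0.0, 2.0],
    [-1.0, 0.0, 1.0],
]


def conv2d_valid(image: Image, kernel: Image) -> Image:
    """Slide the kernel over the image without padding (cross-correlation, as CNN layers do)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def edge_energy(image: Image) -> float:
    """Global average of the absolute filter response: one scalar 'feature'."""
    response = conv2d_valid(image, SOBEL_X)
    values = [abs(v) for row in response for v in row]
    return sum(values) / len(values)


# A patch with a vertical stripe versus a plain patch.
striped: Image = [[0.0, 0.0, 1.0, 1.0, 0.0, 0.0] for _ in range(4)]
flat: Image = [[0.5] * 6 for _ in range(4)]

print(edge_energy(striped), edge_energy(flat))
```

The striped patch produces a strong response while the plain one produces none, which is the kind of signal a trained network combines across many filters to describe texture and pattern.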

Stable Diffusion

Stable Diffusion is employed to generate highly realistic and detailed images of clothing. This step in the VTON pipeline takes random noise and incrementally structures it into images that not only appear lifelike but are also customized to match specific styles or attributes. This is analogous to fine-tuning the appearance and drape of a garment to ensure it matches the desired look on different virtual body types, which mirrors the control point adjustment in more advanced VTON systems.
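The idea of incrementally structuring noise can be shown with a toy example. The sketch below runs a deterministic, DDIM-style reverse process on a three-value "image", with an oracle standing in for the trained noise-prediction network. It is not Stable Diffusion itself, which works on image latents and is conditioned on text; the schedule values, the `oracle_noise` stand-in, and the target vector are assumptions chosen only to make the loop runnable.

```python
import math
import random
from typing import List


def make_alpha_bars(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Cumulative signal-retention schedule; index 0 means no noise has been added."""
    alpha_bars = [1.0]
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        alpha_bars.append(alpha_bars[-1] * (1.0 - beta))
    return alpha_bars


def oracle_noise(x_t: List[float], target: List[float], alpha_bar: float) -> List[float]:
    """Stand-in for the trained network: returns the noise that explains x_t given the target."""
    signal, noise = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(x - signal * x0) / noise for x, x0 in zip(x_t, target)]


def denoise(target: List[float], num_steps: int = 1000, seed: int = 0) -> List[float]:
    """Start from Gaussian noise and walk it back to a clean sample, one step at a time."""
    rng = random.Random(seed)
    alpha_bars = make_alpha_bars(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for t in range(num_steps, 0, -1):
        ab_t, ab_prev = alpha_bars[t], alpha_bars[t - 1]
        eps = oracle_noise(x, target, ab_t)
        # Estimate the clean sample implied by the current noisy one.
        x0_pred = [(xi - math.sqrt(1.0 - ab_t) * e) / math.sqrt(ab_t) for xi, e in zip(x, eps)]
        # Re-noise that estimate to the (lower) noise level of the previous step.
        x = [math.sqrt(ab_prev) * p + math.sqrt(1.0 - ab_prev) * e for p, e in zip(x0_pred, eps)]
    return x


target_pixels = [0.2, 0.8, 0.5]
print(denoise(target_pixels))
```

In a real model the oracle is replaced by a neural network that has learned to predict the noise, which is what lets the same loop produce new garments rather than reproduce a known target.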

DreamBooth's Role in Customization

DreamBooth enhances the capabilities of Stable Diffusion by customizing the generative process to align with specific clothing attributes or brand styles. This tailoring ensures that the virtual garments are not only realistic but also brand-consistent, offering a personalized shopping experience. By focusing on particular styles, DreamBooth effectively adapts the clothing images for various body shapes, akin to adjusting control points for optimal fit in complex outfits.

OpenPose for Accurate Positioning

OpenPose detects key points on the user’s pose, crucial for aligning clothes properly in a VTON system. It identifies how the user's body is positioned and moves, which helps in placing the garments accurately during the virtual try-on. This step is particularly important for ensuring that adjustments made to control points in the pipeline result in realistically draped garments that react naturally to the user's movements.

To enhance the realism and allow customization of the garment's fit and drape, the pipeline uses garment control points. These points represent key positions on the garment that influence how it fits and moves with the body. Users can manipulate these points to achieve different styles and fits, essentially customizing how the garment looks on them.
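As a rough illustration of how pose keypoints feed garment placement, the sketch below takes two shoulder keypoints and derives a scale, a tilt angle, and an anchor position for a garment image. The keypoint dictionary is hand-written here rather than produced by OpenPose, and `garment_placement` along with its return fields is a hypothetical helper, so treat it as a sketch of the idea rather than the pipeline's alignment logic.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def garment_placement(keypoints: Dict[str, Point], garment_shoulder_px: float) -> Dict[str, float]:
    """Derive scale, rotation, and anchor for a garment from two shoulder keypoints."""
    right = keypoints["RShoulder"]  # appears on the image's left for a front-facing person
    left = keypoints["LShoulder"]
    dx, dy = left[0] - right[0], left[1] - right[1]
    shoulder_width = math.hypot(dx, dy)
    return {
        # How much to resize the garment so its shoulder seam matches the body.
        "scale": shoulder_width / garment_shoulder_px,
        # Tilt of the shoulder line relative to horizontal, in degrees.
        "angle_deg": math.degrees(math.atan2(dy, dx)),
        # Midpoint between the shoulders, used as the garment's anchor.
        "anchor_x": (left[0] + right[0]) / 2.0,
        "anchor_y": (left[1] + right[1]) / 2.0,
    }


# Hand-written keypoints in pixel coordinates (an assumption for this example).
pose = {"RShoulder": (100.0, 200.0), "LShoulder": (300.0, 200.0)}
print(garment_placement(pose, garment_shoulder_px=400.0))
```

If the person leans, the shoulder line tilts and `angle_deg` changes with it, which is how the garment can follow the user's posture instead of being pasted on upright.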

Image Synthesis

The final step is the synthesis of the adjusted image, where the warped garment is combined with the user’s body image. This synthesis is performed by an image generator built on Stable Diffusion and related components, which takes the warped garment, the original user image, and the adjusted layout as inputs. The generator blends these inputs seamlessly, producing a final output that looks realistic and matches the user's desired appearance.
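To see what the generator is given and what it must produce, it helps to look at the simplest possible baseline: alpha compositing the warped garment over the person image using a layout mask. This is a deliberately naive stand-in, not the learned diffusion-based generator described above, which also has to handle shading, wrinkles, and occlusion; the `composite` function and the one-row sample images are assumptions for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
RGBImage = List[List[Pixel]]
Mask = List[List[float]]


def composite(person: RGBImage, garment: RGBImage, mask: Mask) -> RGBImage:
    """Blend garment over person; mask is 1.0 where the garment fully covers the body."""
    output: RGBImage = []
    for person_row, garment_row, mask_row in zip(person, garment, mask):
        row = []
        for p, g, m in zip(person_row, garment_row, mask_row):
            # Per-channel linear blend between the person pixel and the garment pixel.
            row.append(tuple(round(m * gc + (1.0 - m) * pc) for pc, gc in zip(p, g)))
        output.append(row)
    return output


# One-row example: skin-toned person pixels, a blue garment, and a soft-edged mask.
person_img: RGBImage = [[(200, 180, 160), (200, 180, 160), (200, 180, 160)]]
garment_img: RGBImage = [[(20, 40, 200), (20, 40, 200), (20, 40, 200)]]
layout_mask: Mask = [[0.0, 0.5, 1.0]]

print(composite(person_img, garment_img, layout_mask))
```

The half-covered middle pixel shows why a plain blend falls short at garment edges, which is one reason a generative model is used for the final image.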

To dig deeper into the technical details of the VTON pipeline, visit this research paper by the University of Maryland.

Demonstration

For a better understanding of VTON, we’ll use Outfit Anyone, which builds on VTON technology with a two-stream conditional diffusion model designed to address the challenge of generating consistent, high-quality try-on images. Here's a breakdown of the technical aspects:

- Two-stream conditional diffusion model: This model uses two separate processes: one stream processes the garment images, and the other stream manages the integration with the wearer’s body. This separation allows for more precise control over how clothes fit and deform according to body movement and posture.

- Garment deformation: The technology specifically focuses on how garments adjust to different body shapes and movements, ensuring that the virtual clothing appears realistic and moves naturally with the user.

- Scalability: Outfit Anyone is designed to handle various factors such as changes in pose, body shape, and even different styles from realistic to animated (anime) appearances. This makes it versatile across different scenarios and user needs.

- Broad applicability: The model's robustness allows it to be applied not just in controlled environments but also in more dynamic, real-world settings ("in-the-wild" images), making it practical for everyday use in e-commerce.


For a practical demonstration of how Outfit Anyone enhances the VTON experience, let's walk through an example using the Hugging Face Space dedicated to Outfit Anyone.

- Upload Your Garment: Begin by uploading an image of the garment you want to try on. If it's a one-piece dress or coat, upload it to the 'top garment' section and leave the 'lower garment' section empty. The Space provides a few example outfits, and I’m using one of them here. As the outfit is a one-piece dress, I’ll leave the lower garment section empty.

- Model Processing: Once the garment image is uploaded, Outfit Anyone uses its two-stream conditional diffusion model to process the garment separately from the user's body image. This ensures that the clothing adjusts realistically to different postures and body shapes.

- Results: After processing, the model overlays the garment onto a body image, showing how the garment conforms to various movements and poses.


Advantages of Using Virtual Try-On in Retail

Let’s consider a large-scale retailer like Company A, which operates traditionally without personalized digital experiences but has strong production scale and product quality. Now, compare it to Company B, which has incorporated AR and VR technologies for VTON. Company B offers a more engaging shopping experience, allowing customers to see how clothes fit on their digital avatars, matching their body type and size. This approach not only delights customers but also makes the shopping experience much more personal and satisfying.

Enhanced Customer Engagement

VTON technology significantly enhances customer engagement by enabling a dynamic and interactive shopping experience. When customers can see how clothes fit their own bodies via augmented reality, their involvement and interest in the product increase. This leads to longer browsing sessions on retail platforms, as users enjoy the novel experience of virtually trying on different outfits, mixing and matching styles without the need to physically change clothes.

Boosted Conversion Rates

VTON technology can boost conversion rates for retailers. By providing a realistic visualization of how garments look on customers, VTON reduces the uncertainty that often accompanies online shopping. This confidence boost is especially evident during the decision-making process, where seeing the actual fit and drape of clothing on one's avatar can persuade a hesitant shopper to complete a purchase, leading to higher sales and reduced cart abandonment rates.

Company B, using VTON tech, sees higher conversion rates than Company A. Customers at Company B can experiment with different styles virtually, boosting their confidence in purchases, leading to more completed sales and fewer abandoned carts.

Reduction in Return Rates

One of the most tangible benefits of implementing VTON technology is the significant reduction in return rates. By allowing customers to preview how clothes will fit before purchasing, VTON minimizes discrepancies between expectation and reality. This accuracy not only satisfies customers but also reduces the costly logistics involved in processing returns, thereby saving retailers considerable resources in reverse logistics.

With VTON, Company B experiences significantly lower return rates compared to Company A. Customers are less likely to return clothes when they have a clear preview of how these items fit, reducing the costs and logistics associated with handling returns.

Broad Market Appeal

Virtual try-on technology democratizes access to fashion, especially for those unable to visit physical stores—be it due to geographic limitations, physical disabilities, or time constraints. By incorporating VTON, retailers can tap into a broader audience, including people who typically do not shop online due to uncertainty about product fit. Furthermore, VTON can cater to diverse body types, promoting inclusivity by showing how clothes look on various shapes and sizes, thus broadening its appeal and fostering a more inclusive shopping environment.

While Company A sticks to traditional methods, Company B leverages its VTON technology as a key selling point, attracting tech-savvy shoppers and setting a trend in the market. This innovation not only attracts customers looking for a modern shopping experience but also positions Company B as a forward-thinking leader in retail technology.

The growing adoption of VTON technology, evidenced by a 60% awareness rate among consumers according to McKinsey reports, highlights its potential to significantly enhance the shopping experience. VTON not only addresses practical issues like high return rates by enabling more accurate previews of products, but it also boosts consumer engagement and satisfaction. And as augmented and virtual reality technologies continue to evolve, virtual try-on systems will become even more immersive, allowing users to experience a virtual fitting room environment from their homes.

The significant revenue share held by head-mounted devices (64% in 2022 as per Grand View Research) underscores the technological advancements and increasing consumer acceptance of immersive retail experiences. These devices play a crucial role in delivering realistic try-on capabilities and are expected to continue dominating the market.

Moreover, the application of AI and machine learning in VTON allows for highly personalized shopping experiences. By analyzing customer preferences and behavior, retailers can offer tailored recommendations, thereby increasing conversion rates and fostering customer loyalty.

Conclusion

As the retail landscape evolves, VTON technology stands at the forefront, offering revolutionary ways to enhance the shopping experience. With its capability to merge high-tech visuals and user interactivity, VTON not only simplifies shopping but also enriches it, making it more accessible and enjoyable for users everywhere.

Ready to Transform Your Retail Experience?

Curious about integrating Virtual Try-On technology into your business? Book a consultation with us! You'll meet with Rohan and our AI Research team, who can help tailor a Virtual Try-On solution that fits your brand's unique needs. Together, we'll explore how VTON can elevate your business strategy and assist in your growth.