👋 Introduction

As businesses increasingly turn to AI for value generation, the applications of neural network-based techniques and Large Language Models (LLMs) have become pivotal. Advancements in natural language processing have empowered organizations to address various text-related challenges, including classification, summarization, and controlled text creation. While third-party APIs may be convenient, fine-tuning models with proprietary data yields cost-effective and domain-specific solutions that can be securely deployed across various environments. However, choosing the right strategy for fine-tuning is critical.

This blog explores one of the most popular and effective methods for parameter-efficient fine-tuning: Low-Rank Adaptation (LoRA), with a specific focus on QLoRA, an even more efficient variant. The goal is to take an open large language model and fine-tune it to generate a proper response based on the given message. For this exercise, we have chosen the TheBloke/Mistral-7B-Instruct-v0.2-GPTQ model, which is open-source with a permissive license (Apache 2.0), and the Samhita/slack-data-long-responses dataset, both available for download from the HuggingFace Hub.

What is PEFT Fine-tuning?

PEFT, or Parameter-Efficient Fine-Tuning, is a set of techniques designed to make model training more efficient. Traditional fine-tuning updates every parameter of the model, which can be resource-intensive and time-consuming. PEFT techniques, such as Prefix Tuning, P-tuning, and LoRA, reduce the number of trainable parameters, leading to faster and more cost-effective training. For example, in natural language processing, PEFT techniques have been used to improve model performance while reducing resource consumption.

Benefits of PEFT Fine-tuning

Parameter Efficient Fine-tuning (PEFT) offers several compelling benefits, particularly for enterprises and large businesses seeking to fine-tune Large Language Models (LLMs) like BERT and GPT. These benefits include:

- Time Savings: By reducing the number of trainable parameters, PEFT accelerates both the training and testing processes. This efficiency allows for quicker exploration of different models, datasets, and techniques, ultimately saving valuable time in model development and deployment.

- Cost Efficiency: PEFT's memory optimizations enable training on less powerful computational resources, resulting in reduced costs associated with training on large datasets. This cost-effectiveness makes PEFT particularly attractive for businesses looking to scale their AI capabilities without significantly increasing their infrastructure costs.

- Improved Model Performance: Despite the reduction in trainable parameters, PEFT techniques often match, and sometimes improve on, the performance of full fine-tuning. By concentrating the updates on a small set of parameters, PEFT can enhance the overall effectiveness and accuracy of LLMs, leading to better results in various natural language processing tasks.

What is LoRA & QLoRA?

LoRA, short for Low-Rank Adaptation, is a breakthrough in LLM fine-tuning. Traditionally, fine-tuning such models involved adjusting all the weights in the pre-trained model's weight matrices. LoRA takes a different approach: it freezes the pre-trained weights and trains two smaller matrices whose product approximates the update to the larger weight matrix. Together, these two matrices are known as the LoRA adapter. The fine-tuned adapter is then loaded alongside the pre-trained model for use during inference.
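To make the idea concrete, here is a minimal sketch of a single LoRA-adapted layer in plain Python. The tiny dimensions, the matrix names W, A, and B, and the zero initialization of B are illustrative assumptions (the zero initialization follows the common LoRA convention); this is not the PEFT library's implementation.

```python
import random

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

d_in, d_out, r = 6, 4, 2          # toy sizes, chosen only for illustration
rng = random.Random(0)

# Frozen pre-trained weight matrix (d_out x d_in): never updated.
W = [[rng.uniform(-1, 1) for _ in range(d_in)] for _ in range(d_out)]
# Trainable adapter matrices: A is (r x d_in), B is (d_out x r).
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
B = [[0.0] * r for _ in range(d_out)]   # zeros, so the adapter starts as a no-op

def lora_forward(x):
    """Base output plus the low-rank update B @ (A @ x)."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + u for b, u in zip(base, update)]

x = [1.0] * d_in
starts_as_noop = lora_forward(x) == matvec(W, x)

def lora_param_count(d_out, d_in, r):
    """Number of trainable values in A and B combined."""
    return r * (d_in + d_out)

# For a 4096 x 4096 weight matrix and r = 8:
full_params = 4096 * 4096                       # 16,777,216
adapter_params = lora_param_count(4096, 4096, 8)  # 65,536
```

With these numbers the adapter holds well under 1% as many values as the matrix it adapts, which is where the savings in trainable parameters come from.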

QLoRA takes this efficiency further by leveraging quantization techniques. It loads the pre-trained model's weights into GPU memory as quantized 4-bit weights, as opposed to the higher-precision (for example 8-bit or 16-bit) weights typically used with standard LoRA. Despite this reduction in memory usage, QLoRA maintains a similar level of effectiveness to LoRA. By probing and comparing these methods, and tuning QLoRA's hyperparameters, we aim to achieve the best performance with the shortest training time.

LoRA is implemented in the Hugging Face Parameter-Efficient Fine-Tuning (PEFT) library, which makes it easy to use. QLoRA can be used by combining bitsandbytes and PEFT. Additionally, we are going to use the Hugging Face Transformer Reinforcement Learning (TRL) library, which provides a convenient trainer for supervised fine-tuning with seamless integration for LoRA. These libraries offer the necessary tools to fine-tune a chosen pre-trained model to generate appropriate responses to a given message.
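As a rough illustration of what 4-bit storage means, the sketch below quantizes a small block of weights with simple absmax scaling and estimates the memory needed for the weights alone. This is a deliberate simplification: QLoRA itself uses the NF4 data type via bitsandbytes rather than the uniform integer grid shown here, and the 7-billion parameter count and sample weights are round illustrative values.

```python
def quantize_block(weights, bits=4):
    """Absmax-quantize a block of floats to signed integer codes."""
    qmax = 2 ** (bits - 1) - 1                  # 7 for 4-bit codes
    absmax = max(abs(w) for w in weights)
    scale = absmax / qmax if absmax > 0 else 1.0
    codes = [round(w / scale) for w in weights]
    return codes, scale

def dequantize_block(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]

# Made-up weights, for illustration only.
block = [0.12, -0.50, 0.33, 0.07, -0.21, 0.49, -0.02, 0.00]
codes, scale = quantize_block(block)
restored = dequantize_block(codes, scale)
max_error = max(abs(a - b) for a, b in zip(block, restored))

# Back-of-the-envelope weight memory, ignoring the per-block scales.
n_params = 7_000_000_000
gb_16bit = n_params * 16 / 8 / 1e9
gb_4bit = n_params * 4 / 8 / 1e9
print(f"16-bit: {gb_16bit} GB, 4-bit: {gb_4bit} GB, max error: {max_error:.4f}")
```

The rounding error per weight is bounded by half the scale, which is why block-wise scaling (a separate scale for each small block) keeps the approximation usable.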

Setting up the environment

Here, we install all required Python libraries and modules. They help with training efficiency (accelerate), allow for low-rank adaptations (peft), facilitate quantized training (bitsandbytes), and give access to pre-trained models and tools (transformers).
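A quick way to confirm the environment is ready is to check that each package can be found before starting a long training run. The package list below is an assumption based on the libraries named in this post (trl and datasets are included because they are used later); the pip command in the comment is likewise a typical invocation, not necessarily the exact one used here.

```python
import importlib.util

# Typically installed beforehand with something like:
#   pip install accelerate peft bitsandbytes transformers trl datasets
REQUIRED_PACKAGES = ["accelerate", "peft", "bitsandbytes", "transformers", "trl", "datasets"]

def check_packages(names):
    """Return a mapping of package name -> True if the package can be located."""
    return {name: importlib.util.find_spec(name) is not None for name in names}

status = check_packages(REQUIRED_PACKAGES)
for name, available in status.items():
    print(f"{name:<14} {'ok' if available else 'MISSING'}")
```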

Preparing Data for Supervised Fine-tuning

Before we can effectively utilize PEFT for fine-tuning a model for following instructions, it's crucial to prepare the data in a format suitable for supervised fine-tuning. Supervised fine-tuning involves training a pre-trained model to generate text based on a given prompt. This process is supervised because the model is fine-tuned on a dataset containing prompt-response pairs formatted consistently.

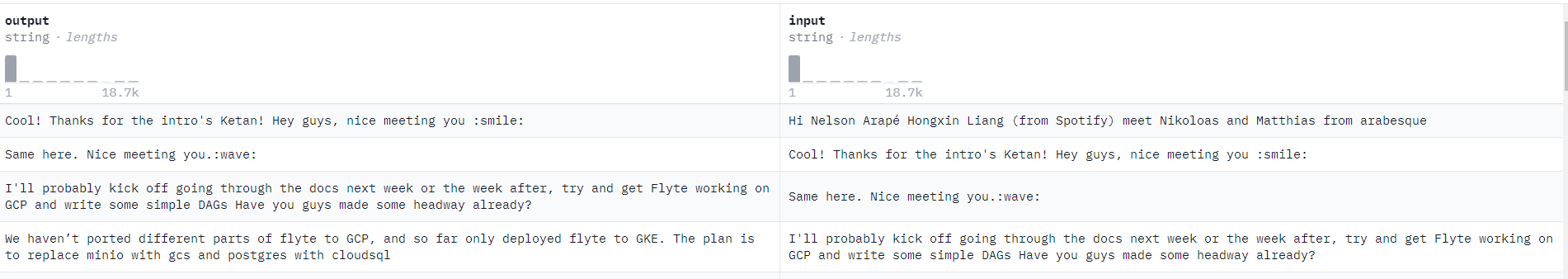

An example from our chosen dataset on the Hugging Face Hub looks as follows:

As useful as this dataset is, it is not well formatted for fine-tuning a language model for instruction following in the manner described above.

The following code snippet loads the dataset from the Hugging Face Hub into memory and transforms the necessary fields into a consistently formatted string representing the instruction, input, and response.

The resulting prompts are added to the formatted_data column in the data frame, which is then loaded into a Hugging Face dataset for supervised fine-tuning. In the prompt, we have used the [INST] special token to leverage instruction fine-tuning. You can read more about the special token here.
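The formatting step can be sketched as follows. The field names instruction, input, and response and the sample row are hypothetical placeholders (the dataset's real column names may differ), and the template follows the [INST] ... [/INST] convention documented for Mistral-Instruct models.

```python
def format_example(instruction, user_input, response):
    """Build one training string in the Mistral-Instruct [INST] format."""
    # Join instruction and input only when an input is actually present.
    prompt = instruction if not user_input else f"{instruction}\n\n{user_input}"
    return f"<s>[INST] {prompt} [/INST] {response}</s>"

# A made-up row standing in for one record of the dataset.
rows = [
    {
        "instruction": "Reply to the following Slack message.",
        "input": "Is the staging server down?",
        "response": "Yes, it is being redeployed and should be back in 10 minutes.",
    },
]

# Equivalent of the formatted_data column described above.
formatted_data = [
    format_example(row["instruction"], row["input"], row["response"]) for row in rows
]
print(formatted_data[0])
```

If the tokenizer already prepends a beginning-of-sequence token, the literal `<s>` in the template may need to be dropped to avoid duplicating it.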

Testing model performance before Fine Tuning

It's useful to evaluate the performance of a pre-trained model without any modifications to establish a baseline before fine-tuning.

The model can be loaded in 8-bit format and evaluated according to the format specified in the model card on Hugging Face for initial performance assessment.
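A baseline check might look like the sketch below. The helper names and the max_new_tokens value are assumptions, and the heavy imports sit inside the function so the prompt helper can be used on its own. Loading a GPTQ checkpoint through transformers will likely also require the GPTQ backend packages to be installed.

```python
MODEL_ID = "TheBloke/Mistral-7B-Instruct-v0.2-GPTQ"  # model named in this post

def build_inference_prompt(query):
    """Wrap a user query in the instruction tokens; the response is left open."""
    return f"[INST] {query} [/INST]"

def generate_response(query, max_new_tokens=256):
    """Load the pre-trained model and generate a reply (downloads weights, needs a GPU)."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, device_map="auto")
    inputs = tokenizer(build_inference_prompt(query), return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)

# Example (not run here because it downloads the model):
# print(generate_response("How do I reset my VPN password?"))
```

Using the same query before and after fine-tuning makes the two responses directly comparable.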


Configure LoRA parameters

When using PEFT to train a model with LoRA or QLoRA, the hyperparameters of the low-rank adaptation process can be defined in a LoRA config. Two key hyperparameters, "r" and "target_modules," significantly affect adaptation quality and are the focus of the tests that follow.

- Rank (r): This parameter determines the rank of the low-rank matrices learned during the fine-tuning process. A lower rank may lead to quicker but potentially lower-quality model training. Increasing r beyond a certain value may not yield significant quality improvements. The value of r and its effect on adaptation quality will be tested.

- Target Modules: This parameter specifies which modules in the model architecture to target during LoRA adaptation. While it's common practice to target only the attention blocks of the transformer to reduce training time and compute resources, recent work suggests that targeting all linear layers may improve adaptation quality.

Additionally, for QLoRA specifically, the pre-trained weights are kept frozen in 4-bit precision during fine-tuning. A rank of 64 is a commonly cited setting for the low-rank approximation rather than a requirement; a smaller rank (r=8) is used later in this post. Adjusting these parameters, along with the dropout rate and lora_alpha, allows you to tune the model based on performance and resource considerations and to find the best setup for the task at hand.
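The hyperparameters discussed above map onto PEFT's LoraConfig roughly as in this sketch. The values for r and target_modules mirror the QLoRA run described in this post; lora_alpha, lora_dropout, and bias are assumed values for illustration. The arguments are kept in a plain dict so they can be inspected without PEFT installed.

```python
# Keyword arguments for peft.LoraConfig; dropout and alpha are illustrative choices.
lora_kwargs = {
    "r": 8,                                   # rank of the low-rank matrices
    "lora_alpha": 16,                         # scaling coefficient (here 2 * r)
    "lora_dropout": 0.05,                     # dropout applied on the adapter path
    "target_modules": ["q_proj", "v_proj"],   # attention projections only
    "bias": "none",                           # leave bias terms untouched
    "task_type": "CAUSAL_LM",                 # decoder-only text generation
}

def build_lora_config(**overrides):
    """Create a LoraConfig, optionally overriding some of the defaults above."""
    from peft import LoraConfig  # imported lazily; requires the peft package

    return LoraConfig(**{**lora_kwargs, **overrides})

# Example: same settings but targeting more layers and a higher rank.
# config = build_lora_config(r=16, target_modules=["q_proj", "k_proj", "v_proj", "o_proj"])
```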

Setting up the training parameters

Define training arguments and create a Trainer instance. A note on training: To perform fine-tuning, the following steps are required:

- Define the LoRA Configuration: Set up the LoRA configuration as discussed earlier, specifying parameters like rank and target modules.

- Prepare the Data: Split the prepped instruction following data into train and test sets and convert them into Hugging Face Dataset objects.

- Define Training Arguments: Set the number of epochs, batch size, and other relevant training hyperparameters. These parameters will remain constant throughout the training process.
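The data split and training arguments from the steps above could be sketched like this. The split helper is a plain-Python stand-in (Hugging Face Dataset objects offer a train_test_split method for the same purpose), and every hyperparameter value shown is an assumption for illustration, not the configuration used for the results in this post.

```python
import random

def split_rows(rows, test_fraction=0.1, seed=42):
    """Shuffle deterministically and split into (train, test) lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

rows = [f"formatted example {i}" for i in range(100)]   # placeholder data
train_rows, test_rows = split_rows(rows)

# Keyword arguments for transformers.TrainingArguments (illustrative values).
training_kwargs = {
    "output_dir": "lora-finetune-output",     # hypothetical output directory
    "num_train_epochs": 1,
    "per_device_train_batch_size": 4,
    "gradient_accumulation_steps": 4,
    "learning_rate": 2e-4,
    "logging_steps": 10,
}

def build_training_arguments():
    """Create the TrainingArguments object passed to the trainer."""
    from transformers import TrainingArguments  # imported lazily

    return TrainingArguments(**training_kwargs)
```

Keeping these arguments fixed across runs means that any difference in results can be attributed to the LoRA settings being compared.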

Let's evaluate the performance of the model after fine-tuning it. We saved the refined model after training and will load it for testing. We will use the same query as before to test the response of the fine-tuned model.

Query :

Response :

The model has clearly been adapted to generate more consistent responses. During training, only 0.7% of the total parameters were trained, and the process was completed in two hours. This was achieved despite adapting only two of the attention projection layers. By adjusting the target_modules and r parameters, you can observe changes in the model's performance and resource consumption. This can help you find the optimal configuration for your specific task.
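To see how target_modules and r drive the adapter size, the following sketch counts LoRA's trainable values per configuration. The layer shapes are assumed from the published Mistral-7B architecture (hidden size 4096, key/value projection size 1024, MLP size 14336, 32 layers) and should be checked against the model's config before relying on them.

```python
# Assumed Mistral-7B dimensions; verify against the model's config.json.
HIDDEN, KV_DIM, MLP_DIM, N_LAYERS = 4096, 1024, 14336, 32

# Linear modules in each transformer block: name -> (in_features, out_features)
MODULE_SHAPES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, MLP_DIM),
    "up_proj": (HIDDEN, MLP_DIM),
    "down_proj": (MLP_DIM, HIDDEN),
}

def lora_trainable_params(target_modules, r):
    """Each adapted module adds r * (in_features + out_features) trainable values."""
    per_layer = sum(r * sum(MODULE_SHAPES[name]) for name in target_modules)
    return per_layer * N_LAYERS

attention_only = lora_trainable_params(["q_proj", "v_proj"], r=8)
all_linear = lora_trainable_params(list(MODULE_SHAPES), r=8)
print(f"q_proj + v_proj, r=8: {attention_only:,}")
print(f"all linear,      r=8: {all_linear:,}")
```

Targeting every linear layer gives roughly six times as many trainable values as the two attention projections at the same rank, and doubling r doubles the count in either case.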

QLoRA Fine-tuning using HuggingFace

To perform QLoRA fine-tuning with Hugging Face, you'll need to install the bitsandbytes library. It handles the 4-bit quantization and manages low-precision storage and high-precision compute operations. Learn more about quantizing a model here.

To load the model using 4-bit quantization and set up the tokenizer, follow these steps:
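A minimal sketch of this step, assuming the standard BitsAndBytesConfig options in transformers. The specific choices (NF4, double quantization, bfloat16 compute, reusing the EOS token for padding) are common QLoRA settings rather than confirmed details of this post's run. The model id is left as a parameter because bitsandbytes quantizes full-precision weights at load time, so a non-GPTQ checkpoint is likely needed here.

```python
# Keyword arguments for transformers.BitsAndBytesConfig (assumed typical QLoRA values).
bnb_kwargs = {
    "load_in_4bit": True,                   # store base weights in 4-bit
    "bnb_4bit_quant_type": "nf4",           # NormalFloat4 data type
    "bnb_4bit_use_double_quant": True,      # also quantize the quantization constants
    "bnb_4bit_compute_dtype": "bfloat16",   # higher-precision dtype for matmuls
}

def load_4bit_model(model_id):
    """Load a causal LM with 4-bit weights plus its tokenizer (needs a CUDA GPU)."""
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    bnb_config = BitsAndBytesConfig(**bnb_kwargs)
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # Reuse the end-of-sequence token for padding if none is defined.
    if tokenizer.pad_token is None:
        tokenizer.pad_token = tokenizer.eos_token
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=bnb_config,
        device_map="auto",
    )
    return model, tokenizer
```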

After setting up the model and tokenizer, you can proceed with normal training using the HF trainer.

The QLoRA run used r=8 and targeted only the attention blocks, namely "q_proj" and "v_proj", for adaptation. Now, we will evaluate the model again on the same query and observe the response.

Response :

The model's response is now very concise and well-structured. It's logical and relevant. As a reminder, these relatively high-quality results are achieved by fine-tuning less than 1% of the model's weights. According to research, it has been observed that modifying the value of "r" does not lead to any significant improvement in the quality of adaptation beyond a certain threshold. The most substantial enhancement is observed when all linear layers are targeted during the adaptation process, rather than just the attention blocks.

Best Practices for Fine-Tuning with LoRA and QLoRA

- Optimal Scaling Coefficient: A widely used rule of thumb is to choose the scaling coefficient alpha as two times the rank parameter r, that is, alpha = 2 * r. This often gives good results, but it can be beneficial to experiment with different ratios to find the optimal configuration for your specific task and model.

- Enable LoRA for More Layers: While experiments often focus on enabling LoRA for select weight matrices, such as the Key and Value matrices in each transformer layer, consider enabling LoRA for additional layers, such as Query matrices, linear layers between multihead attention blocks, and the linear output layer. This can increase the number of trainable parameters and memory requirements but may improve modeling performance noticeably. Learn more about this here.

- Optimize Adapter Usage: When using adapters, understand that the size of the LoRA adapter obtained through fine-tuning is typically small compared to the pre-trained base model. During inference, both the adapter and the pre-trained model need to be loaded, so memory requirements stay similar to those of the base model. Keeping the adapter separate adds a small amount of inference latency; merging the adapter into the pre-trained weights removes it and can be done with a single line of code using the PEFT library.

- Consider the Trade-offs of Merging Adapters: While merging adapters can reduce inference latency, it eliminates the ability to efficiently share a single large pre-trained model across several task-specific adapters. The decision to merge weights depends on the specific use case and acceptable inference latency.
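The scaling and merging points above can be checked numerically with a toy example. The matrices are made up, and the merge formula W + (alpha / r) * B A is the standard LoRA formulation; in PEFT, the equivalent operation on a real model is exposed as merge_and_unload().

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def matvec(m, v):
    """Multiply a matrix by a vector."""
    return [sum(x * y for x, y in zip(row, v)) for row in m]

r = 2
alpha = 2 * r              # the alpha = 2 * r rule of thumb
scale = alpha / r          # the adapter output is multiplied by alpha / r

W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]    # frozen base weights (2 x 3)
B = [[0.5, 0.0], [0.0, -0.5]]              # adapter matrix B (2 x r)
A = [[1.0, 2.0, 0.0], [0.0, 1.0, 1.0]]     # adapter matrix A (r x 3)
x = [1.0, 2.0, 3.0]

# Unmerged inference: the base path and the adapter path are computed separately.
unmerged_out = [
    base + scale * update
    for base, update in zip(matvec(W, x), matvec(B, matvec(A, x)))
]

# Merged inference: fold the adapter into the weights once, then do one matmul.
BA = matmul(B, A)
W_merged = [
    [w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, BA)
]
merged_out = matvec(W_merged, x)

print(unmerged_out, merged_out)   # both paths give the same result
```

Because the merged matrix has the same shape as W, the merged model runs exactly like the base model, which is why merging removes the extra adapter computation at the cost of no longer being able to swap adapters.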

Conclusion

In conclusion, fine-tuning a model for your business can significantly improve its performance and customization. This process allows you to tailor the model to your specific needs and knowledge, providing a level of customization that standard models may not offer. Whether you're using a RAG pipeline or need more in-depth customization, fine-tuning can help you achieve your goals effectively.

Elevate Your Business with Generative AI

The field of artificial intelligence is constantly changing, and many businesses are automating their workflows and making their lives easier by integrating AI solutions into their products.

Do you have an idea waiting to be realized? Book a call to explore the possibilities of generative AI for your business.

Thanks for reading 😄.
